AiiNTENSE: AI for intensive care units

The start-up AiiNTENSE, incubated at IMT Starter, develops healthcare decision support tools designed to advise intensive care staff on the most appropriate therapeutic procedures. To this end, it is building a data platform covering the diseases and conditions encountered in intensive care, which it has made available to researchers. In doing so, it aims to support the launch of clinical studies and to advance medical knowledge.

Patients are often admitted to intensive care units for neurological reasons, particularly coma. Conversely, patients leaving these units are at risk of neurological complications that may affect their cognitive and functional capacities. These situations pose diagnostic, therapeutic and ethical problems for physicians. How can neurological damage following intensive care be predicted in the short, medium and long term so that appropriate care can be provided? How will a coma patient’s neurological condition evolve: toward brain death, a vegetative state or a partial recovery of consciousness? An incorrect prognosis could have tragic consequences.

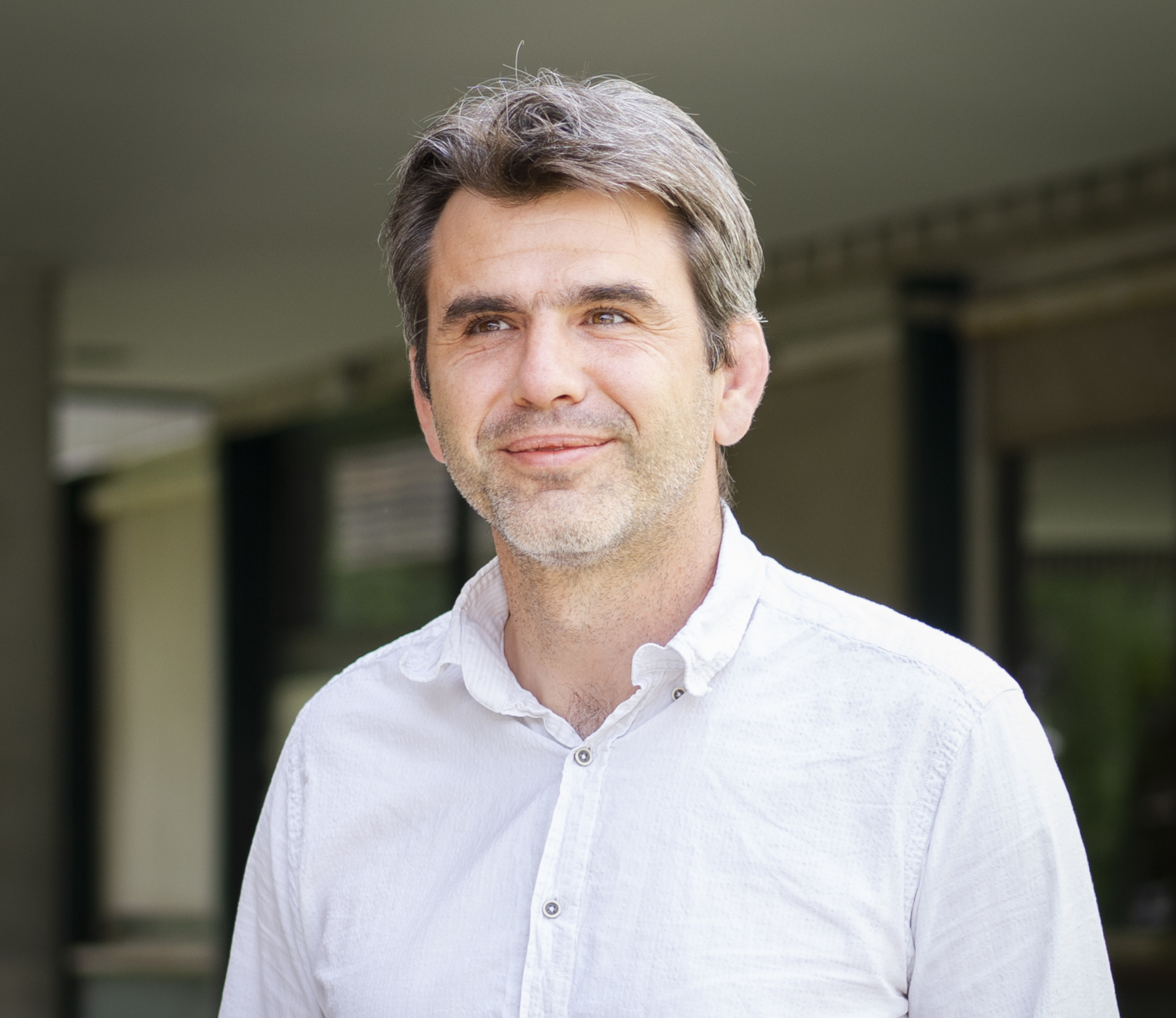

In 2015, Professor Tarek Sharshar, a neurologist specializing in intensive care, identified a twofold need for training: neurology training for intensivists on the one hand, and intensive care training for neurologists on the other. He proposed a tele-expertise system connecting the two communities. In 2017, this project gave rise to AiiNTENSE, a start-up incubated at IMT Starter, whose focus soon expanded. “We started out with our core area of expertise, neuro-intensive care. Drawing on support from other experts and learned societies, we then shifted to developing decision support tools for all of the diseases and conditions encountered in intensive care units,” says Daniel Duhautbout, co-founder of AiiNTENSE. The start-up is building a database of patient records that it analyzes using artificial intelligence algorithms.

AI to aid in diagnosis and prognosis

The start-up team is working on a prototype for post-cardiac arrest coma. Experts largely agree on the methods for assessing neurological prognosis in this condition. Yet in 50% of cases, physicians are still unable to determine whether or not a patient will awaken from the coma. “Providing a prognosis for a patient in a coma is extremely complex and many available variables are not taken into account, due to a lack of appropriate clinical studies and tools to make use of these variables,” explains Daniel Duhautbout. That’s where the start-up comes in.

In 2020, AiiNTENSE will launch its pilot prototype in five or six hospitals in France and abroad. This initial tool is built first and foremost on patient records drawn from the hospital’s information system, which contain all the data relevant to medical decision-making. These records include structured biomedical information as well as unstructured clinical data (hospitalization or examination reports). To exploit the latter, the start-up relies on natural language processing technology. The result is patient records containing semantically structured, homogenized data that take international interoperability standards into account.
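To make the idea of turning unstructured reports into structured, homogenized fields more concrete, here is a deliberately simplified Python sketch. It is not AiiNTENSE’s pipeline: the field names, the regular expressions and the `structure_report` function are hypothetical, and real clinical language processing relies on trained models and standard medical terminologies rather than a few hand-written patterns.

```python
import re

# Hypothetical patterns mapping free-text mentions to normalized field names.
# They only illustrate the idea of structuring free text; they are not a
# clinical-grade extractor.
PATTERNS = {
    "glasgow_coma_score": re.compile(r"\b(?:GCS|Glasgow)\D{0,20}?(\d{1,2})\b", re.IGNORECASE),
    "temperature_celsius": re.compile(
        r"\btemp(?:erature)?\D{0,10}?(\d{2}(?:[.,]\d)?)\s*°?\s*C\b", re.IGNORECASE
    ),
    "heart_rate_bpm": re.compile(r"\b(?:HR|heart rate)\D{0,10}?(\d{2,3})\s*(?:bpm|/min)", re.IGNORECASE),
}


def structure_report(text: str) -> dict[str, float]:
    """Extract the fields above from one free-text report; absent fields are omitted."""
    record: dict[str, float] = {}
    for field, pattern in PATTERNS.items():
        match = pattern.search(text)
        if match:
            # Accept a decimal comma (common in French reports) as well as a decimal point.
            record[field] = float(match.group(1).replace(",", "."))
    return record


report = "Patient admitted after cardiac arrest. GCS 5 on arrival, temperature 36,8 °C, heart rate 112 bpm."
print(structure_report(report))
# {'glasgow_coma_score': 5.0, 'temperature_celsius': 36.8, 'heart_rate_bpm': 112.0}
```

Every report, whatever its wording, ends up as the same small set of named numeric fields, which is what makes records from different hospitals comparable.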

A use for each unit

The start-up is developing software that will eventually meet intensivists’ immediate needs. It will provide a quick, comprehensive view of an individual patient’s situation. The tool will recommend therapeutic procedures or additional observations to help reach a diagnosis. It will also help the physician assess how the patient’s condition is likely to evolve. Intensivists will still be able to consult an expert from AiiNTENSE’s tele-expertise network to discuss cases for which the medical knowledge built into the platform is not yet sufficiently advanced.

The start-up also indirectly addresses hospital management concerns. Proposing accurate, timely diagnoses limits unnecessary exams, which shortens hospital stays and therefore lowers costs. In addition, the tool improves the traceability of analyses and medical decisions, a key medico-legal priority.

In the long term, the start-up aims to develop a model of precision intensive care, meaning increasingly reliable diagnoses and prognoses tailored to each patient. “For the time being, for example, it’s hard to determine what a patient’s cognitive state will be when they awaken from a coma. We need clinical studies to improve our knowledge,” says Daniel Duhautbout. The database and its analytical tools are therefore open to researchers who wish to advance knowledge of conditions requiring intensive care. The results of their studies will then be disseminated through AiiNTENSE’s integration platform.

Protecting data on a large scale

To offer a viable, sustainable solution, AiiNTENSE must comply with the GDPR and protect personal health data. To this end, the team is collaborating with researchers at IMT Atlantique and plans to use blockchain technology to secure the data. Watermarking, which embeds an invisible mark in the data, also appears to be a promising approach. It would make it possible to trace who has used the data and, if data leaked to external servers, to identify who may have been responsible. “We also take care to ensure the integrity of our algorithms so that they support physicians confronted with critical neurological patients in an ethical manner,” concludes Daniel Duhautbout.
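The tracing idea behind watermarking can be illustrated with a toy fingerprinting scheme in Python. This is an assumption-laden sketch, not the approach being studied with IMT Atlantique: each recipient (the names `hospital_a`, `hospital_b` and so on are hypothetical) receives its own copy of a column of integer measurements, in which a subset of values chosen with a secret key has its least significant bit set to a key-derived value. Given a leaked copy, the data owner checks whose mark it carries.

```python
import hashlib
import hmac

# Assumption: a secret key held only by the data owner (placeholder value).
SECRET_KEY = b"hypothetical-owner-secret"


def _mark_for(recipient: str, index: int, fraction: int) -> tuple[bool, int]:
    """Keyed decision for one record: is it marked for this recipient, and with which bit?"""
    digest = hmac.new(SECRET_KEY, f"{recipient}:{index}".encode(), hashlib.sha256).digest()
    # Separate digest bytes drive selection and mark value, so the two are unrelated.
    selector = int.from_bytes(digest[:8], "big")
    return selector % fraction == 0, digest[8] & 1


def embed(values: list[int], recipient: str, fraction: int = 2) -> list[int]:
    """Return a recipient-specific copy: about 1/fraction of values get a keyed parity bit."""
    out = list(values)
    for i, v in enumerate(out):
        selected, bit = _mark_for(recipient, i, fraction)
        if selected:
            out[i] = (v & ~1) | bit  # changes the value by at most 1
    return out


def match_score(values: list[int], recipient: str, fraction: int = 2) -> float:
    """Fraction of this recipient's marked positions whose parity matches its mark."""
    hits = total = 0
    for i, v in enumerate(values):
        selected, bit = _mark_for(recipient, i, fraction)
        if selected:
            total += 1
            hits += (v & 1) == bit
    return hits / total if total else 0.0


def identify_leaker(leaked: list[int], recipients: list[str], fraction: int = 2) -> str:
    """The true recipient scores 1.0; unrelated recipients score about 0.5 by chance."""
    return max(recipients, key=lambda r: match_score(leaked, r, fraction))
```

For example, `identify_leaker(embed(data, "hospital_b"), ["hospital_a", "hospital_b", "hospital_c"])` returns `"hospital_b"` for a reasonably long `data` list. Because the marks depend on a key only the owner holds, a recipient cannot easily locate or strip them. A real scheme would also have to survive deleted or reordered records (for instance by keying on record identifiers rather than positions), resist collusion between recipients, and restrict which fields may be altered, since a change of 1 is harmless for a heart rate but not for a coma score.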