The Sun alone sends more than 64 billion neutrinos per second through each cm² of the Earth, and they pass right through it. These elementary particles of matter are everywhere, yet they remain almost entirely elusive. The key word is almost… The European infrastructure KM3NeT, currently being installed in the depths of the Mediterranean Sea, has been designed to detect the extremely faint light generated by neutrino interactions in the water. Researcher Richard Dallier from IMT Atlantique offers insight into the major scientific and technical challenge of searching for neutrinos.

These “little neutral particles” are among the most mysterious in the universe. “Neutrinos have no electric charge, very low mass and move at a speed close to that of light. They are hard to study because they are extremely difficult to detect,” explains Richard Dallier, member of the KM3NeT team from the Neutrino group at Subatech laboratory[1]. “They interact so little with matter that only one particle out of 100 billion encounters an atom!”

Although their existence was first postulated in the 1930s by physicist Wolfgang Pauli, neutrinos were not confirmed experimentally until 1956, by American physicists Frederick Reines and Clyde Cowan. Reines was awarded the Nobel Prize in Physics for this discovery in 1995; Cowan had died in 1974. This was a small revolution for particle physics. “Neutrinos could explain the excess of matter that made our existence possible. The Big Bang created as much matter as antimatter, but the two annihilated each other very quickly. So there should not be any left! We hope that studying neutrinos will help us understand this imbalance,” Richard Dallier explains.

The Neutrino Saga

While there is still much to discover about these bashful particles, we do know that neutrinos exist in three forms or “flavors”: the electron neutrino, the muon neutrino and the tau neutrino. The neutrino is certainly an unusual particle, capable of transforming over the course of its journey. This phenomenon is called oscillation: “The neutrino, which can be generated from different sources, including the Sun, nuclear power plants and cosmic rays, is born as a certain type, takes on a hybrid form combining all three flavors as it travels and can then appear as a different flavor when it is detected,” Richard Dallier explains.
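To put numbers on this change of identity, here is a minimal sketch of the textbook two-flavor vacuum approximation, P = sin²(2θ)·sin²(1.267·Δm²·L/E), with the mass-squared difference Δm² in eV², the distance travelled L in km and the energy E in GeV. It is a simplification (the real picture involves three flavors and, for neutrinos crossing the Earth, matter effects), and the function name and example values are illustrative assumptions, not KM3NeT analysis code.

```python
import math

def two_flavor_oscillation_probability(sin2_2theta: float, delta_m2_ev2: float,
                                       baseline_km: float, energy_gev: float) -> float:
    """Probability that a neutrino born as one flavor is detected as the other,
    in the simplified two-flavor, vacuum-only approximation."""
    # The factor 1.267 absorbs hbar, c and the conversions for eV^2, km and GeV.
    phase = 1.267 * delta_m2_ev2 * baseline_km / energy_gev
    return sin2_2theta * math.sin(phase) ** 2

# Illustrative values (assumptions): near-maximal mixing, an atmospheric-scale
# mass splitting, and an upward-going neutrino that crossed the whole Earth.
p = two_flavor_oscillation_probability(1.0, 2.5e-3, 12_742.0, 10.0)
print(f"{p:.2f}")
```

Because the probability depends on the ratio L/E, neutrinos of the same energy that have travelled different distances before reaching the detector oscillate differently.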

Neutrino oscillation was first revealed in 1998 by Super-Kamiokande, a Japanese neutrino observatory; this discovery earned Takaaki Kajita, of the Super-Kamiokande collaboration, a share of the 2015 Nobel Prize in Physics. This change in identity is key: it provides indirect evidence that neutrinos do have mass, albeit an extremely small one. However, another mystery remains: how are the masses of the three neutrinos ordered (the so-called mass hierarchy)? The answer to this question would further clarify our understanding of the Standard Model of particle physics.

The unusual nature of neutrinos makes them a fascinating area of study. A growing number of dedicated observatories and detectors are being installed at great depths, where the combination of darkness and concentration of matter is ideal. Russia has installed a detector at the bottom of Lake Baikal, and the United States at the South Pole. Europe, for its part, is working in the depths of the Mediterranean Sea, where fishing for neutrinos began in 2008 with the Antares experiment, a unique type of telescope able to detect even the faintest light crossing the depths of the sea. Antares has since made way for KM3NeT, whose sensitivity is improved by orders of magnitude. This experiment brings together nearly 250 researchers from around 50 laboratories and institutes, including four French laboratories. In addition to studying the fundamental properties of neutrinos, the collaboration aims to discover and study the astrophysical sources of cosmic neutrinos.

Staring into the Universe

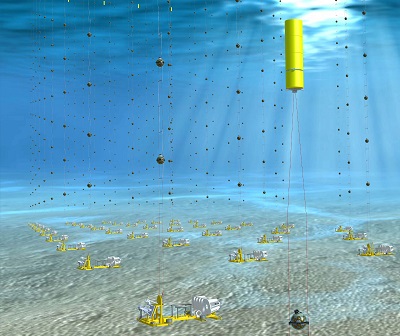

KM3NeT actually consists of two gigantic neutrino telescopes currently being installed on the floor of the Mediterranean Sea. The first, called ORCA (Oscillation Research with Cosmics in the Abyss), is located off the coast of Toulon in France. Submerged at a depth of nearly 2,500 meters, it will eventually comprise 115 strings anchored to the seabed. “Optical detectors are placed on each of these 200-meter flexible strings, spaced 20 meters apart: 18 spheres measuring 45 centimeters spaced 9 meters apart each contain 31 light sensors,” explains Richard Dallier, who is participating in the construction and installation of these modules. “This unprecedented density of detectors is required in order to study the properties of the neutrinos: their nature, oscillations and thus their masses and classification thereof. The sources of neutrinos ORCA will focus on are the Sun and the terrestrial atmosphere, where they are generated in large numbers by the cosmic rays that bombard the Earth.”

Each of KM3NeT’s optical modules contains 31 photomultipliers that detect the light produced by interactions between neutrinos and matter. These spheres, 47 centimeters in diameter (including a covering nearly 2 cm thick!), were designed to withstand pressures of 350 bar.

The second KM3NeT telescope is ARCA (Astroparticle Research with Cosmics in the Abyss). It will be located 3,500 meters below the surface, off the coast of Sicily. There will be twice as many strings, longer (700 meters) and spaced further apart (90 meters), but carrying the same number of sensors. With a volume of over one km³ (hence the name KM3NeT, for km³ Neutrino Telescope), ARCA will be dedicated to searching for and observing the astrophysical sources of neutrinos, which are much rarer than atmospheric ones. In total, over 6,000 optical modules containing nearly 200,000 light sensors will be installed by 2022. These numbers make KM3NeT the largest detector in the world, on a par with its cousin IceCube in Antarctica.
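The module and sensor totals follow directly from the per-string figures quoted in this article: 115 ORCA strings, twice as many for ARCA, 18 modules per string and 31 sensors per module. The short calculation below multiplies them out, giving about 192,500 light sensors, i.e. on the order of 200,000. The crude volume estimate at the end assumes, purely for illustration, that each ARCA string covers a square whose side equals the 90-meter spacing; that is not the real layout.

```python
# Figures quoted in the article.
ORCA_STRINGS = 115
ARCA_STRINGS = 2 * ORCA_STRINGS   # "twice as many strings"
MODULES_PER_STRING = 18
SENSORS_PER_MODULE = 31

def totals(strings: int) -> tuple:
    """Return (optical modules, light sensors) for a given number of strings."""
    modules = strings * MODULES_PER_STRING
    return modules, modules * SENSORS_PER_MODULE

total_strings = ORCA_STRINGS + ARCA_STRINGS
modules, sensors = totals(total_strings)
print(total_strings, modules, sensors)  # 345 6210 192510

# Crude ARCA volume (hypothetical simplification): each string "owns" a
# 90 m x 90 m square column as tall as the 700 m string.
ARCA_SPACING_M = 90
ARCA_STRING_LENGTH_M = 700
arca_volume_km3 = ARCA_STRINGS * ARCA_SPACING_M ** 2 * ARCA_STRING_LENGTH_M / 1e9
print(round(arca_volume_km3, 2))  # 1.3
```

Even this rough geometry lands just above one cubic kilometer, consistent with the name of the project.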

Both ORCA and ARCA operate on the same principle: the indirect detection of neutrinos. When a neutrino encounters an atom of matter, such as an atom of air, water or the Earth itself (neutrinos easily travel right through our planet), it can “deposit” its energy there. This energy is instantly converted into the charged particle that matches the neutrino’s flavor: an electron, a muon or a tau. This “daughter” particle continues along nearly the same path as the initial neutrino, at almost the same speed. Because it travels faster than light does in the medium it is crossing, it emits a faint blue light (Cherenkov radiation); it can also interact with surrounding atoms or decay into other particles, which in turn radiate blue light.
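The blue light emitted by such a fast charged particle is known as Cherenkov radiation, and it leaves along a cone whose angle follows from cos θ = 1/(nβ), where n is the refractive index of the medium and β the particle’s speed as a fraction of the speed of light in vacuum. The sketch below evaluates this relation; the refractive index of 1.35 for deep seawater is an approximate value assumed for illustration, and the function name is hypothetical.

```python
import math
from typing import Optional

SPEED_OF_LIGHT_M_PER_NS = 0.299792458  # speed of light in vacuum

def cherenkov_angle_deg(n: float, beta: float = 1.0) -> Optional[float]:
    """Half-opening angle of the Cherenkov cone, from cos(theta) = 1 / (n * beta).

    n: refractive index of the medium; beta: particle speed as a fraction of c.
    Returns None at or below threshold (beta <= 1/n), where no light is emitted.
    """
    cos_theta = 1.0 / (n * beta)
    if cos_theta >= 1.0:
        return None
    return math.degrees(math.acos(cos_theta))

N_SEAWATER = 1.35  # assumed approximate refractive index of deep seawater

print(round(cherenkov_angle_deg(N_SEAWATER), 1))       # 42.2  (degrees, beta ~ 1)
print(round(1 / N_SEAWATER, 2))                        # 0.74  (threshold speed, in units of c)
print(round(SPEED_OF_LIGHT_M_PER_NS / N_SEAWATER, 3))  # 0.222 (light speed in water, m/ns)
```

At roughly 0.22 m/ns, light needs about 40 ns to cross the 9 meters between neighboring modules on an ORCA string, which is why the sensors must time their hits to within nanoseconds.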

“Since this is all happening at the speed of light, an extremely short light pulse of a few nanoseconds occurs. If the environment the neutrino is passing through is transparent, which is the case for the water in the Mediterranean Sea, and the path goes through the volume occupied by ORCA or ARCA, the light sensors will detect this extremely faint flash,” Richard Dallier explains. By comparing when and where several sensors are hit, the direction of the trajectory can be reconstructed and the energy and nature of the original neutrino determined. But regardless of the source, the probability of a neutrino interaction remains extremely low: even with a volume of 1 km³, ARCA expects to detect only a few neutrinos of cosmic origin.
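How can a direction be recovered from a handful of hit sensors? KM3NeT’s actual reconstruction is far more elaborate and is not described in this article. The sketch below instead shows the simple “line fit” first-guess method used in other neutrino telescopes such as IceCube: it treats the hit positions as points moving along a straight line, r_i ≈ r0 + v·t_i, and solves for the velocity v by least squares. The function name, the hit format and the synthetic data are illustrative assumptions.

```python
import math
from typing import List, Sequence, Tuple

# A hit: (arrival time in ns, (x, y, z) sensor position in m). Hypothetical format.
Hit = Tuple[float, Sequence[float]]

def line_fit(hits: List[Hit]) -> Tuple[Tuple[float, float, float], float]:
    """Least-squares fit of r_i = r0 + v * t_i.

    Returns the unit vector along v (the estimated track direction) and |v| in m/ns.
    """
    n = len(hits)
    if n < 2:
        raise ValueError("need at least two hits")
    mean_t = sum(t for t, _ in hits) / n
    mean_r = [sum(r[k] for _, r in hits) / n for k in range(3)]
    var_t = sum((t - mean_t) ** 2 for t, _ in hits)
    if var_t == 0.0:
        raise ValueError("hit times must not all be equal")
    # Slope of each coordinate against time: cov(t, r_k) / var(t).
    v = [sum((t - mean_t) * (r[k] - mean_r[k]) for t, r in hits) / var_t for k in range(3)]
    speed = math.sqrt(sum(c * c for c in v))
    if speed == 0.0:
        raise ValueError("hit positions do not move with time")
    return (v[0] / speed, v[1] / speed, v[2] / speed), speed

# Synthetic, noise-free hits along a track pointing along (0.6, 0, 0.8) at 0.3 m/ns.
hits = [(t, (0.18 * t, 0.0, 0.24 * t)) for t in (0.0, 10.0, 20.0, 30.0)]
direction, speed = line_fit(hits)
print([round(c, 3) for c in direction], round(speed, 3))  # [0.6, 0.0, 0.8] 0.3
```

Real hits sit on sensors around the track rather than on the track itself, so this only yields a rough starting direction for a more detailed fit.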

Neutrinos: New Messengers Revealing a Violent Universe

Seen as cosmic messengers, these phantom particles open a window onto a violent universe. “Among other things, the study of neutrinos will provide a better understanding of cosmic cataclysms,” says Richard Dallier. Collisions of black holes and neutron stars, supernovae and collapsing massive stars produce bursts of neutrinos that reach us without being absorbed or deflected along the way. This means that light is no longer the only messenger from objects in the universe.

Neutrinos have therefore strengthened the arsenal of “multi-messenger” astronomy, which relies on the cooperation of as many observatories and instruments around the world as possible. Each wavelength and each type of particle sheds light on different processes and aspects of astrophysical objects and phenomena. “The more observers and objects observed, the greater the chances of finding something,” Richard Dallier explains. And these extraterrestrial particles may allow us to trace our own origins with greater precision.

[1] SUBATECH is a research laboratory co-operated by IMT Atlantique, the Institut National de Physique Nucléaire et de Physique des Particules (IN2P3) of CNRS, and the Université de Nantes.

Article written for I’MTech (in French) by Anne-Sophie Boutaud

Gérard Dray is a Full Professor at IMT Mines Alès in the Laboratory of Computer Engineering and Production Engineering (LGI2P). He researches and develops information processing methods aimed at digitalizing knowledge in order to support human action and make it more reliable and efficient. These methods are mainly based on artificial intelligence and machine learning. Gérard Dray is responsible for scientific activities in the field of “Health, Aging, Quality of Life”, a cross-cutting area at IMT Mines Alès. To develop this area in close connection with clinical issues, he coordinates the work of LGI2P’s dedicated ICT and Health team, hosted at the EuroMov research center of the University of Montpellier.
