Imaging to help people with brain injuries

Brain injuries involve complex cognitive and neurobiological processes. This is the case for people who have suffered a stroke, and for those in a minimally conscious state, close to a vegetative state. At IMT Mines Alès, Gérard Dray is working on new technology that combines neuroimaging and statistical learning to improve how we observe patients’ brain activity. Ultimately, his work could greatly help in the rehabilitation of trauma patients.

As neuroimaging technology becomes more effective, the brain is slowly losing its mystery, and as our ability to observe what is happening inside this organ becomes more accurate, numerous possibilities are opening up, notably in medicine. For several years, Gérard Dray has been working at IMT Mines Alès on new tools to detect brain activity. More precisely, he aims to improve how we record and interpret the brain signals captured by techniques such as electroencephalography (EEG) or near-infrared spectroscopy (NIRS). In partnership with EuroMov, the University of Montpellier’s research center, and with Montpellier and Nîmes University Hospitals, Dray is applying his research to support patients who have suffered severe brain damage.

This is notably the case for stroke victims: part of their brain no longer receives enough blood from the circulatory system and becomes necrotic. The neurons in this area die, and the patient can lose certain motor functions in their legs or arms. However, this disability is not necessarily permanent. With appropriate rehabilitation, stroke victims can regain part of their motor ability. “This is possible thanks to the plasticity of the brain, which allows it to transfer functions from the necrotic zone to a healthy part of the brain,” explains Gérard Dray.

Towards Post-Stroke Rehabilitation

In practice, this transfer happens during rehabilitation sessions. Over several months, a stroke victim who has lost motor skills is asked to imagine moving the part of their body that they can no longer move. In the first few sessions, a therapist guides the patient’s movement. The patient’s brain begins to associate the command to move with the sensation of the limb moving, and gradually recreates these neural connections in a healthy area of the brain. “These therapies are recent, less than 20 years old,” points out the IMT Mines Alès researcher. Although they have already proven effective, they still have several limitations that Dray and his team are trying to overcome.

One of the problems with these therapies is the great uncertainty about how engaged the patient is. When the therapist moves the patient’s limb and asks them to think about moving it, there is no guarantee that they are doing the exercise correctly. If the patient’s thoughts are not synchronized with the movement, their rehabilitation will be much slower, and in some cases may even be ineffective. Using neuroimaging, researchers want to make sure that the patient is actively engaged, and not just passive, during a kinesiotherapy session. But the researchers want to go one step further: by knowing when the patient is thinking about lifting their arm or leg, part of the rehabilitation can be made autonomous.

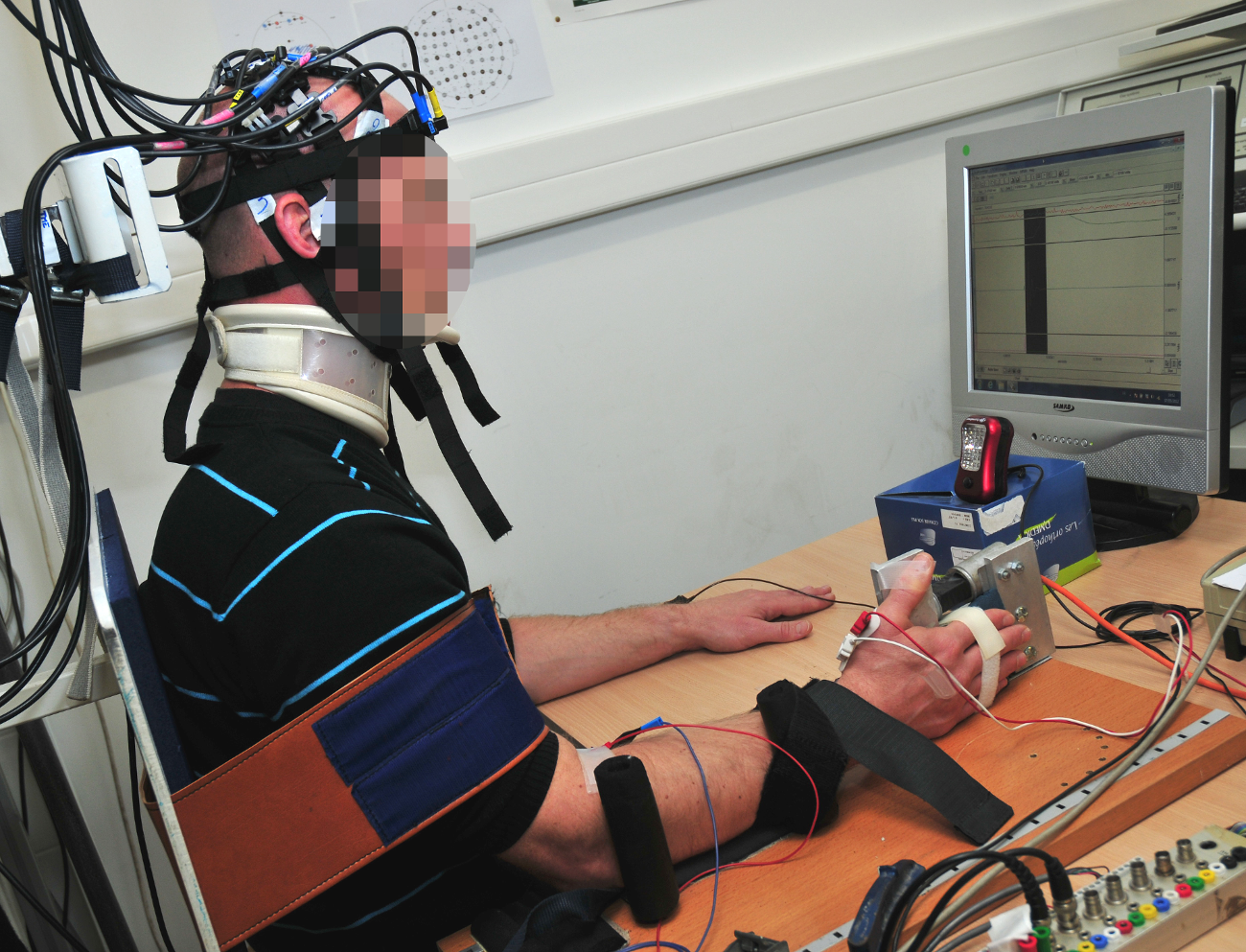

“With our partners, we have developed a device that detects brain signals and is connected to a motorized glove,” says Gérard Dray. “When we detect that the patient is thinking about lifting their arm, the glove carries out the associated movement.” The researcher warns that this cannot and should not replace sessions with a therapist, which are essential for the patient to understand how the rehabilitation works. However, the device allows patients to supplement these sessions with exercises done on their own, which speeds up the transfer of brain functions to a healthy area. As after a fracture, stroke patients often have to do physiotherapy both at the hospital and on their own at home.

A glove which is connected to a brain activity detection system can help post-stroke rehabilitation.

The main challenge for this device is detecting the brain signals associated with moving the limb. When observing brain activity, imaging tools record a constellation of signals linked to all the background activity the brain manages. The neural signal associated with moving the arm gets lost among these background signals. To isolate it, the researchers use statistical learning tools. Patients are first asked to perform guided, supervised motor actions while their neural activity is recorded. Then they move freely over several sessions while being monitored by EEG or NIRS. Once enough data has been collected, the algorithms can classify the signals by action and can therefore determine, through real-time neuroimaging, whether or not the patient is trying to move their arm.
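The article does not say which learning algorithm the team uses. As a rough illustration of the calibrate-then-classify idea, the sketch below assumes a single EEG-like channel, uses the log of signal power in a window as its only feature, and classifies new windows with a nearest-class-mean rule. The synthetic data, and the choice to model imagined movement as lower signal power (loosely inspired by the power drop often reported over motor areas during motor imagery), are assumptions for illustration only.

```python
import math
import random

# Hypothetical sketch: calibrate on labelled trials, then classify new windows.
# Feature: log mean power of one channel. Classifier: nearest class mean.


def log_power(window):
    """Log of the mean squared amplitude of one signal window."""
    return math.log(sum(x * x for x in window) / len(window))


def calibrate(windows, labels):
    """Learn the mean feature value of each label from guided, labelled trials."""
    centroids = {}
    for label in set(labels):
        feats = [log_power(w) for w, l in zip(windows, labels) if l == label]
        centroids[label] = sum(feats) / len(feats)
    return centroids


def classify(window, centroids):
    """Return the label whose mean feature is closest to this window's feature."""
    f = log_power(window)
    return min(centroids, key=lambda label: abs(f - centroids[label]))


def synthetic_window(label, rng, n_samples=250):
    """Synthetic stand-in for a recorded window (assumption, not real EEG):
    imagined movement ("move") is modelled as lower signal power than rest."""
    std = 1.0 if label == "move" else 2.0
    return [rng.gauss(0.0, std) for _ in range(n_samples)]


rng = random.Random(0)

# Calibration phase: guided trials with known labels.
train_labels = ["rest", "move"] * 20
train = [synthetic_window(l, rng) for l in train_labels]
centroids = calibrate(train, train_labels)

# Online phase: classify fresh windows one at a time.
test_labels = ["rest", "move"] * 10
predictions = [classify(synthetic_window(l, rng), centroids) for l in test_labels]
accuracy = sum(p == t for p, t in zip(predictions, test_labels)) / len(test_labels)
print(f"accuracy on synthetic data: {accuracy:.2f}")
```

Real systems typically use many channels, band-pass filtering and richer classifiers; the nearest-mean rule here only keeps the example short.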

In partnership with Montpellier University Hospital, a first clinical trial to test the device was carried out on 20 patients. “Although the results are positive, we are still not completely satisfied with them,” admits Dray. “The algorithms detected the patients’ intention to move their arm in only 80% of cases. This means that two times out of ten, the patient thinks about moving without us being able to pick up that thought with neuroimaging.” To improve detection rates, the researchers are working on numerous algorithms for classifying brain activity. “Notably, we are trying to couple imaging techniques with techniques that can detect fainter signals,” he continues.
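The 80% figure is a detection rate: the share of genuine attempts to move that the algorithm picked up. A minimal sketch of the arithmetic, using hypothetical counts of 8 detected and 2 missed attempts chosen only to reproduce the quoted figure:

```python
def detection_rate(detected, missed):
    """Fraction of genuine movement attempts the classifier flagged
    (also called sensitivity or recall)."""
    total = detected + missed
    if total == 0:
        raise ValueError("no movement attempts recorded")
    return detected / total


# Hypothetical counts matching the 80% figure quoted in the text.
rate = detection_rate(detected=8, missed=2)
print(f"detected: {rate:.0%}, missed: {1 - rate:.0%}")  # detected: 80%, missed: 20%
```

This rate only counts missed attempts; a complete evaluation would also track false alarms, that is, times the glove would move although the patient was not trying to.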

Detecting Consciousness After Head Trauma

Improved brain activity detection tools are not only useful for post-stroke rehabilitation. The IMT Mines Alès team also applies the technology it has developed to people who have suffered head trauma and whose state of consciousness has been altered. After an accident, a victim who is unresponsive but whose respiratory and circulatory functions are in good condition can be in one of several states: fully and normally conscious, in a coma, in a vegetative state, in a minimally conscious state, or with locked-in syndrome. “These different states are characterized by two factors: consciousness and wakefulness,” summarizes Dray. In a normal state, we are both awake and conscious. A person in a coma is neither awake nor conscious. A person in a vegetative state is awake but not conscious of their surroundings.
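The two factors Dray names can be read as two axes. The sketch below encodes each state mentioned in the article as a pair (awake, level of consciousness). The article only specifies the normal, coma and vegetative cases; the values for the minimally conscious state and locked-in syndrome follow standard clinical descriptions and, like the three-level consciousness scale, are assumptions of this sketch.

```python
from enum import Enum


class Consciousness(Enum):
    """Hypothetical three-level scale, for illustration only."""
    NONE = 0
    MINIMAL = 1
    FULL = 2


# (awake, consciousness) for each state listed in the article.
# Minimally conscious and locked-in values are assumptions from clinical usage.
STATES = {
    "normal consciousness": (True, Consciousness.FULL),
    "coma": (False, Consciousness.NONE),
    "vegetative state": (True, Consciousness.NONE),
    "minimally conscious state": (True, Consciousness.MINIMAL),
    "locked-in syndrome": (True, Consciousness.FULL),
}


def states_matching(awake, consciousness):
    """List the states compatible with a given (awake, consciousness) pair."""
    return sorted(name for name, axes in STATES.items() if axes == (awake, consciousness))


print(states_matching(True, Consciousness.FULL))
# ['locked-in syndrome', 'normal consciousness']
```

The output shows why imaging matters here: on these two axes alone, a patient with locked-in syndrome looks like a normally conscious person, yet cannot move or speak to show it.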

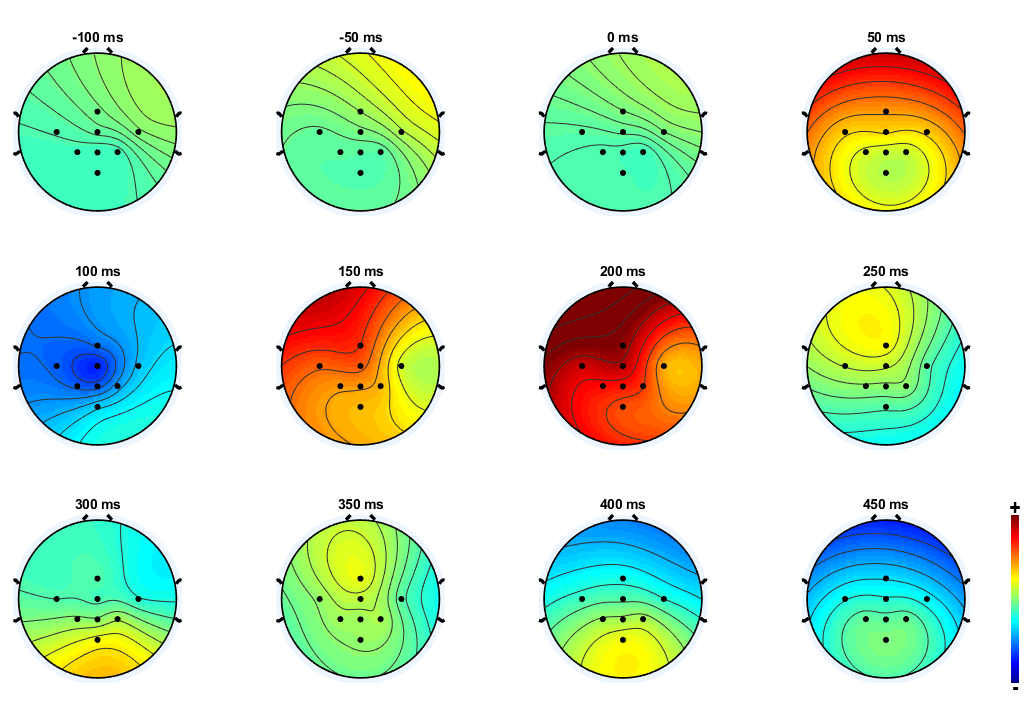

Depending on their state, patients receive different types of care and have different prospects of recovery. The great difficulty for doctors is identifying patients who are awake and unresponsive but who still retain some consciousness. “With these people, there is hope that their consciousness can return to normal,” explains the researcher. However, the patient’s consciousness is sometimes very faint, and detecting it requires high-quality neuroimaging tools. To do this, Gérard Dray and his team use EEG combined with sound stimuli. He explains the process: “We speak to the person and explain that we are going to play them a series of low-pitched tones, and that in between there will be high-pitched tones. We ask them to count the high-pitched tones. Their brain reacts to each sound, but the response to a high-pitched sound is stronger, because these are the sounds the brain has to keep track of. More precisely, a wave called the P300 is generated when our attention is engaged. With the high-pitched sounds, the patient’s brain generates this wave strongly.”
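The protocol Dray describes resembles what is usually called an auditory oddball paradigm: frequent standard tones, rare target tones, and an averaged EEG response (an event-related potential) compared between the two. The sketch below simulates it with synthetic data. The sampling rate, the 20% share of high tones, the noise level, the shape and size of the simulated P300 bump and the 250 to 450 ms measurement window are all illustrative assumptions, not parameters from the team's study.

```python
import math
import random

FS = 250                # hypothetical sampling rate, Hz
EPOCH = int(0.8 * FS)   # samples kept after each tone (800 ms)


def make_sequence(n_tones, p_high, rng):
    """Oddball sequence: mostly low tones, with rare high (counted) tones."""
    return ["high" if rng.random() < p_high else "low" for _ in range(n_tones)]


def synthetic_epoch(tone, rng):
    """Simulated post-stimulus EEG of an attentive patient: noise everywhere,
    plus a positive bump peaking at 300 ms after high tones only."""
    epoch = []
    for i in range(EPOCH):
        t = i / FS
        bump = 5.0 * math.exp(-((t - 0.3) ** 2) / (2 * 0.05 ** 2)) if tone == "high" else 0.0
        epoch.append(bump + rng.gauss(0.0, 10.0))
    return epoch


def average(epochs):
    """Sample-by-sample average across epochs (the event-related potential)."""
    return [sum(samples) / len(epochs) for samples in zip(*epochs)]


def window_mean(erp, start_s=0.25, end_s=0.45):
    """Mean amplitude of an averaged response within a time window."""
    a, b = int(start_s * FS), int(end_s * FS)
    return sum(erp[a:b]) / (b - a)


rng = random.Random(1)
tones = make_sequence(400, 0.2, rng)
epochs = {"high": [], "low": []}
for tone in tones:
    epochs[tone].append(synthetic_epoch(tone, rng))

erp_high = average(epochs["high"])
erp_low = average(epochs["low"])
print(f"{len(epochs['high'])} high tones, {len(epochs['low'])} low tones")
print(f"mean 250-450 ms: high={window_mean(erp_high):.2f}, low={window_mean(erp_low):.2f}")
```

Averaging is the key step: on a single trial the simulated bump is smaller than the noise, but it stands out once dozens of target epochs are averaged.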

Patients who still have some consciousness produce a normal EEG response to the exercise, even though they cannot communicate or move. A victim in a vegetative state, however, does not respond to the stimuli. The results of these first clinical trials on head trauma patients are currently being analyzed. Early feedback is promising: the researchers have already detected differences in P300 wave generation. “Our work is only just beginning,” states Gérard Dray. “We started our research on detecting consciousness in 2015, and it’s a very recent field.” With continuing progress in neuroimaging techniques and learning tools, an entire field of neurology could be about to undergo major advances.
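The article does not say how the team decides that a difference in P300 generation is real rather than noise. One common, assumption-light option is a permutation test on single-trial amplitudes in the P300 window. The sketch below applies it to synthetic per-trial amplitudes for two hypothetical patients, one responsive and one not; all numbers are made up for illustration.

```python
import random


def permutation_test(a, b, n_perm=2000, seed=0):
    """Two-sided permutation test on the difference of means of a and b.

    Returns (observed difference, p-value). Labels are shuffled n_perm
    times; the p-value is the share of shuffles at least as extreme as
    the observed difference (with the usual +1 correction).
    """
    rng = random.Random(seed)
    observed = sum(a) / len(a) - sum(b) / len(b)
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        pa, pb = pooled[:len(a)], pooled[len(a):]
        if abs(sum(pa) / len(pa) - sum(pb) / len(pb)) >= abs(observed):
            extreme += 1
    return observed, (extreme + 1) / (n_perm + 1)


def simulate_patient(target_effect, rng, n_target=40, n_standard=160):
    """Synthetic per-trial mean amplitudes (arbitrary units) in the P300 window."""
    targets = [rng.gauss(target_effect, 2.0) for _ in range(n_target)]
    standards = [rng.gauss(0.0, 2.0) for _ in range(n_standard)]
    return targets, standards


rng = random.Random(42)
results = {}
for name, effect in [("responsive", 3.0), ("unresponsive", 0.0)]:
    results[name] = permutation_test(*simulate_patient(effect, rng))
    diff, p = results[name]
    print(f"{name}: difference={diff:.2f}, p={p:.4f}")
```

In this simulation only the responsive patient has a true effect, so only that case should give a consistently small p-value.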

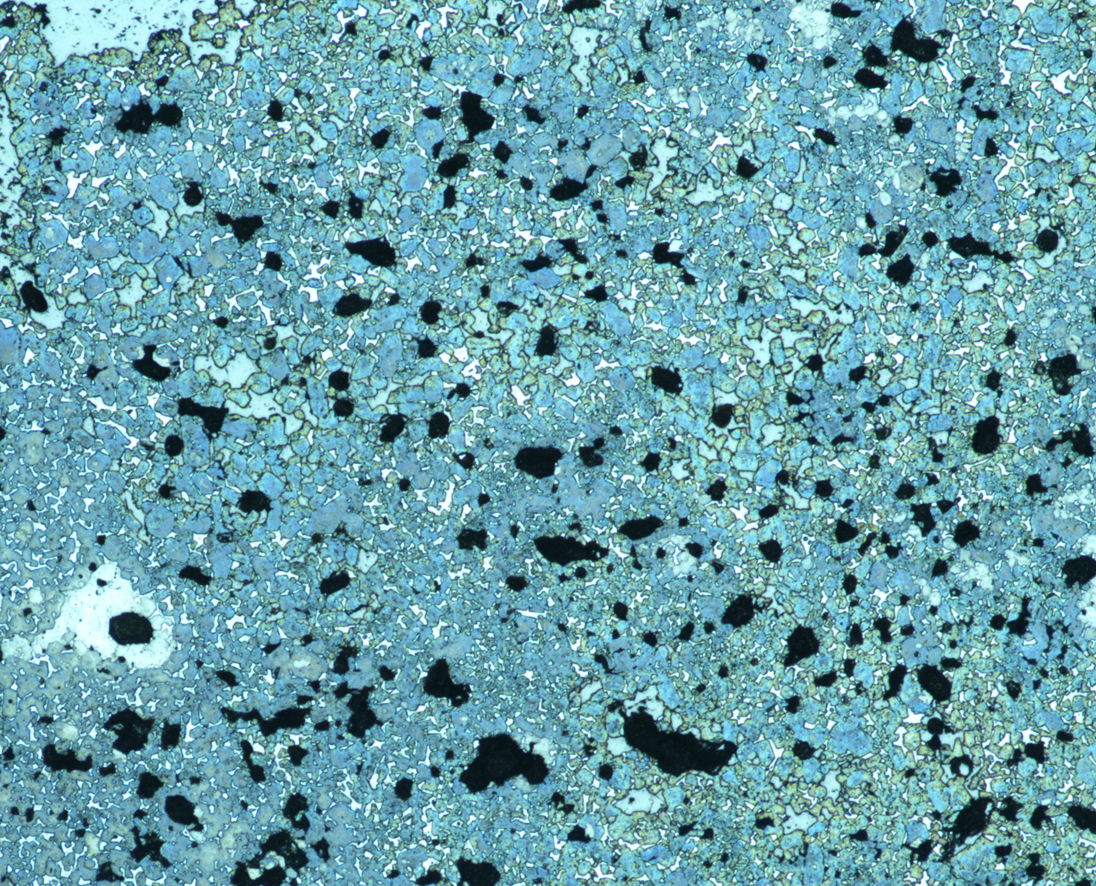

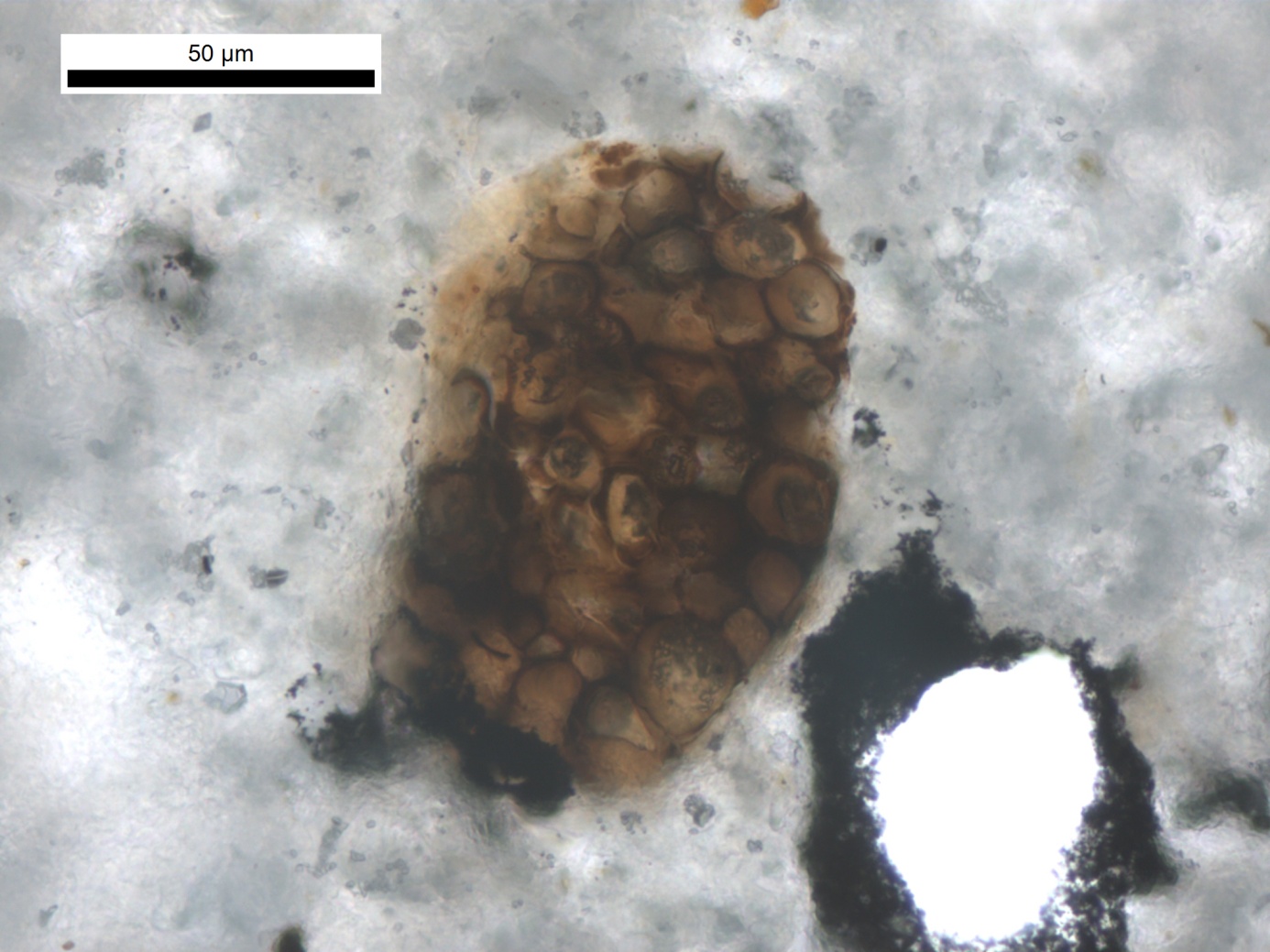

Vincent Thiéry is a geologist and currently an associate professor (qualified to conduct research) at IMT Nord Europe. His research focuses on microstructural investigations of geomaterials using optical microscopy, scanning electron microscopy, confocal laser scanning microscopy… Using these tools, his research themes cover a wide spectrum, ranging from old cements (within the framework of the CASSIS project) to more fundamental geological questions (the first finding of microdiamonds in metropolitan France), as well as industrial applications such as the remediation of polluted soil using hydraulic binders. In his field of expertise, he insists on the need to break down the boundaries between fundamental and engineering sciences so that they can enrich each other.
