CloudButton: Big Data in one click

Launched in January 2019 for a three-year period, the European H2020 project CloudButton seeks to democratize Big Data by drastically simplifying its programming model. To achieve this, the project relies on a new cloud service that frees the end user from having to physically manage servers. Pierre Sutra, researcher at Télécom SudParis, one of the CloudButton partners, shares his perspective on the project.

What is the purpose of the project?

Pierre Sutra: Modern computer architectures are massively distributed across machines, and a single click can trigger computations on tens to hundreds of servers. However, it is very difficult to build this type of system, since it requires linking together many heterogeneous components. The key objective of CloudButton is to radically simplify this approach to programming.

How do you intend to do this?

PS: To accomplish this feat, the project builds on a recent concept that will profoundly change computer architectures: Function-as-a-Service (FaaS). FaaS makes it possible to invoke a function in the cloud on demand, as if it were a local computation. Since it uses the cloud, a huge number of functions can be invoked concurrently, and only the actual usage is charged, with millisecond precision. It is a little like having your own supercomputer on demand.
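To make the idea concrete, here is a minimal sketch of the programming style FaaS aims for: an ordinary function is applied to many inputs at once, and the caller simply collects the results. This is not the CloudButton API. The helper `invoke_all` is a hypothetical name, and a local thread pool stands in for the cloud workers so that the example runs on a single machine.

```python
from concurrent.futures import ThreadPoolExecutor


def word_count(text: str) -> int:
    """An ordinary function that we would like to run 'in the cloud'."""
    return len(text.split())


def invoke_all(func, inputs, max_workers=8):
    """Hypothetical stand-in for a FaaS client.

    Runs func once per input, concurrently, and returns the results in
    input order. A real FaaS platform would ship func to remote workers;
    here a local thread pool plays that role.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(func, inputs))


documents = ["big data in one click", "function as a service", "serverless"]
counts = invoke_all(word_count, documents)  # one invocation per document
total = sum(counts)                         # combine the partial results
print(counts, total)
```

The calling code looks like a local computation. Only the body of `invoke_all` would need to change to dispatch the work to remote functions.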

Where did the idea for the CloudButton project come from?

PS: The idea came from a discussion with colleagues from the Spanish university Rovira i Virgili (URV) during ICDCS 2017 (International Conference on Distributed Computing Systems) in Atlanta. We had just presented a new storage layer for programming distributed systems. This layer was attractive, yet it lacked an application that would make it a true technological novelty. At the time, the University of California, Berkeley had proposed an approach for writing parallel applications on top of FaaS. We agreed that this was what we needed to move forward. It would allow us to use our storage system with the ultimate goal of moving single-computer applications to the cloud with minimal effort. The button metaphor illustrates this concept.

Who are your partners in this project?

PS: The consortium brings together five academic partners: URV (Tarragona, Spain), Imperial College (London, UK), EMBL (European Molecular Biology Laboratory, Heidelberg, Germany), The Pirbright Institute (Surrey, UK) and IMT. It also includes several industrial partners, among them IBM and Red Hat. The institutes specializing in genomics (The Pirbright Institute) and molecular biology (EMBL) will be the end users of the software. They also provide us with new use cases and issues.

Can you give us an example of a use case?

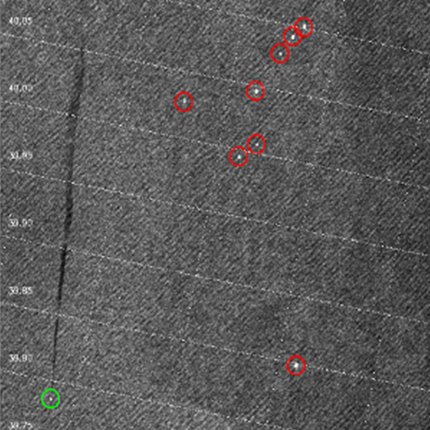

PS: EMBL offers its associate researchers access to a large bank of images from around the world. These images are stamped with information on the subject’s chemical composition by combining artificial intelligence and the expertise of EMBL researchers. For now, the system must calculate the stamps in advance. A use case for CloudButton would be to perform these computations on demand, for example to tailor them to each user request.

How are Télécom SudParis researchers contributing to this project?

PS: Télécom SudParis is working on the storage layer for CloudButton. The goal is to design programming abstractions that are as close as possible to those of standard programming languages. Of course, these abstractions must also be efficient under the FaaS delivery model. This research is being conducted in collaboration with IBM and Red Hat.

What technological and scientific challenges are you facing?

PS: In their current state, storage systems are not designed to handle massively parallel computations over a short period of time. The first challenge is therefore to adapt storage to the FaaS model. The second challenge is to reduce the synchronization between parallel tasks to a strict minimum in order to maximize performance. The third challenge is fault tolerance. Since the computations run on large-scale infrastructure, they are regularly exposed to failures. However, these faults must be hidden in order to offer a simplified programming interface.
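One common way to hide transient faults behind a simple interface is to retry the failed call transparently. The sketch below illustrates that general technique only. It is not a description of how CloudButton handles failures, and the names `TransientFault`, `make_flaky` and `call_with_retries` are hypothetical. Failures are simulated deterministically so that the example is reproducible.

```python
class TransientFault(Exception):
    """Hypothetical error standing in for a crashed or unreachable worker."""


def make_flaky(func, failures_before_success=2):
    """Wrap func so that each distinct input fails a fixed number of times.

    This simulates an unreliable infrastructure in a deterministic way.
    """
    attempts = {}

    def flaky(x):
        attempts[x] = attempts.get(x, 0) + 1
        if attempts[x] <= failures_before_success:
            raise TransientFault(f"attempt {attempts[x]} for input {x!r} failed")
        return func(x)

    return flaky


def call_with_retries(func, x, max_attempts=5):
    """Retry func(x) on transient faults so the caller only sees the result."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func(x)
        except TransientFault:
            if attempt == max_attempts:
                raise  # retries exhausted: surface the fault to the caller


square = make_flaky(lambda x: x * x)
results = [call_with_retries(square, x) for x in [1, 2, 3]]
print(results)
```

Retrying is only safe when re-running the function has no unwanted side effects, which is one reason the design of the storage layer matters for fault tolerance.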

What are the expected benefits of this project?

PS: The success of a project like CloudButton can take several forms. Our first goal is to allow the institutes and companies involved in the project to resolve their computing and big data issues. Second, the software we are developing could also be taken up by the open source community. Finally, we hope that this project will produce new design principles for computer system architectures that will be useful in the long run.

What are the important next steps in this project?

PS: We will meet with the European Commission one year from now for a mid-term assessment. So far, the prototypes and applications we have developed are encouraging. By then, I hope we will be able to present an ambitious computing platform based on an innovative use case.


The CloudButton consortium partners