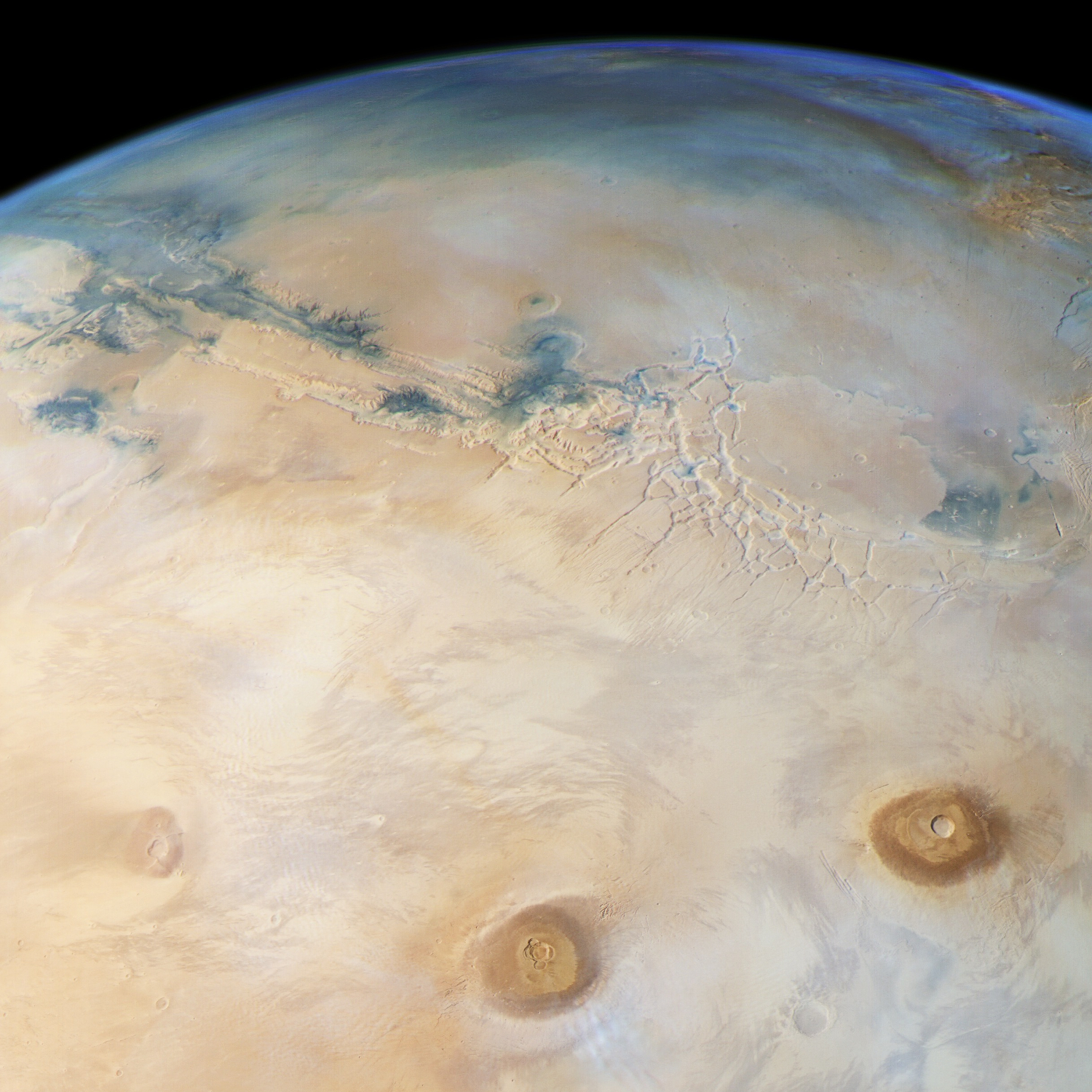

In order to assess the danger posed by fine particles in ambient air, it is crucial to do more than simply take regulatory measurements of their mass in the air. The diversity of their chemical composition means that different toxicological impacts are possible for an equal mass. Chemists at IMT Lille Douai are working on understanding the physicochemical properties of the fine particle components responsible for their adverse biological effects on health. They are developing a new method to indicate health effects, based on measuring the oxidizing potential of these pollutants in order to better identify those which pose risks to our health.

The smaller they are, the greater their danger. That is the rule of thumb that sums up the toxicity of the various types of particles present in the atmosphere. It is based on the ease with which the smallest particles penetrate deep into our lungs and get trapped there. While the size of particles clearly plays a major role in how dangerous they are, the impact of their chemical composition must not be underestimated. For an equal mass of fine particles in the air, those we breathe in Paris are not the same as the ones we breathe in Dunkirk or Grenoble, because the sources that produce them differ. And even within the same city, the particles we inhale vary greatly depending on where we are located in relation to a road or a factory.

“Fine particles are very diverse: they contain hundreds, or even thousands of chemical compounds,” say Laurent Alleman and Esperanza Perdrix, researchers in atmospheric pollution in the department of atmospheric sciences and environmental engineering at IMT Lille Douai. Carboxylic acids and polycyclic aromatic hydrocarbons are just some of the many examples of molecules found in particles in higher or lower proportions. A great number of metals and metalloids can be added to this organic cocktail: copper, iron, arsenic, etc., as well as carbon black. The final composition of a fine particle therefore depends on its proximity to sources of each of these ingredients. Copper and antimony, for example, are commonly found in particles near roads, produced by cars when braking, while nickel and lanthanum are typical of fine particles produced by the petrochemical industry.


Today, only the mass concentration as a function of certain sizes of particles in the air is considered in establishing thresholds for warning the population. For Laurent Alleman and Esperanza Perdrix, it is important to go beyond mass and size to better understand and prevent the health impacts of particles based on their chemical properties. Each molecule, each chemical species present in a particle has a different toxicity. “When they penetrate our lungs, fine particles break down and release these components,” explains Laurent Alleman. “Depending on their physicochemical properties, these exogenous agents will have a more or less serious aggressive effect on the cells that make up our respiratory system.”

Measuring particles’ oxidizing potential

This aggression mainly takes the form of chemical oxidation reactions in cells: this is oxidative stress. This effect induces deterioration of biological tissue and inflammation, which can lead to different pathological conditions, whether in the respiratory system (asthma, chronic obstructive pulmonary disease) or throughout the body. Since the chemical components and molecules produced by these stressed cells enter the bloodstream, they also create oxidative stress elsewhere in the body. “That’s why fine particles are also responsible for cardiovascular diseases such as cardiac rhythm disorders,” says Esperanza Perdrix. When it becomes too severe and chronic, oxidative stress can have mutagenic effects by altering DNA and can promote cancer.

For researchers, the scientific challenge is therefore to better assess a fine particle’s ability to cause oxidative stress. At IMT Lille Douai, the approach is to measure this ability in test tubes by determining the resulting production of oxidizing molecules for a specific type of particle. “We don’t directly measure the oxidative stress produced at the cellular level, but rather the fine particle’s potential to cause this stress,” explains Laurent Alleman. As such, the method is less expensive and quicker than a study in a biological environment. Most importantly, “Unlike tests on biological cells, measuring particles’ oxidizing potential is quick and can be automated, while giving us a good enough indication of the oxidative stress that would be produced in the body,” says Esperanza Perdrix. This winning combination could make oxidizing potential a reference for analyzing the toxicity of fine particles and for preventing it on an ongoing, large-scale basis.

To measure the toxicity of fine particles, researchers are finding alternatives to biological analysis.

This approach has already allowed the IMT Lille Douai team to measure the harmfulness of metals. They have found that copper and iron are the chemical elements with the highest oxidizing potential. “Iron reacts with the hydrogen peroxide in the body to produce what we call free radicals: highly reactive chemical species with short lifespans, but very strong oxidizing potential,” explains Laurent Alleman. If the iron provided by the fine particles is not counterbalanced by an antioxidant — such as vitamin C — the radicals formed can break molecular bonds and damage cells.

Researchers caution, however, that, “Measuring oxidizing potential is not a unified method; it’s still in the developmental stages.” It is based on the principle of bringing together the component whose oxidizing potential is to be assessed with an antioxidant, and then measuring the quantity or rate of antioxidant consumed. In order for oxidizing potential to become a reference method, it still has to be more widely adopted by the scientific community, demonstrate its ability to accurately assess biological oxidative stress produced in vivo, and be standardized.
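To make the principle above concrete, here is a minimal sketch of how a rate of antioxidant consumption could be estimated from a series of readings. It is not the IMT Lille Douai team's protocol: the function names, the units, the straight-line fit and the subtraction of a particle-free blank are all assumptions made for illustration, and the numbers are invented.

```python
def consumption_rate(times_min, antioxidant_conc):
    """Estimate how fast the antioxidant is consumed.

    Fits a straight line (ordinary least squares) to concentration
    versus time and returns the slope with its sign flipped, so a
    falling concentration gives a positive rate.
    Units are whatever the inputs use (here: concentration per minute).
    """
    n = len(times_min)
    if n < 2 or n != len(antioxidant_conc):
        raise ValueError("need at least two paired readings")
    mean_t = sum(times_min) / n
    mean_c = sum(antioxidant_conc) / n
    sxx = sum((t - mean_t) ** 2 for t in times_min)
    if sxx == 0:
        raise ValueError("time points must not all be identical")
    sxy = sum((t - mean_t) * (c - mean_c)
              for t, c in zip(times_min, antioxidant_conc))
    return -sxy / sxx


def blank_corrected_rate(sample_rate, blank_rate):
    """Subtract the consumption seen without particles (assumed step)."""
    return sample_rate - blank_rate


# Invented readings, for illustration only (not measured data).
times = [0, 10, 20, 30]                  # minutes
with_particles = [100, 90, 80, 70]       # antioxidant remaining
without_particles = [100, 99, 98, 97]    # blank: no particle extract

sample_rate = consumption_rate(times, with_particles)
blank_rate = consumption_rate(times, without_particles)
print(blank_corrected_rate(sample_rate, blank_rate))
```

A fitted slope uses every reading instead of only the first and last, which makes the estimate less sensitive to a single noisy measurement. Whether the decay is close enough to linear for this to hold would have to be checked for a given assay.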

So for now, the mass concentration of fine particles remains the preferred method. Nevertheless, a growing number of studies are being carried out with the aim of taking account of chemical composition and health aspects. This is reflected in the many disciplines involved in this research. “Toxicological issues bring together a wide variety of fields such as chemistry, physics, biology, medicine, bioinformatics and risk analysis, to name just a few,” says Esperanza Perdrix, who also cites communities other than those with scientific expertise. “This topic extends beyond our disciplinary fields and must also involve environmental groups, citizens, elected officials and others,” she adds.

Research is ongoing at the international level as well, in particular through MISTRALS, a large-scale meta-program led by CNRS, launched in 2010 for a ten-year period. One of its programs, called ChArMEx, aims to study pollution phenomena in the Mediterranean basin. “Through this program, we’re developing international collaboration to improve methods for measuring oxidizing potential,” explains Laurent Alleman. “We plan to develop an automated tool for measuring oxidizing potential over the next few years, by working together with a number of other countries, especially those in the Mediterranean region such as Crete, Lebanon, Egypt, Turkey etc.”


A MOOC to learn all about air pollution

On October 8, IMT launched a MOOC dedicated to air quality, drawing on the expertise of IMT Lille Douai. It presents the main air pollutants and their origin, whether man-made or natural. The MOOC will also provide an overview of the health-related, environmental and economic impacts of air pollution.
