XENON1T observes one of the rarest events in the universe

The researchers working on the XENON1T project have observed a strange phenomenon: the simultaneous capture of two electrons by the nucleus of a xenon atom. The phenomenon is so rare that it earned the scientific collaboration, which includes the Subatech[1] laboratory, the cover of the prestigious journal Nature on 25 April 2019. It is the rarest process ever measured directly, with the longest half-life ever observed. Although the research team considers this observation, a world first, to be a success, it was not their primary aim. Dominique Thers, researcher at IMT Atlantique and head of the French part of the XENON1T team, explains.

What is the simultaneous capture of two electrons by an atomic nucleus?

Dominique Thers: In an atom, an electron [a negative charge] orbiting the nucleus can be captured by it. Inside the nucleus, a proton [a positive charge] then becomes a neutron [which has no charge]. This process is well known and has already been observed. However, for certain isotopes it is energetically forbidden. This is the case for xenon 124, whose nucleus cannot capture a single electron. What remains possible for this isotope is the capture of two electrons at the same time, which turns two protons into two neutrons. Xenon 124 therefore becomes tellurium 124, a different element. It’s this simultaneous double capture, never seen before, that we observed.

XENON1T was initially designed to search for WIMPs, the hypothetical particles thought to make up the mysterious dark matter of the universe. How did you go from this objective to observing an atomic phenomenon?

DT: To observe WIMPs, our strategy relies on exposing two tons of liquid xenon. This xenon contains several isotopes, and about 0.1% of its atoms are xenon 124. That may seem small, but in two tons of liquid it represents a very large number of atoms. So there is a chance that this simultaneous double capture will occur. When it does, the electron cloud around the nucleus reorganizes itself and emits X-rays along with a specific kind of electrons known as Auger electrons. Both interact with the xenon and produce light, just as WIMPs from dark matter are expected to when they interact with xenon. The instrument designed to detect WIMPs can therefore also observe this simultaneous double capture. It is the energy signature of the event that tells us about its nature. In this case, the energy released was approximately twice the binding energy of an innermost (K-shell) electron, which is characteristic of a double capture.
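A back-of-the-envelope sketch of why such a rare decay becomes observable in a large target: it converts a mass of xenon into a number of xenon 124 atoms, then into an expected decay rate. The Avogadro constant and the molar mass of natural xenon (131.293 g/mol) are standard values; the 0.095% abundance is the commonly tabulated natural value for xenon 124 and is an assumption here; the half-life is the 1.8 × 10²² years measured by XENON1T. The function names are hypothetical, and detector efficiency and fiducial-volume cuts are ignored.

```python
import math

AVOGADRO = 6.02214076e23       # atoms per mole (exact SI value)
XENON_MOLAR_MASS_G = 131.293   # g/mol, standard atomic weight of natural xenon


def xe124_atoms(xenon_mass_kg: float, xe124_fraction: float) -> float:
    """Number of xenon 124 atoms in a given mass of natural xenon.

    xe124_fraction is the isotopic abundance as an atom (number) fraction,
    e.g. 0.00095 for 0.095%.
    """
    moles = xenon_mass_kg * 1000.0 / XENON_MOLAR_MASS_G
    return moles * AVOGADRO * xe124_fraction


def expected_decays_per_year(n_atoms: float, half_life_years: float) -> float:
    """Expected number of decays per year.

    When the half-life is vastly longer than the observation time, the number
    of atoms is effectively constant, so the rate is N * ln(2) / T_half.
    """
    return n_atoms * math.log(2) / half_life_years


if __name__ == "__main__":
    # Assumptions: 2 t of xenon (as in the interview), 0.095% xenon 124
    # (tabulated natural abundance), half-life of 1.8e22 years (XENON1T result).
    n = xe124_atoms(2000.0, 0.00095)
    rate = expected_decays_per_year(n, 1.8e22)
    print(f"xenon 124 atoms: {n:.2e}")
    print(f"expected double captures per year: {rate:.0f}")
```

With these inputs the sketch gives about 8.7 × 10²⁴ xenon 124 atoms and a few hundred expected double captures per year in the full two tons; a real analysis would also apply fiducial-volume cuts and detection efficiencies.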


Were you expecting to observe these events?

DT: We did not build XENON1T to observe these events at all. However, we take a cross-disciplinary research approach: we knew there was a chance that the double capture would occur, and that we might be able to detect it if it did. We also knew that the community studying the stability of atomic nuclei hoped to observe such an event to consolidate its theories. Several other experiments around the world are working on this. What’s funny is that one of them, XMASS, located in Japan, had published a lower limit on the half-life that was longer than the value we measured. In other words, according to their earlier results on double electron capture, we weren’t supposed to observe the phenomenon with the parameters of our experiment. In reality, after re-evaluation, they were just unlucky, and could have observed it before we did with similar parameters.

One of the main characteristics of this observation, which makes it especially important, is its half-life. Why is that?

DT: The measured half-life is 1.8 × 10²² years, which is about 1,000 billion times the age of the universe. Put simply, in a sample of xenon 124, it takes about 18,000 billion billion years for half of the atoms to decay this way. So it’s an extremely rare process. It’s the longest half-life ever observed directly; longer half-lives have only been inferred indirectly. What’s important to understand, behind all these figures, is that successfully observing such a rare event means that we understand the matter around us very well. We wouldn’t have been able to detect this double capture if we hadn’t understood our environment with such precision.
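The comparison with the age of the universe is simple arithmetic. The sketch below assumes an age of about 13.8 billion years (a value not given in the interview) and computes both the ratio and the tiny fraction of xenon 124 nuclei that would have decayed over that entire time. It uses the exponential decay law, with `math.expm1` to keep precision when t/T is extremely small; the function and constant names are illustrative.

```python
import math

HALF_LIFE_YEARS = 1.8e22         # measured half-life quoted in the interview
AGE_OF_UNIVERSE_YEARS = 1.38e10  # assumption: roughly 13.8 billion years


def decayed_fraction(t_years: float, half_life_years: float) -> float:
    """Fraction of nuclei that have decayed after t_years.

    Exponential decay gives 1 - 2**(-t/T) = -expm1(-ln(2) * t / T).
    expm1 avoids the precision loss of computing 1 - (a number very close to 1).
    """
    return -math.expm1(-math.log(2) * t_years / half_life_years)


ratio = HALF_LIFE_YEARS / AGE_OF_UNIVERSE_YEARS
since_big_bang = decayed_fraction(AGE_OF_UNIVERSE_YEARS, HALF_LIFE_YEARS)
print(f"half-life / age of universe: {ratio:.1e}")
print(f"fraction decayed since the Big Bang: {since_big_bang:.1e}")
```

The ratio comes out at about 1.3 × 10¹², consistent with the "1,000 billion times" quoted above, and only about 5 × 10⁻¹³ of the nuclei would have decayed since the Big Bang.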

Beyond this discovery, how does this contribute to your search for dark matter?

DT: The key added value of this result is that it reassures us about the analysis we’re carrying out. It’s challenging to search for dark matter without ever getting a positive result. We’re often confronted with doubt, so seeing a positive signal with a specific signature that had never been observed until now is reassuring. It proves that we’re breaking new ground and encourages us to stay motivated. It also demonstrates the usefulness of our instrument and attests to the quality and precision of our calibration campaigns.


What’s next for XENON1T?

DT: We still have an observation campaign to analyze, since our approach is to let the experiment run for several months without human intervention, then to unveil and analyze the measurements to look for results. We improved the experiment at the beginning of 2019 to further increase the sensitivity of our detector. XENON1T initially contained a ton of xenon, which explains its name. It now holds more than double that amount, and once the upgrade is complete the detector, renamed XENONnT, will contain 8 tons of xenon. This will allow us to lower our WIMP detection limit by a factor of ten, in the hope of finally detecting these dark matter particles.

[1] The Subatech laboratory is a joint research unit of IMT Atlantique, CNRS and the University of Nantes.

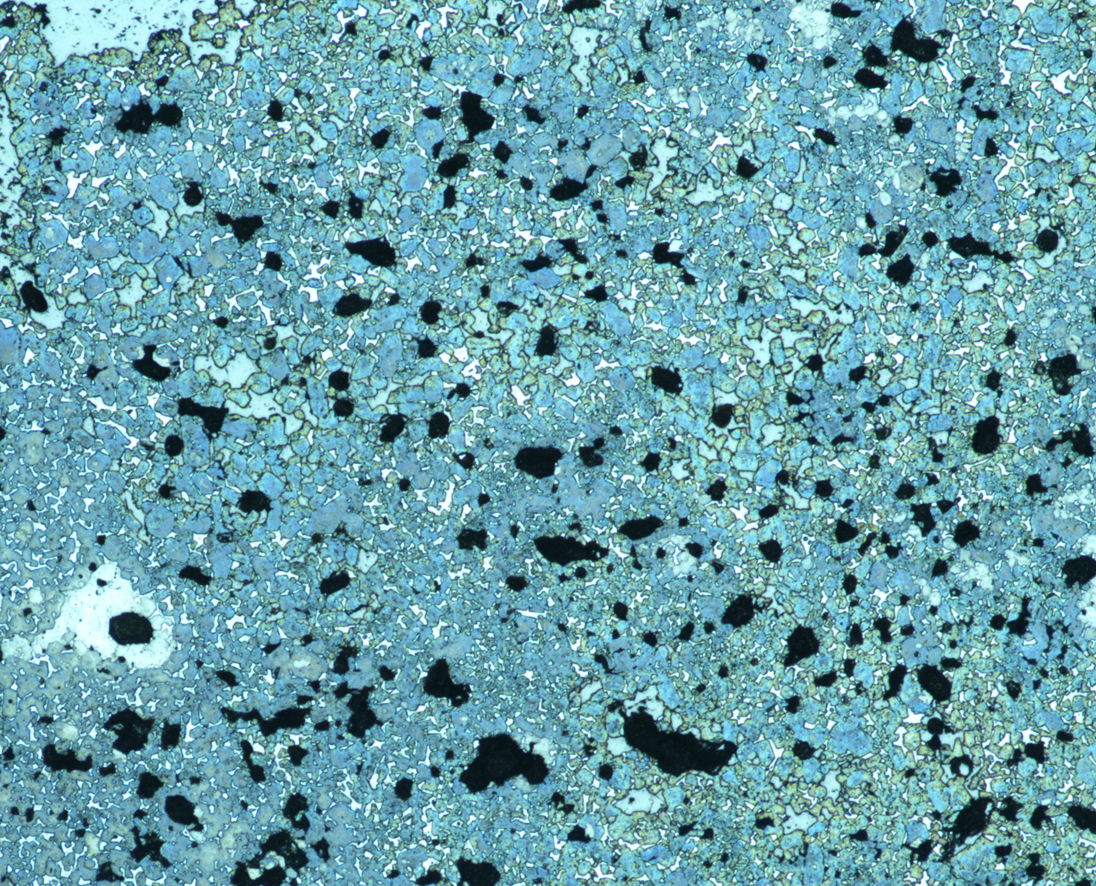

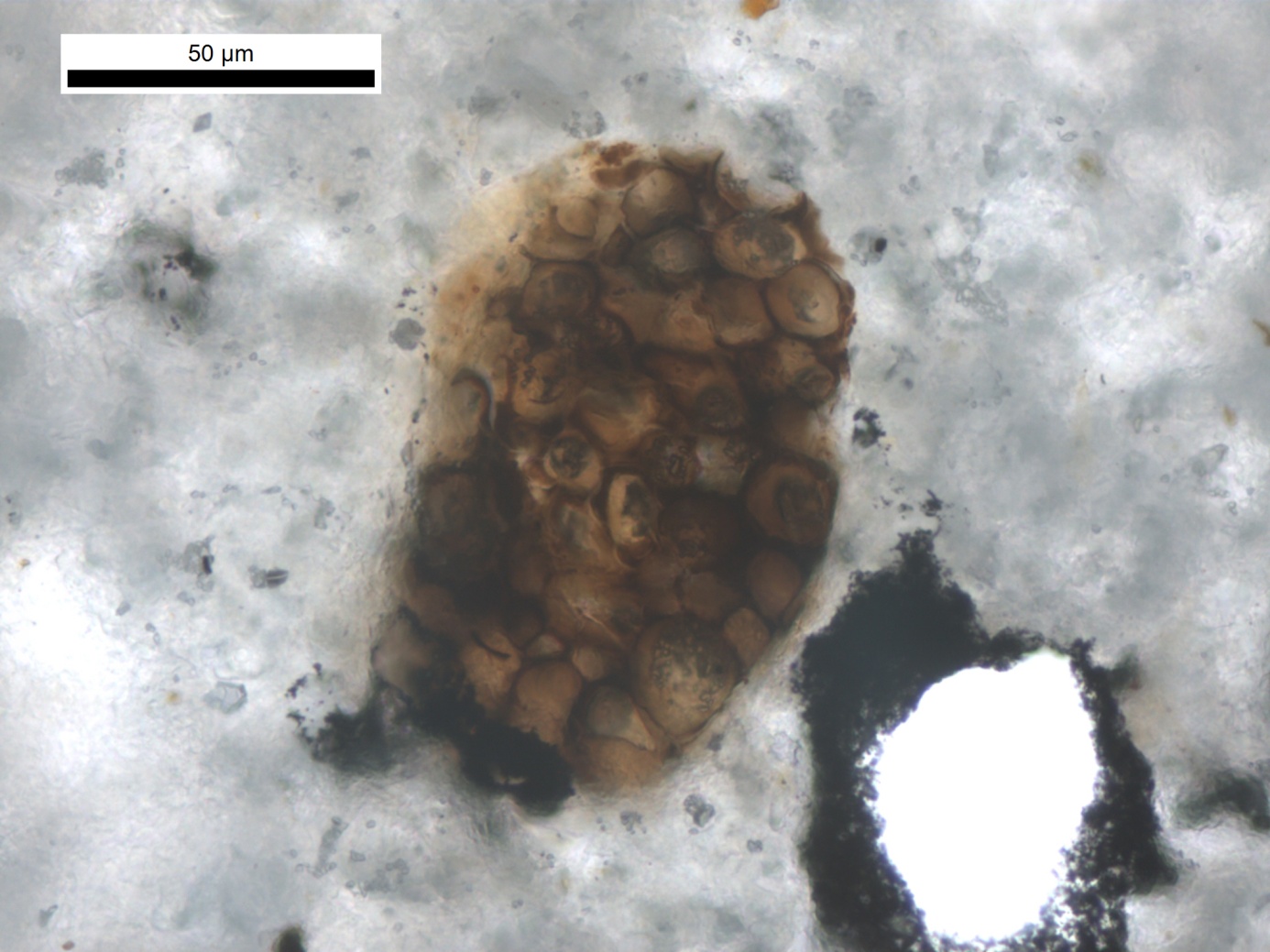

Vincent Thiéry is geologist, currently associate professor (qualified to conduct research) at IMT Nord Europe. His research interests deal with microstructural investigations of geomaterials based on optical microscopy, scanning electron microscopy, confocal laser scanning microscopy… Based on those tools, his research thematics cover a wide spectrum ranging from old cements (framework from the CASSIS project) to more fundamental geological interests (first finding of microdiamonds in metropolitan France) as well as industrial applications as polluted soil remediation using hydraulic binders. In his field of expertise, he insists on the need to break the boundaries between fundamental and engineering sciences in order to enrich each other.
