Ethical algorithms in health: a technological and societal challenge

The possibilities offered by algorithms and artificial intelligence in the healthcare field raise many questions. What risks do they pose? How can we ensure that they have a positive impact on the patient as an individual? What safeguards can be put in place to ensure that the values of our healthcare system are respected?

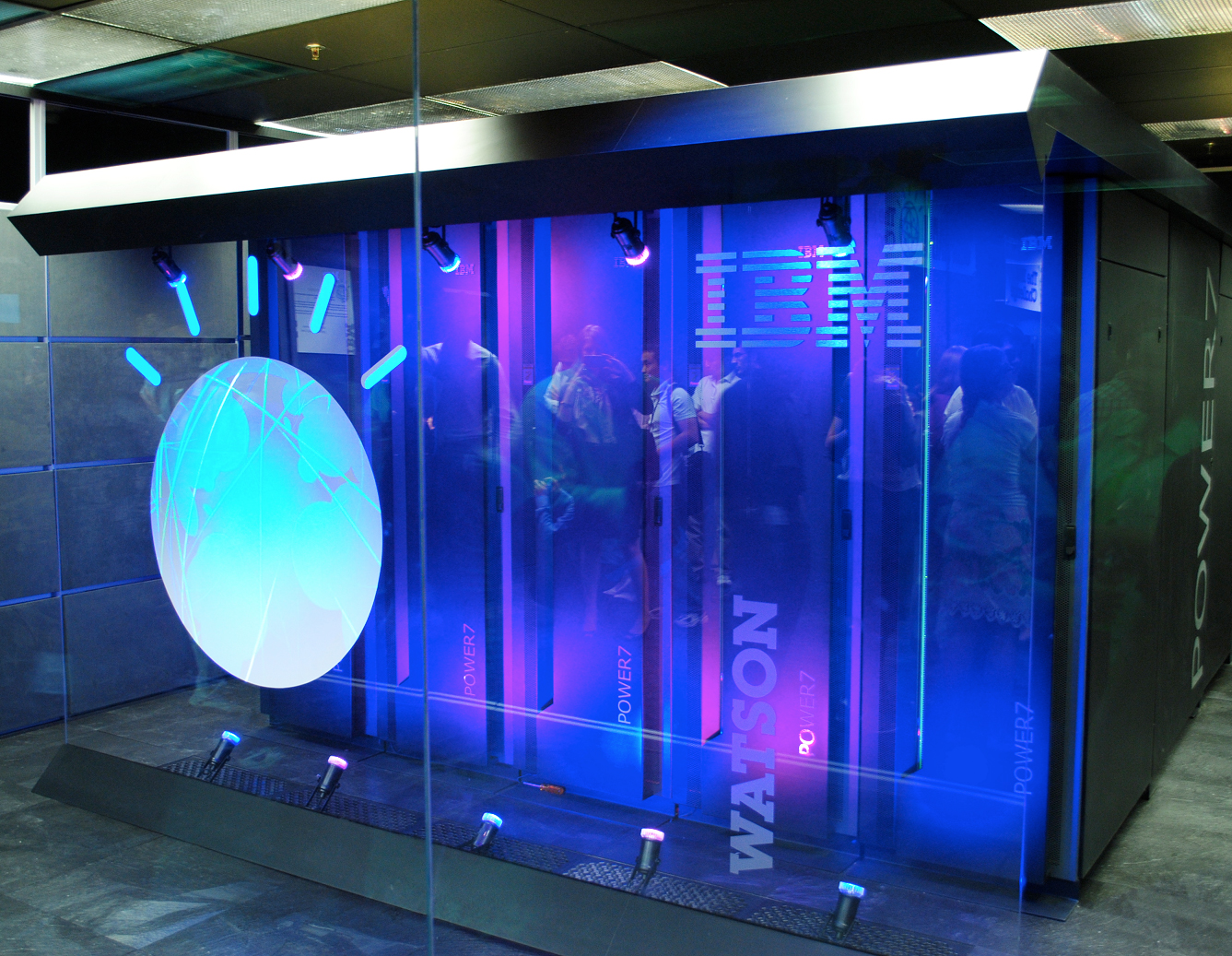

A few years ago, Watson, IBM’s supercomputer, turned to the health sector and particularly oncology. It has paved the way for hundreds of digital solutions, ranging from algorithms for analyzing radiology images to more complex programs designed to help physicians in their treatment decisions. Specialists agree that these tools will spark a revolution in medicine, but there are also some legitimate concerns. The CNIL, in its report on the ethical issues surrounding algorithms and artificial intelligence, stated that they “may cause bias, discrimination, and even forms of exclusion”.

In the field of bioethics, four basic principles were set out in 1978: justice, autonomy, beneficence and non-maleficence. These principles guide research on the ethical questions raised by new applications of digital technology. Christine Balagué, holder of the Smart Objects and Social Networks chair at the Institut Mines-Télécom Business School, highlights a pitfall, however: “the issue of ethics is tied to a culture’s values. China and France for example have not made the same choices in terms of individual freedom and privacy”. Regulations on algorithms and artificial intelligence may therefore not be universal.

However, we currently live in a global system where there is no secure barrier to the dissemination of IT programs. The report by the CCNE and CERNA on digital technology and health suggests that the legislation imposed in France should not be so stringent as to restrict French research. Overly strict rules would risk pushing businesses in the healthcare sector towards digital solutions developed in other countries, with even less strictly controlled safety and ethics criteria.

Bias, value judgments and discrimination

While some see algorithms as flawless, objective tools, Christine Balagué, who is also a member of CERNA and the DATAIA Institute, highlights their weaknesses: “the relevance of the results of an algorithm depends on the information it receives in its learning process, the way it works, and the settings used”. Bias may be introduced at any of these stages.

Firstly, in the training data: there may be an issue of representation, as in pharmacological studies, which are usually carried out on 20- to 40-year-old Caucasian men. The results establish the effectiveness and tolerance of the medicine for this population, but are not necessarily applicable to women, the elderly, etc. There may also be an issue of data quality: precision and reliability are not necessarily consistent from one source to another.

Data processing, the “code”, also contains elements which are not neutral and may reproduce value judgments or discriminations made by their designers. “The developers do not necessarily have bad intentions, but they receive no training in these matters, and do not think of the implications of some of the choices they make in writing programs” explains Grazia Cecere, economics researcher at the Institut Mines-Télécom Business School.

In the field of medical imaging, for example, delineating an area may be a subject of contention: a medical expert will tend to classify uncertain images as “positive” so as to avoid missing a potential anomaly which could be cancer, which increases the number of false positives. A researcher, by contrast, will tend to maximize the measured relevance of their tool, at the cost of more false negatives. The two do not have the same objectives, and the way data are processed will reflect this value judgment.
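The trade-off described above can be illustrated with a minimal sketch. The scores, labels and thresholds below are hypothetical values chosen for illustration, not data from any real imaging tool; the point is only that the same model output yields different errors depending on where the decision threshold is set.

```python
from typing import List, Tuple


def error_counts(scores: List[float], labels: List[int], threshold: float) -> Tuple[int, int]:
    """Return (false_positives, false_negatives).

    An image is flagged "positive" when its score is >= threshold.
    labels: 1 = anomaly actually present, 0 = no anomaly.
    """
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    return fp, fn


# Hypothetical model scores and ground truth, for illustration only.
scores = [0.10, 0.35, 0.40, 0.55, 0.60, 0.80, 0.90]
labels = [0, 0, 1, 0, 1, 1, 1]

# A low threshold flags uncertain images as positive (the cautious clinician).
cautious = error_counts(scores, labels, threshold=0.3)

# A high threshold flags only confident cases (fewer false alarms, more misses).
strict = error_counts(scores, labels, threshold=0.7)

print("cautious (FP, FN):", cautious)  # (2, 0)
print("strict   (FP, FN):", strict)    # (0, 2)
```

Neither threshold is "neutral": choosing one amounts to deciding which kind of error matters more, which is the value judgment the paragraph above refers to.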

Security, loyalty and opacity

The security of medical databases is a hotly debated subject, with the risk that algorithms may re-identify anonymized data, which could then be used for malevolent or discriminatory purposes (by employers, insurance companies, etc.). But the security of health data also relies on individual awareness. “People do not necessarily realize that they are revealing critical information in their publications on social networks, or in their Google searches on an illness, a weight problem, etc.”, says Grazia Cecere.

Applications labeled for “health” purposes are often intrusive and gather data which could be sold on to potentially malevolent third parties. But the data collected will also be used for categorization by Google or Facebook algorithms. Indeed, the main purpose of these companies is not to provide objective, representative information, but rather to make a profit. In order to keep their audience, they need to show that audience what it wants to see.

The issue here is in the fairness of algorithms, as called for in France in 2016 in the law for a digital republic. “A number of studies have shown that there is discrimination in the type of results or content presented by algorithms, which effectively restricts issues to a particular social circle or a way of thinking. Anti-vaccination supporters, for example, will see a lot more publications in their favor” explains Grazia Cecere. These mechanisms are problematic, as they get in the way of public health and prevention messages, and the most at-risk populations are the most likely to miss out.

The opaque nature of deep learning algorithms is also an issue for debate and regulation. “Researchers have created a model for the spread of a virus such as Ebola in Africa. It appears to be effective. But does this mean that we can deactivate WHO surveillance networks, made up of local health professionals and epidemiologists who come at a significant cost, when no-one is able to explain the predictions of the model?” asks Christine Balagué.

Researchers from both hard sciences and social sciences and humanities are looking at how to make these technologies responsible. The goal is to be able to directly incorporate a program which will check that the algorithm is not corrupt and will respect the principles of bioethics. A sort of “responsible by design” technology, inspired by Asimov’s three laws of robotics.

Article initially written in French by Sarah Balfagon, for I’MTech.