Aerosol therapy: An ex vivo model of lungs

Jérémie Pourchez, a researcher in Health Engineering at Mines Saint-Étienne, and his colleagues at the Saint-Étienne University Hospital have developed an ex vivo model of lungs to help improve medical aerosol therapy devices. One advantage of this technology is that it lets scientists study inhalation therapy whilst limiting the amount of animal testing involved.

This article is part of our dossier “When engineering helps improve healthcare”.

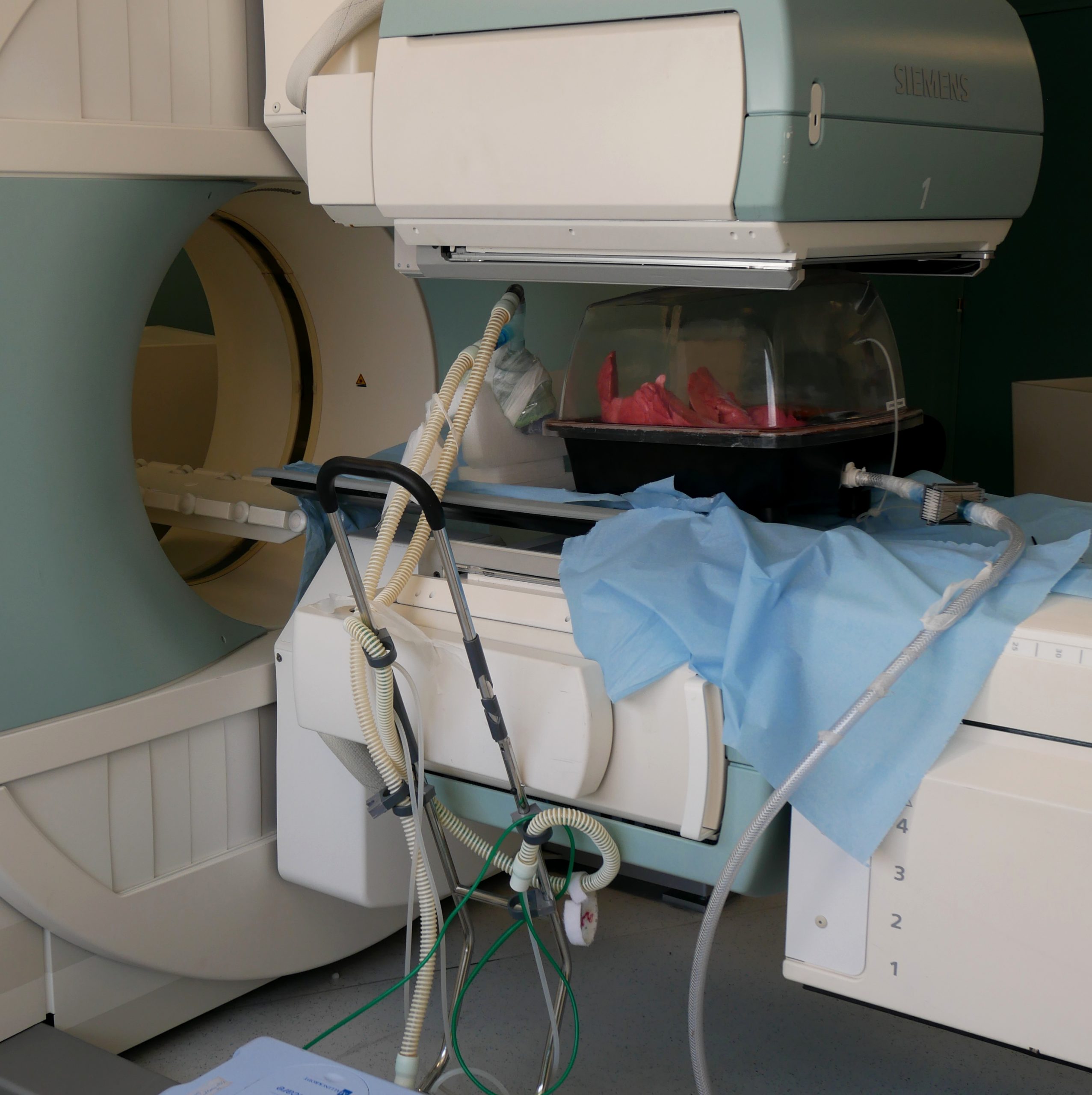

Imagine a laboratory where, sitting on top of the workbench, is a model of a human head made using 3D printing. This anatomically correct replica is connected to a pipe which mimics the trachea, and this pipe in turn leads to a vacuum chamber. Inside this chamber is a pair of lungs. Periodically, a pump simulates the natural movement of the rib cage to induce breathing, and the lungs inflate and deflate. This physical model of the respiratory system might sound like science fiction, but it is actually located in the Health Engineering Center at Mines Saint-Étienne.

Developed as part of the ANR AMADEUS project by a team led by Jérémie Pourchez, a specialist in inhaled particles and aerosol therapy, this ex vivo model simulates breathing and the illnesses associated with it, such as asthma or fibrosis. For the past two years, the team have been working on developing and validating the model, and have already created both adult-sized and child-sized versions of the device. By offering a less expensive alternative to animal testing, the device has allowed the team to study drug delivery using aerosol therapy. The ex vivo pulmonary model is also far more ethical, as it only uses organs which would otherwise be thrown away by slaughterhouses.

Does this ex vivo model work the same as real lungs?

One of the main objectives for the researchers is to prove that these ex vivo models can accurately predict what happens inside the human body. To do this, they must demonstrate three things.

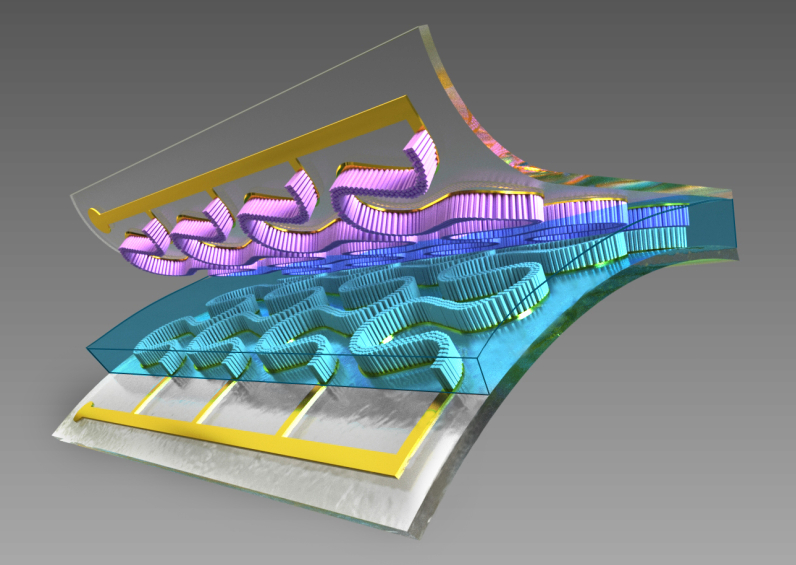

First, the researchers need to study the respiratory physiology of the models. By using the same indicators that are used on real patients, such as those used for measuring the respiratory parameters of an asthmatic, the team demonstrate that the model’s parameters are the same as those for a human being. Second, the team must analyze the model’s ventilation; for example, by making sure that there are no obstacles in the bronchi. To do this, the ex vivo lungs inhale radioactive krypton gas, which serves as a tracer to visualize the airflow throughout the model. Finally, the team study aerosol deposition in the respiratory tract, which involves observing where inhaled particles settle when using a nebulizer or spray. Again, this is done by using radioactive materials and nuclear medical imaging.

These results are then compared to the results you would expect to see in humans, as reported in the scientific literature. If the parameters match, then the model is validated. However, the pig lungs used in the model behave like the lungs of a healthy individual. This poses a problem for the team, as the aim of their research is to develop a model that can mimic illnesses so they can test the effectiveness of aerosol therapy treatments.
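The comparison step described above, in which measured parameters are checked against values from the literature, can be pictured with a minimal sketch. Everything in it is an assumption made for illustration: the parameter names, the numerical ranges and the `validate_model` helper are hypothetical placeholders, not the team's actual indicators, data or method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReferenceRange:
    """Interval of values considered consistent with the literature (hypothetical)."""
    low: float
    high: float

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


def validate_model(measured, reference):
    """Return (is_valid, out_of_range).

    A measured parameter counts as out of range if it has no reference
    entry or if its value lies outside the reference interval.
    """
    out_of_range = {
        name: value
        for name, value in measured.items()
        if name not in reference or not reference[name].contains(value)
    }
    return (not out_of_range, out_of_range)


# Placeholder names and numbers, chosen only to exercise the check.
reference = {
    "param_a": ReferenceRange(0.0, 1.0),
    "param_b": ReferenceRange(10.0, 20.0),
}
measured_ok = {"param_a": 0.4, "param_b": 15.0}
measured_bad = {"param_a": 0.4, "param_b": 25.0}

print(validate_model(measured_ok, reference))
print(validate_model(measured_bad, reference))
```

In this sketch a model is only considered validated when every measured parameter falls inside its reference interval, which mirrors the all-or-nothing criterion the article describes.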

From a healthy model to an ill one

There are various pathologies that can affect someone’s breathing, and air pollution tends to increase the likelihood of developing one of them. For example, fibrosis damages the elastic fibers that help our lungs to expand when we breathe in. This makes the lungs more rigid and breathing more difficult. In order to mimic this in the ex vivo lungs, the organs are heat-treated with steam to stiffen the surface of the tissue. This changes their elasticity and recreates the mechanical behavior of human lungs with fibrosis.

Other illnesses, such as cystic fibrosis, are characterized, amongst other things, by the lungs secreting substances that make it difficult for air to travel through the bronchi. To recreate this, the researchers insert artificial secretions made from thickening agents, which allows the model lungs to mimic these breathing difficulties.

Future versions of the model

Imitating these illnesses is an important first step. But in order to study how aerosol therapy treatments work, the researchers also need to observe how they diffuse into the bloodstream. This diffusion can be either an advantage or a disadvantage, since the lungs are also an entry point through which a drug can spread through the body. To study it, the research team installed one last tool: a pump to simulate a heartbeat. “The pump allows fluid to circulate around the model in the same way that blood circulates in lungs,” explains Jérémie Pourchez. “During a test, we can then measure the amount of inhaled drug that will diffuse into the bloodstream. We are currently validating this improved model.”

One challenge that the team is now facing is the development of new systemic inhalation treatments. These are designed to treat illnesses in other organs, but are inhaled and use the lungs as an entry point into the body. “A few years ago, an insulin spray was put on the market,” says Jérémie Pourchez. Insulin, which is used to treat diabetes, needs to be injected regularly. “This would be a relief for patients suffering from the disease, as it would replace these injections with inhalations. But the drug also requires an extremely precise dose of the active ingredient, and obtaining this dose of insulin using an aerosol remains a scientific and technical challenge.”

As well as being easier to use, an advantage of inhaling a treatment is how quickly the active ingredient enters the bloodstream. “That’s why people who are trying to quit smoking find that electronic cigarettes work better than patches at satisfying nicotine cravings,” says the researcher. The dose of nicotine inhaled is deposited in the lungs and diffuses directly into the blood. “It also led me to study electronic cigarette-type devices and evaluate whether they can be used to deliver different drugs by aerosol,” explains Jérémie Pourchez.

By modifying certain technical aspects, these devices could become aerosol therapy tools, and be used in the future to treat certain lung diseases. This is one of the ongoing projects of the Saint-Étienne team. However, there is still substantial research that needs to be done into the device’s potential toxicity and its effectiveness depending on the inhaled drugs being tested. Rather than just being used as a tool for quitting smoking, the electronic cigarette could one day become the newest technology in medical aerosol treatment.

Finally, this model will also provide an answer to another important issue surrounding lung transplants. When an organ is donated, it is up to the biomedicine agency to decide whether this donation can be given to the transplant teams. But this urgent decision may be based on factors that are sometimes not sufficient to assess the real quality of the organ. “For example, to assess the quality of a donor’s lung,” says Jérémie Pourchez, “we refer to important data such as smoking, age, or the donor’s known illnesses. Therefore, our experimental device, which makes lungs breathe ex vivo, can be used as a tool to more accurately assess the quality of the lungs when they are received by the transplant team. Then, based on the tests performed on the organ that is going to be transplanted, we can determine whether it is safe to perform the operation.”