Medicine for Materials

Did you know that materials have health problems too? To diagnose their structural integrity, researchers are increasingly using techniques similar to those used in human medicine. X-rays, temperature checks and ultrasound imaging are just a few of the tools that help detect abnormalities in parts. The advantage of these techniques is that they are non-destructive. When used together, they can provide a great deal of information on a mechanical system without taking it out of service. Salim Chaki is one of the French pioneers in this area. The researcher at IMT Lille Douai explains why manufacturers are keeping a close watch on the latest advances in this field.

What is the principle behind a non-destructive inspection?

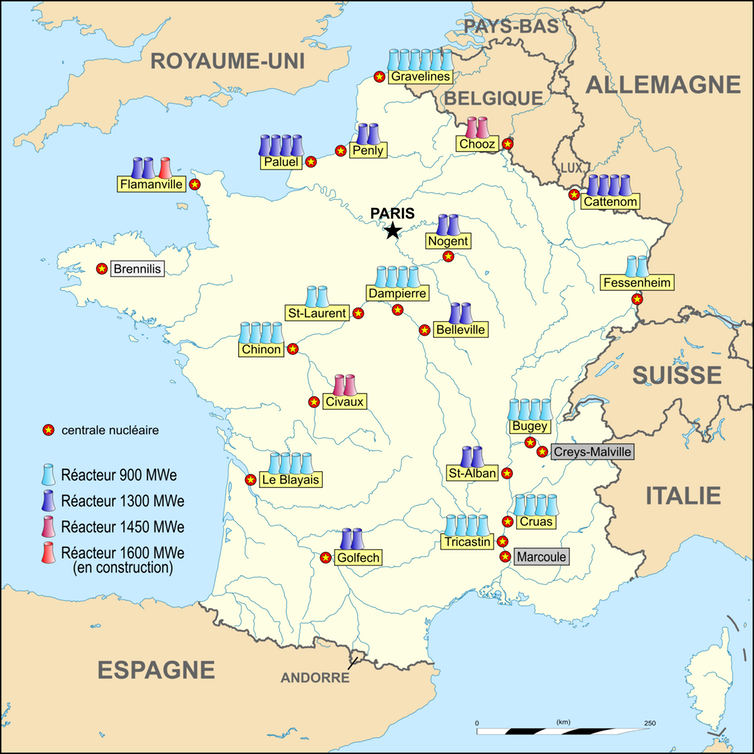

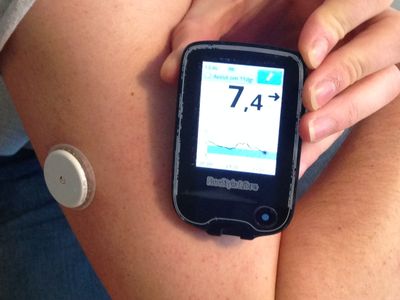

Salim Chaki: It is a set of techniques that can provide information about a part’s state of health without modifying it. Before these techniques were developed, the traditional approach involved cutting up a defective part and inspecting it to identify the defect. With the non-destructive method, the philosophy is the same as that of human medicine: we use x-rays and ultrasound, for example, to study what is inside the part, or infrared thermography to measure its surface temperature and detect abnormalities. The development of nuclear energy during the post-war period demanded this type of technique, since radioactivity introduced new constraints on handling radioactive objects.

Your research involves a non-destructive “multi-technical” approach. What is the advantage of this approach?

SC: Historically, engineers would choose to use x-rays, ultrasound or other techniques based on their needs. For several decades, manufacturers did not really consider using several techniques simultaneously, whereas in the medical world a more global approach was already being used, including a clinical examination, blood test, x-rays and possibly further tests to diagnose a patient’s illness. In 2006, we became pioneers by proposing a combination of several techniques to diagnose the structural integrity of composite parts during operation. At that point, manufacturers became very interested, convinced by the high potential of the approach. The possibility of diagnosing a defect without modifying the part and even without taking it out of service represents a major economic advantage. We demonstrated the benefit of the non-destructive multi-technical approach by using infrared cameras, optical cameras for measuring the deformation fields and passive acoustic sensors attached to the structure. These sensors pick up the sound of the vibrations emitted by the part when it cracks. Combining several non-destructive techniques therefore makes it possible to confirm the diagnosis of a part’s condition; it complements the information and improves its reliability.

Salim Chaki was one of the pioneers in this field when he began working on non-destructive multi-technical inspection in 2006.

Is it really that difficult for manufacturers who have not yet done this to combine two or more techniques?

SC: Yes, actually implementing several techniques is not necessarily straightforward. There are real-time technical problems related to synchronization: the data collected by one sensor must be correlated both spatially and temporally with the data from the others. This requires all of the sensors to be perfectly synchronized during the measurements. There is also a major “data processing” aspect. For example, infrared cameras record very large volumes of imaging data, so manufacturers must manage how these data are stored and processed. Finally, the interpretation process requires multiple skills, since the data originate from different sensors related to different fields: optics, acoustics and thermal science. However, we are currently working on data processing algorithms that would facilitate the use and interpretation of data in industrial settings.
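To make the temporal side of the synchronization problem concrete, here is a minimal sketch in Python. It is not the method used by Salim Chaki's team; it only illustrates one common way to pair samples from two sensors that record at different rates. The function name `align_nearest`, the sample values and the 0.05 s tolerance are all hypothetical, and the sketch assumes both sensors already share a common clock.

```python
from bisect import bisect_left


def align_nearest(ref_times, other_times, other_values, tolerance):
    """For each reference timestamp, return the value of the nearest sample
    in the other stream, or None if no sample lies within `tolerance` seconds.

    Assumption: both time lists are sorted in ascending order and are
    expressed on the same clock.
    """
    aligned = []
    for t in ref_times:
        i = bisect_left(other_times, t)
        # Only the sample just before and the sample just after t can be nearest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other_times)]
        best = min(candidates, key=lambda j: abs(other_times[j] - t), default=None)
        if best is not None and abs(other_times[best] - t) <= tolerance:
            aligned.append(other_values[best])
        else:
            aligned.append(None)
    return aligned


# Hypothetical data: infrared frames every 0.5 s, acoustic events at irregular times.
ir_frame_times = [0.0, 0.5, 1.0, 1.5]
acoustic_times = [0.02, 0.48, 1.30]
acoustic_amplitudes = [0.1, 0.7, 0.4]

matched = align_nearest(ir_frame_times, acoustic_times, acoustic_amplitudes, tolerance=0.05)
print(matched)  # [0.1, 0.7, None, None]
```

In this toy example, the first two infrared frames each find an acoustic event within the tolerance, while the last two do not. Spatial correlation, which the interview also mentions, would need a separate calibration step that this sketch does not cover.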

What are the concrete applications of non-destructive multi-technical inspections?

SC: One of the most interesting applications involves pressure vessels, typically gas storage tanks. Regulations require that they be inspected periodically to assess their condition and determine whether they should remain in use. The non-destructive multi-technical approach not only allows this inspection to occur without emptying the tank and taking it out of service each time, it could also be used to forecast the device’s remaining useful life. This is currently one of the major issues in our research. However, the multi-technical approach is still fairly recent, and therefore not many industrial applications exist. Nevertheless, we believe that the future will be more conducive to multi-technical processes, which will make this inspection more reliable, an aspect that is repeatedly requested by industrial equipment and plant operators, as well as by the administrative authorities responsible for their safety.

What are your lines of research now that manufacturers have begun adopting these techniques?

SC: First of all, it is important to pursue our efforts in convincing manufacturers of the advantages of multi-technical inspections, particularly the increased reliability of the inspection. There is no universal technique that offers a comprehensive diagnosis of a part’s condition. This introduces another interesting parallel with human medicine: it would be unrealistic to think a single test could detect everything. Also, as I said earlier, we are trying to go beyond the diagnosis by proposing an estimated remaining useful life for a part based on non-destructive measurements carried out while the part is in service. Very soon we will extend this concept to the inspection of parts’ initial health condition. The goal is to quickly predict if a part is healthy or not, starting at the production phase, and determine the duration of its service life. This is known as predictive maintenance.

Is the analysis of the data collected from all of the combined techniques also a research issue?

SC: Yes, of course! Since IMT Lille Douai was founded in 2017, as a result of the merger between Télécom Lille and Mines Douai, new perspectives have opened up through the synergy between our expertise in non-destructive testing of materials and our computer science colleagues’ specialization in data processing. The contribution of artificial intelligence algorithms and big data to processing large volumes of data is crucial in anticipating anomalies for predictive maintenance. If we could streamline the prognosis using these digital tools, it would be a major advantage for industrial applications.