The EU's new General Data Protection Regulation (GDPR) comes into effect on May 25, 2018. Of the 99 articles in the regulation, two are devoted specifically to certification. While seals demonstrating compliance with the regulation seem like a good way to reassure citizens and economic stakeholders, a number of obstacles stand in the way.

Certification marks are ubiquitous today and are used for all types of products and services. As consumers, we have become accustomed to seeing them everywhere, from the organic farming label on supermarket products to energy labels on household appliances. They can either signal compliance with legislation, as is the case for CE marking, or serve as a mark of credibility that a company displays to highlight its good practices. While the sheer number of seals and marks can be hard to make sense of, some of them carry real value. AOC (appellation d'origine contrôlée) labels, for example, are well known and sought after by many consumers. So why not create seals or marks to show that personal data is managed responsibly?

This may seem like an odd question to citizens who see such seals as nothing more than the red labels on free-range chicken packaging, but the European Union has taken it seriously, so much so that Articles 42 and 43 of the GDPR are devoted to this idea. The text encourages the creation of seals and marks so that companies established in the EU that process citizens’ data responsibly can demonstrate their compliance with the regulation. On paper, everything points to the establishment of clear signs of trust in personal data protection.

However, a number of institutional and economic obstacles stand in the way. In fact, the question of seals is so complicated that IMT’s Personal Data Values and Policies Chair* (VPIP) has made it a research topic in its own right, focusing in particular on how the GDPR affects the issue. This research, carried out between the adoption of the European text on April 14, 2016 and the date it takes effect, May 25, 2018, has resulted in a book of more than 230 pages entitled Signes de confiance : l’impact des labels sur la gestion des données personnelles (Signs of Trust: the impact of seals on personal data management).

For Claire Levallois-Barth, a researcher in Law at Télécom ParisTech and coordinator of the publication, the complexity stems in part from the number and heterogeneity of personal data protection marks. In Europe, there are at least 75 different marks, and their geographic distribution is highly uneven. “Germany alone has more than 41 different seals,” says the researcher. “In France, we have nine, four of which are granted by the CNIL (National Commission for Computer Files and Individual Liberties).” Meanwhile, the United Kingdom has only two and Belgium only one. Each country has its own approach, largely for cultural reasons, which makes it difficult to make sense of such a disparate assortment of marks with very different meanings.

Seals for what?

One of the key questions is: what should the seal describe? Services? Products? Processes within companies? “It all depends on the situation and the aim,” says Claire Levallois-Barth. Until recently, the CNIL granted the “digital safe box” seal to certify that a service respected “the confidentiality and integrity of data that is stored there” according to its own criteria. At the same time, the Commission also has a “Training” seal that certifies the quality of training programs on European or national legislation. Though both were awarded by the same organization, they do not mean the same thing. Saying that a company has been granted “a CNIL seal” therefore provides little information. One must delve into the details of these marks to understand what they mean, which runs counter to the very simplification they are supposed to offer.

One possible solution could be to create general seals to encompass services, internal processes and training for all individuals responsible for data processing at an organization. However, this would be difficult from an economic standpoint. For companies it could be expensive — or even very expensive — to have their best practices certified in order to receive a seal. And the more services and teams there are to be certified, the more time and money companies would have to spend to obtain this certification.

On March 31, 2018, the CNIL officially transitioned from a labeling activity to a certification activity.

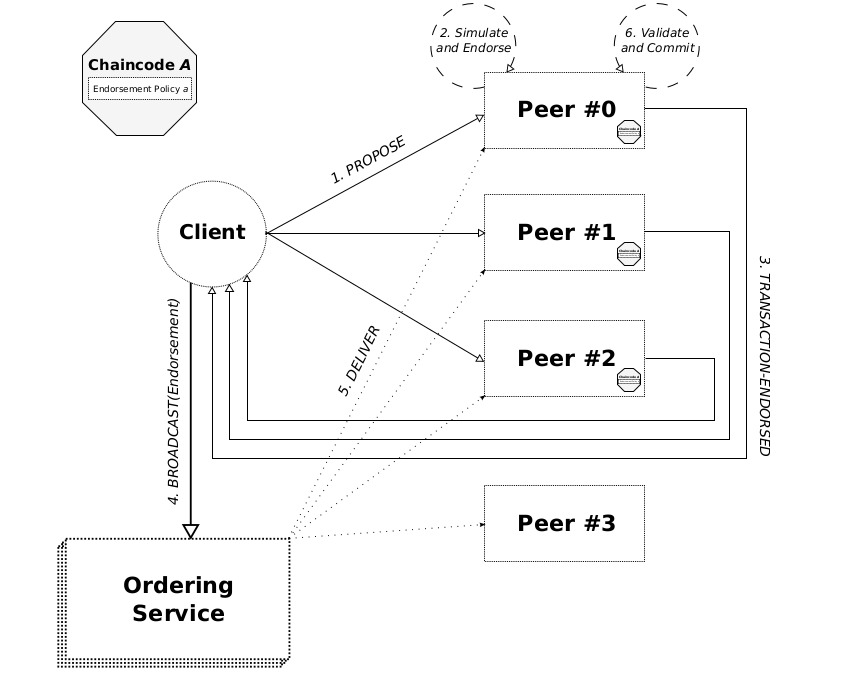

The CNIL has announced that it will stop awarding seals free of charge. “The Commission has decided that once the GDPR comes into effect it will concentrate instead on developing or approving certification standards. The seals themselves will be awarded by accredited certification organizations,” explains Claire Levallois-Barth. Afnor Certification or Bureau Veritas, for example, could offer certifications for which companies would have to pay. These fees would cover the time spent assessing internal processes and services, analyzing files, auditing information systems and so on.

And for all the parties involved, the economic profitability of certification seems to be the crux of the issue. In general, companies do not want to spend tens of thousands, or even hundreds of thousands, of euros on certification just to receive a little-known seal. Certification organizations must therefore find the right formula: comprehensive enough to make the seal valuable, but without representing too much of an investment for most companies.

While a general seal seems unlikely, some stakeholders are examining the possibility of sector-specific seals, for cloud computing for example, based on standards recognized under the GDPR. For this to happen, the criteria would first have to be approved, either at the national level by the competent supervisory authority (the CNIL in France) or at the European Union level by the European Data Protection Board (EDPB). A critical mass of seals would then have to be granted, and here too the GDPR sets out two options.

According to Articles 42 and 43 of the GDPR, certification may be awarded either by each country’s supervisory authority or by private certification organizations. In France, the supervisory authority is the CNIL, and certification organizations include Afnor and Bureau Veritas. These organizations are themselves monitored: they must be accredited either by the supervisory authority or by the national accreditation body, which is COFRAC in France.

This naturally raises a question: if the supervisory authorities develop their own sets of standards, will they not tend to favor accrediting organizations that use these standards? Eric Lachaud, a PhD student in Law and Technology at Tilburg University who took part in the presentation of the Personal Data Values and Policies Chair’s work on March 8, says, “this clearly raises questions about competition between the sets of standards developed by the public and private sectors.” Sophie Nerbonne, Director of Compliance at the CNIL, who was interviewed at the same event, says that the goal of the national commission is “not to foreclose the market but to draw on [its] expertise in very precise areas of certification, by acting as a data protection officer.”

A certain vision of data protection

It should be acknowledged, however, that the area of expertise of a supervisory authority such as the CNIL, a pioneer in personal data protection in Europe, is quite vast. Beyond serving as a data protection officer responsible for ensuring compliance with the GDPR within an organization that has appointed it, the CNIL, as an independent authority, regulates issues involving personal data processing, governance and protection, as the seals it has granted until now show. It is therefore hard to imagine that the supervisory authorities would not capitalize on this broad expertise.

This is all the more true since not all supervisory authorities are as advanced as the CNIL when it comes to personal data certification. “So competition between the supervisory authorities of different countries is an issue,” says Eric Lachaud. Can we hope for a dialogue between the 28 Member States of the European Union to limit this competition? “This leads to the question of having mutual recognition between countries, which has still not been solved,” says the Law PhD student. As Claire Levallois-Barth is quick to point out, “there is a significant risk of ‘a race to the bottom’.” Mutual recognition would nonetheless bring clear benefits. By recognizing each other’s standards, the countries of the European Union could give certification a truly transnational dimension, making seals and marks valuable throughout Europe and turning them into shared benchmarks for the citizens and companies of all 28 countries.

The high stakes of harmonization extend beyond the borders of the European Union. Although CE marking is sometimes criticized for being easy to obtain compared with stricter national standards, it has succeeded in imposing certain European standards around the world: any manufacturer hoping to reach the European Union’s market of 500 million people must comply with it. For Eric Lachaud, this shows what convergence between the European Member States can achieve: “We can hope that Europe will reproduce what it has done with CE marking: that it will strive to make the voices of the 28 states heard around the world and to promote a certain vision of data protection.”

The uncertainties surrounding the market for seals must be set against the aims of the GDPR, whose philosophy is to establish strong, long-term legislation for a changing technological landscape. In a sense, Articles 42 and 43 of the GDPR can be seen as a foundation for initiating and regulating a market for certification, and the questions being raised today represent the first steps toward structuring this market. The first months after the GDPR comes into effect will reveal what the 28 Member States intend to build.

*The Personal Data Values and Policies Chair brings together the Télécom ParisTech and Télécom SudParis graduate schools and Institut Mines-Télécom Business School. It is supported by Fondation Mines-Télécom.

[box type=”info” align=”” class=”” width=””]

Personal data certification seals – what is the point?

For companies, a personal data protection seal can help meet the accountability requirement imposed by Article 24 of the GDPR, which obliges every organization responsible for processing data to be able to demonstrate compliance with the regulation. This requirement also applies to personal data subcontractors (referred to as “processors” in the GDPR).

This is why many experts think that seals will be used primarily in business-to-business rather than business-to-consumer relationships. SMEs could seek certification to meet growing demand from their customers, especially large firms, for compliance in their subcontracting operations.

That said, the GDPR is a European regulation, which means that compliance is assumed: all companies are supposed to abide by it as soon as it comes into effect. A seal that merely certifies compliance therefore cannot be used as a marketing tool. It is likely, however, that the organizations responsible for establishing certification standards will choose to encourage seals that go beyond the requirements of the GDPR. In that case, demonstrating stricter control over personal data processing than the legislation requires could be a valuable way for a company to stand out from its competitors. [/box]

A guarantee of excellence
