AI in healthcare for the benefit of individuals and society?

Article written by Christian Roux (Director of Research and Innovation at IMT), Patrick Duvaut (Director of Innovation at IMT), and Eric Vibert (professor at Université Paris-Sud/Université Paris Saclay, and surgeon at Hôpital Paul Brousse (AP-HP) in Villejuif).


How can artificial intelligence be built so that it is humanistic, explainable and ethical? This question is central to discussions about the future of AI in healthcare. The technology must give humans additional skills without replacing healthcare professionals. Focusing on the concept of “sustainable digital technology,” this article presents the key ideas the authors set out in a long-form report published in French in the Télécom Alumni journal.

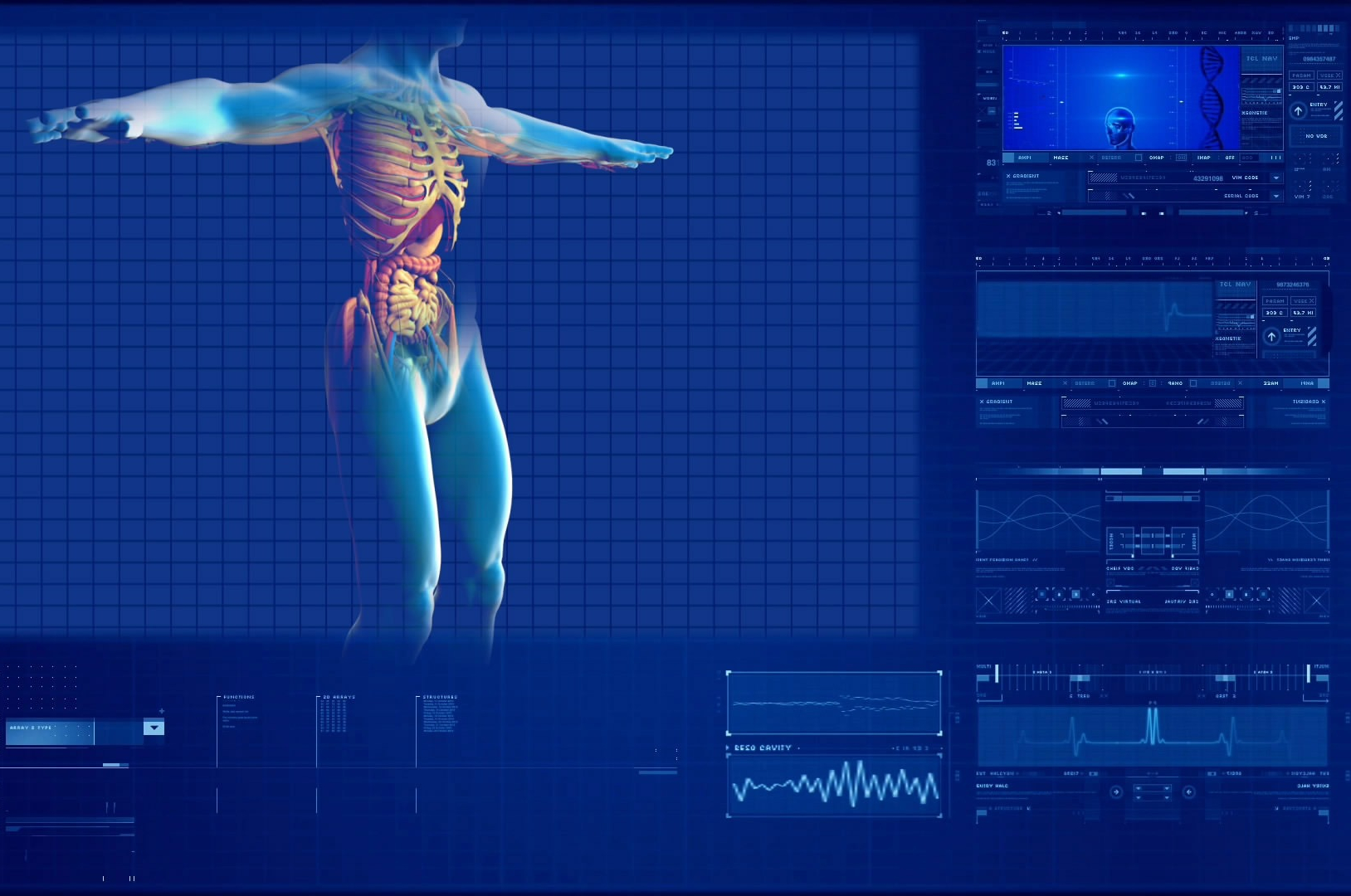

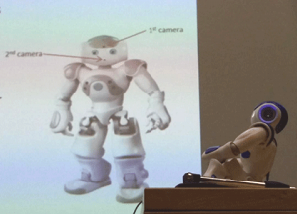

Artificial intelligence (AI) is still in its early stages. It performs single tasks, requires huge numbers of examples, and lacks a conscience, empathy and common sense. In the medical field, the relationship between the patient and the augmented caregiver, or virtual medical assistant, is a key issue. What AI needs here is personalized, social learning behavior between machines and patients. There is also a technological and scientific limitation: the lack of explainability in AI. Methods such as deep learning act like black boxes. A single verdict produced by a process that can be summed up as “input big data, output results” is not sufficient for practitioners or patients. For practitioners, it is a matter of taking responsibility for supporting, understanding and controlling the patient’s treatment pathway; for patients, it is a matter of adhering to a treatment and therapy approach, which is crucial to their involvement in their own care.

However, the greatest challenges facing AI are not technological; they are connected to its use, governance, ethics and so on. Going from “cognitive” and “social” technologies to decision-making technologies is a major step, because it means handing responsibility over to digital technology entirely. The methodological bases for such a transition exist, but putting them to use would have a significant impact on the nature of the coevolution of humans and AI. As far as doctors are concerned, the January 2018 report by the French Medical Council (CNOM) is quite clear: practitioners wish to keep control over decision-making. “Machines must serve man, not control him,” the report states. Machines must be restricted to augmenting decision-making or diagnoses, as with IBM Watson. As philosopher and essayist Miguel Benasayag astutely points out, “AI does not ask questions, Man has to ask them.”

Keeping humans in charge of decision-making augmented by AI has become an even more crucial issue in recent years. Since 2016, society has been facing its most serious crisis of confidence in social media and digital service platforms since the dawn of the digital age, marked by the creation of the World Wide Web in 1990. After nearly 30 years, digital society is going through a paradigm shift. Under pressure from citizens whose confidence has been shaken, it is arming itself with new tools, such as the EU General Data Protection Regulation (GDPR). The age of alienating digital technology, which was free to act as a cognitive, social and political colonizer for three decades, is giving way to “sustainable digital technology” that puts citizens at the center of the cyber-sphere by giving them control over their data and their digital lives. In short, it is a matter of citizen empowerment.

In healthcare, this trend translates into “patient empowerment.” The report by the French Medical Council and the health report for the General Council for the Economy both advocate a new healthcare model, a “health democracy” based on “6P health”: Preventative, Predictive, Personalized, Participatory, Precision-based and Patient-focused.

“Humanistic AI” for the new treatment pathway

The following extract from the January 2018 CNOM report asserts that healthcare improvements provided by AI must focus on the patient, ethics and building a trust-based relationship between patient and caregiver: “Keeping secrets is the very basis of people’s trust in doctors. This ethical requirement must be incorporated into big data processing when building algorithms.” This is also expressed in the recommendations set forth in Cédric Villani’s report on Artificial Intelligence. Specifically, humanistic artificial intelligence for healthcare and society would include three components to ensure “responsible improvements”: responsibility, measurability and native ethics.

The complexity of medical devices and processes, the large number of parties involved, and the need for instant access to huge quantities of highly secure data call for AI as a Trusted Service (AIaaTS) platforms, which natively integrate all the virtues of “sustainable digital technology.” AIaaTS is based on a data vault that incorporates the three key aspects of digital cognitive technologies (perception, reasoning and action) and is not limited to deep learning or machine learning.

Guarantees of trust, ethics, measurability and responsibility would rely on the tools of the new digital society. Native compliance with the GDPR, coupled with strong user authentication systems, would make it possible to ensure patient safety. The blockchain, with its smart contracts, can also play a role by enabling stronger notarization of administrative management and medical procedures. Indicators of ethics and explainability already exist to limit the black-box effect as much as possible. Other indicators, similar to the Value-Based Health Care model developed by Harvard University Professor Michael Porter, measure the results of AI in healthcare and its added value for the patient.

All these open-ended questions allow us to reflect on the future of AI. The promised cognitive and functional improvements must be truly responsible improvements and must not be made at the expense of alienating individuals and society. If AI can be described as a machine for improvements, humanistic AI is a machine for responsible improvements.