EUROP: Testing an Operator Network in a Single Room

The next article in our series on the technology platforms of the Carnot Télécom & Société numérique institute looks at EUROP (Exchanges and Usages for Operator Networks) at Télécom Saint-Étienne. This platform physically tests network configurations to meet service providers’ needs. We discussed it with its director, Jacques Fayolle, and assignment manager Maxime Joncoux.

What is EUROP?

Jacques Fayolle: The EUROP platform was designed through a dual partnership between the Conseil Départemental de la Loire and LOTIM, a local telecommunications company and subsidiary of the Axione group. EUROP brings together researchers and engineers from Télécom Saint-Étienne, specialized in networks and telecommunications and in computer science. We are seeing an increasing convergence between infrastructure and the services that use this infrastructure. This is why these two skillsets are complementary.

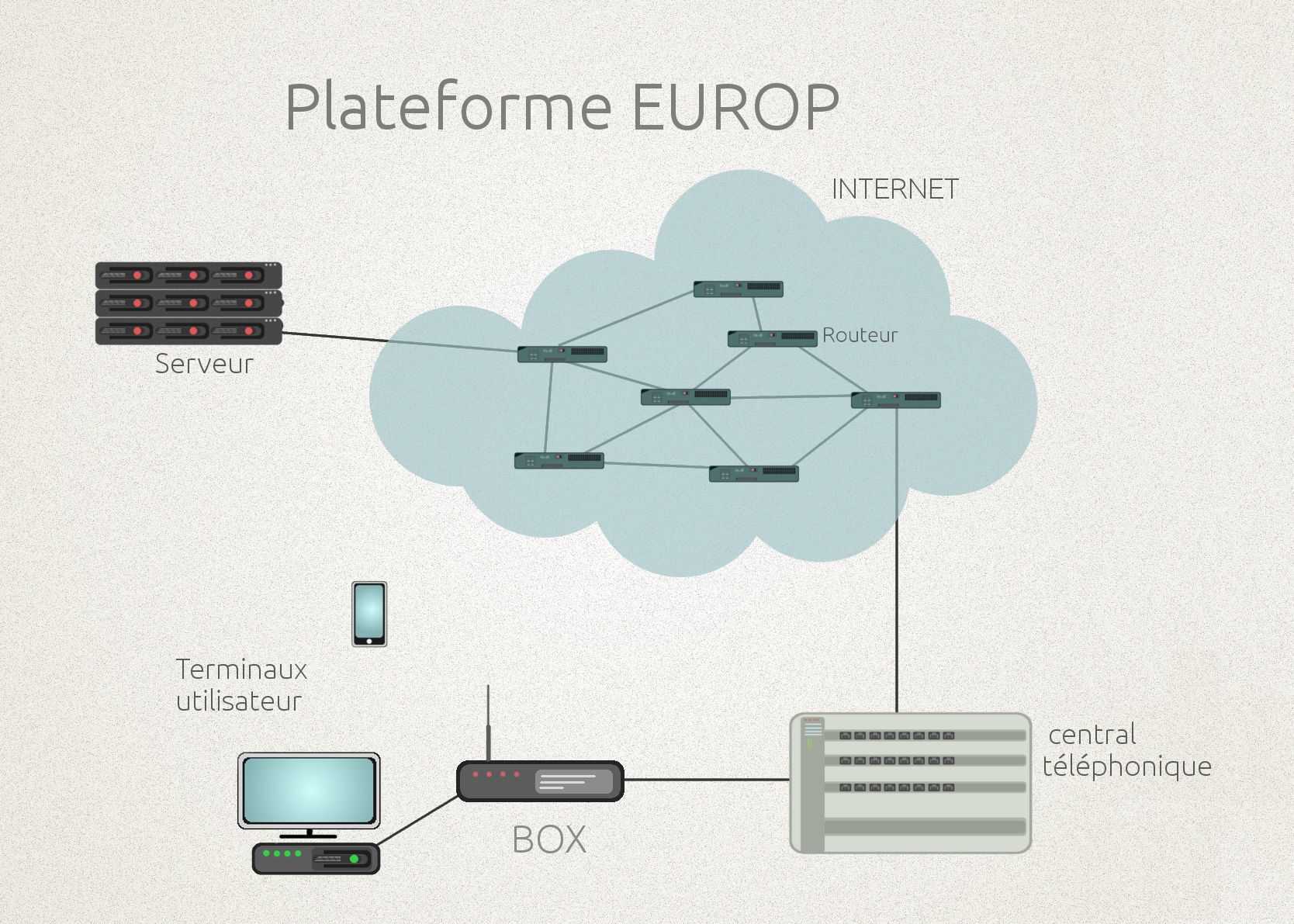

The goal of the platform is to simulate an operator network in a single room. To do so, we reconstructed a full network, from the production of services to consumption by a client company or an individual. This enabled us to depict every step in the distribution chain of a network, up to the home.

What technology is available on the platform?

JF: We are currently using wired technologies, which make up the operator part of a fiber network. We are particularly interested in being able to compare the usage of a service according to the protocols used as the signal is transferred from the server to the final customer. For instance, we can study what happens in a housing estate when an ADSL connection is replaced by FTTH fiber optics (Fiber to the Home).

The platform’s technology evolves, but the platform as a whole never changes. We only add new possibilities, because our aim is to compare technologies with each other. A telecommunications system has a lifecycle of 5 to 10 years. At the beginning, we mostly used copper technology, then we added point-to-point fiber, then point-to-multipoint fiber. We now have several dozen different technologies on the platform, which roughly corresponds to all the technologies currently used by telecommunications operators.

Maxime Joncoux: And they are all operational. The goal is to test the technical configurations in order to understand how a particular type of technology works, according to the physical layout of the network we put in place.

How can a network be represented in one room?

MJ: A real network is very big, but its equipment actually fits into a small space. In Saint-Étienne, for example, the network fits into one large building, yet it covers all communications in the city. That represents around 100,000 copper cables, which we have scaled down: instead of having 30 connections, we only have one or two. As for the 80 kilometers of fiber in this network, they are simply wound around a coil.
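Winding fiber onto a coil does not shorten the optical path, so the spool still adds roughly the propagation delay and optical loss of the real link. The Python sketch below estimates both for a spool of a given length. The group index (1.468) and attenuation (0.2 dB/km at 1550 nm) are assumed typical values for standard single-mode fiber, not figures from the EUROP platform. The function name `spool_characteristics` is hypothetical.

```python
from __future__ import annotations

# Assumed typical values for standard single-mode fiber (not EUROP measurements).
SPEED_OF_LIGHT_KM_PER_S = 299_792.458
GROUP_INDEX = 1.468          # assumption: typical group index at 1550 nm
ATTENUATION_DB_PER_KM = 0.2  # assumption: typical fiber loss at 1550 nm


def spool_characteristics(length_km: float) -> tuple[float, float]:
    """Return (one-way delay in microseconds, fiber loss in dB) for a spool."""
    # Light travels at c / n inside the fiber, so delay = length * n / c.
    delay_s = length_km * GROUP_INDEX / SPEED_OF_LIGHT_KM_PER_S
    # Attenuation grows linearly with length when expressed in dB.
    loss_db = length_km * ATTENUATION_DB_PER_KM
    return delay_s * 1e6, loss_db


delay_us, loss_db = spool_characteristics(80)
print(f"80 km spool: ~{delay_us:.0f} µs one way, ~{loss_db:.0f} dB fiber loss")
```

With these assumed values, the script reports roughly 392 µs and 16 dB. A real spool also adds connector and splice losses on top of the fiber loss.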

JF: We also have distance simulators, which are devices we can configure for the distance we want to represent. Thanks to this technology, we can reproduce a real high-speed broadband or ADSL network. This lets us examine, for example, how a service will be consumed depending on whether users have access to a high-speed broadband network, as in the center of Paris, or are in an isolated rural area where speeds might be slower. EUROP allows us to physically test these networks, rather than relying on IT models.

It is not a simulation, but a real laboratory reproduction. We can set up scenarios to analyze and compare a situation with other configurations. We can therefore directly assess the potential impact of a change in technology across the chain of a service proposed by an operator.

Who is the platform for?

JF: We are directly targeting companies that want to innovate with the platform, either by validating service configurations or by assessing the evolution of a particular piece of equipment in order to achieve better-quality service or faster speed. The platform is also used directly by the school as a learning and research tool. Finally, it allows us to raise awareness among local officials in rural areas about how increasing bandwidth can be a way of improving their local economy.

MJ: For local officials, we aim to provide a practical guide on standardized fiber deployment. The goal is not for Paris and Lyon to have fiber within five years while the rest of France still uses ADSL.

EUROP platform. Credits: Télécom Saint-Étienne

Could you provide some examples of partnerships?

JF: We carried out a study for Adista, a local telecommunications operator. They presented the network load they needed to bear for an event of national stature. Our role was to determine the necessary configuration to meet their needs.

We also have a partnership with IFOTEC, an SME near Grenoble that creates innovative networks. We worked together to provide high-speed broadband access in geographically difficult areas, where the distance to the network node is greater than it is in cities. The SME has developed DSL offset techniques (part of the connection uses copper, but there is fiber at the end) that provide at least 20 Mbit/s 80 kilometers away from the network node. These are the kinds of industrial companies we aim to innovate with: companies looking for innovative protocols or hardware.

What does the Carnot accreditation bring you?

JF: The Carnot label gives us visibility. SMEs are always a little hesitant to collaborate with academics, and this label brings us credibility. In addition, the associated quality charter gives our contracts more substance.

What is the future of the platform?

JF: Our goal is to shift towards OpenStack[1] technology, which is used in large data centers, so that we can move into Big Data and Cloud Computing. Many companies are wondering how to operate their services in cloud mode. We are also looking into setting up configuration systems adapted to the Internet of Things, which requires an efficient network. The EUROP platform enables us to validate the necessary configurations.

[1] A platform based on open-source software for cloud computing.

The TSN Carnot institute, a guarantee of excellence in partnership-based research since 2006

Having first received the Carnot label in 2006, the Télécom & Société numérique Carnot institute is the first national “Information and Communication Science and Technology” Carnot institute. Home to over 2,000 researchers, it focuses on the technical, economic and social implications of the digital transition. In 2016, the Carnot label was renewed for the second consecutive time, demonstrating the quality of the innovations produced through collaborations between researchers and companies. The institute encompasses Télécom ParisTech, IMT Atlantique, Télécom SudParis, Télécom École de Management, Eurecom, Télécom Physique Strasbourg, Télécom Saint-Étienne, École Polytechnique (LIX and CMAP laboratories), Strate École de Design and Femto Engineering.

This operates as a digital platform and aims to replace the existing tools used by orthoptists, which are not very ergonomic. This technology consists solely of a computer, a pair of 3D glasses, and a video projector. It makes the technology more widely available by reducing the duration of tests. The glasses are made with shutter lenses, allowing the doctor to block each eye in synchronization with the material being projected. This orthoptic tool is used to evaluate the different degrees of binocular vision. It aims to help adolescents who have difficulties with fixation or concentration.

The researchers have used liquid crystals for many industrial purposes (protection goggles, spectral filters, etc.). In fact, liquid crystals will soon be part of our immediate surroundings without us even knowing it. They are used in flat screens, 3D and augmented reality goggles, and as a camouflage technique (Smart Skin). To make these applications possible, Arago’s manufacturing and testing facilities are unique in France (including over 150 m² of cleanrooms).