Improving heating network performance through modeling

At the IMT “Energy in the Digital Revolution” conference held on April 28, Bruno Lacarrière, an energetics researcher with IMT Atlantique, presented modeling approaches for improving the management of heating networks. Combined with digital technology, these approaches support heating distribution networks in the transition towards smart management solutions.

The building sector accounts for 40% of European energy consumption. As a result, renovating this energy-intensive sector is a key measure in France's law on the energy transition for green growth, which aims to improve energy efficiency and reduce greenhouse gas emissions. In this context, heating networks currently supply approximately 10% of the heat distributed in Europe. These systems deliver space heating and domestic hot water and can draw on any energy source, although most are currently fossil-fueled. “Heating networks use old technology that, for the most part, is not managed optimally. Just like smart grids for electrical networks, they must benefit from new technology to ensure better environmental and energy management,” explains Bruno Lacarrière, a researcher with IMT Atlantique.

Understanding the structure of an urban heating network

A heating network is made up of a set of pipes that run through the city, connecting energy sources to buildings. Its purpose is to transport heat over long distances while limiting losses. A given network may have several sources (or production units): a waste incineration plant, a gas or biomass heating plant, surplus industrial heat, or a geothermal plant. Pipelines carry the heat from these units, as liquid water (or occasionally steam), to substations, which then redistribute it to the individual buildings.
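To give a sense of what "transporting heat" means in numbers, the sketch below computes the thermal power carried by a hot-water loop using the standard relation Q = ṁ · c_p · (T_supply − T_return). It is an illustration only, not part of the researchers' models: the function name `thermal_power_kw` and the example figures are hypothetical, and it assumes a constant specific heat for liquid water of about 4.186 kJ/(kg·K).

```python
# Hypothetical illustration: heat power carried by a hot-water pipe.
# Assumes a constant specific heat for liquid water (temperature dependence ignored).
CP_WATER_J_PER_KG_K = 4186.0


def thermal_power_kw(mass_flow_kg_s: float, t_supply_c: float, t_return_c: float) -> float:
    """Return the heat delivered by a water loop, in kW.

    Q = m_dot * cp * (T_supply - T_return), converted from W to kW.
    """
    if mass_flow_kg_s < 0:
        raise ValueError("mass flow must be non-negative")
    return mass_flow_kg_s * CP_WATER_J_PER_KG_K * (t_supply_c - t_return_c) / 1000.0


if __name__ == "__main__":
    # 10 kg/s of water cooling from 90 °C to 60 °C
    print(f"{thermal_power_kw(10.0, 90.0, 60.0):.1f} kW")
```

With 10 kg/s of water cooling from 90 °C to 60 °C, the loop delivers about 1,256 kW. The same relation shows that, for a fixed return temperature, a lower supply temperature requires a higher flow rate to deliver the same power.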

These seemingly simple networks are in fact becoming increasingly complex. As cities transform, new energy sources and new consumers are being added. “We now have configurations that are more or less centralized, and sometimes intermittent. What is the best way to manage the overall system? The complexity of these networks is similar to that of electrical networks, on a smaller scale. This is why we are looking at whether a ‘smart’ approach could be used for heating networks,” explains Bruno Lacarrière.

Modeling and simulation for the a priori and a posteriori assessment of heating networks

To deal with this issue, researchers are modeling both the heating networks and the demand. Developing a reliable model requires a minimum amount of information. For demand, the buildings’ consumption history can be used to anticipate future needs. However, these data are not always available. The researchers can also develop physical models based on minimal knowledge of the buildings’ characteristics. Yet some information remains unavailable. “We do not have access to all the buildings’ technical information. We also lack information on how the inhabitants occupy the buildings,” Bruno Lacarrière points out. “We rely on simplified approaches, based on hypotheses or external references.”
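One common example of such a simplified approach is the heating degree-day method, which estimates demand from outdoor temperatures and a single aggregate building parameter. The sketch below is an assumption chosen for illustration, not necessarily the method used at IMT Atlantique. The 18 °C base temperature, the function names and the heat loss coefficient (in kW/K) are hypothetical inputs; it also ignores solar and internal gains, hot water and occupancy.

```python
from typing import Sequence


def heating_degree_days(daily_mean_temps_c: Sequence[float], base_temp_c: float = 18.0) -> float:
    """Sum of (base - outdoor mean) over days colder than the base temperature."""
    return sum(max(0.0, base_temp_c - t) for t in daily_mean_temps_c)


def estimate_heat_demand_kwh(
    daily_mean_temps_c: Sequence[float],
    heat_loss_coeff_kw_per_k: float,
    base_temp_c: float = 18.0,
) -> float:
    """Rough space-heating demand: H [kW/K] * degree-days [K·day] * 24 h/day.

    H is a hypothetical aggregate heat loss coefficient for the building,
    the kind of parameter estimated when detailed technical data is missing.
    """
    hdd = heating_degree_days(daily_mean_temps_c, base_temp_c)
    return heat_loss_coeff_kw_per_k * hdd * 24.0


if __name__ == "__main__":
    temps = [5.0, 10.0, 20.0]  # three days of daily mean outdoor temperature, °C
    print(heating_degree_days(temps))            # degree-days below 18 °C
    print(estimate_heat_demand_kwh(temps, 2.0))  # kWh for a building with H = 2 kW/K
```

For the three days above, the building accumulates 21 degree-days, giving roughly 1,008 kWh of heating demand. A milder climate scenario lowers the degree-day total, which is one way such a model can reflect the effect of climate change on demand.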

For the heating networks, researchers assess the best use of the heat sources (fossil, renewable, intermittent, storage, excess heat, etc.), based on a priori knowledge of the production units connected to the network. The network as a whole must deliver the heat distribution service, and it must do so in a cost-effective and environmentally friendly manner. “Our models allow us to simulate the entire system, taking into account the constraints and characteristics of the sources and the distribution. The demand then becomes a constraint.”
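To illustrate what "demand as a constraint" can mean in the simplest case, the sketch below uses a merit-order dispatch: sources are ranked by operating cost plus an optional carbon penalty, then loaded cheapest first until demand is met. This is a swapped-in textbook technique, not the researchers' simulation model. The `HeatSource` fields, the carbon price and all example figures are hypothetical, and the sketch covers a single time step without pipe losses, hydraulics or storage dynamics.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class HeatSource:
    name: str
    capacity_kw: float
    cost_per_kwh: float    # hypothetical operating cost
    co2_kg_per_kwh: float  # hypothetical emission factor


def merit_order_dispatch(
    sources: List[HeatSource], demand_kw: float, carbon_price_per_kg: float = 0.0
) -> Dict[str, float]:
    """Meet demand by loading sources in order of cost + carbon penalty.

    Demand acts as a hard constraint: if total capacity is insufficient,
    a ValueError is raised instead of returning an infeasible schedule.
    """
    ranked = sorted(
        sources,
        key=lambda s: s.cost_per_kwh + carbon_price_per_kg * s.co2_kg_per_kwh,
    )
    dispatch: Dict[str, float] = {}
    remaining = demand_kw
    for source in ranked:
        output = min(source.capacity_kw, max(remaining, 0.0))
        dispatch[source.name] = output
        remaining -= output
    if remaining > 1e-9:
        raise ValueError(f"demand exceeds total capacity by {remaining:.1f} kW")
    return dispatch


if __name__ == "__main__":
    plant = [
        HeatSource("incineration", 2000.0, 0.01, 0.10),
        HeatSource("biomass", 3000.0, 0.03, 0.02),
        HeatSource("gas", 5000.0, 0.05, 0.20),
    ]
    print(merit_order_dispatch(plant, 4000.0, carbon_price_per_kg=0.2))
```

Here 4,000 kW of demand is covered by the incineration plant and the biomass plant, leaving the gas boiler idle. Raising the carbon price changes the ranking only when it outweighs the cost gap between sources, which is how an environmental criterion can be folded into the same decision.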

The resulting models are then used in various applications. Demand simulation is used to estimate the direct and indirect impacts of climate change on a neighborhood. It makes it possible to assess how heating networks would perform in a milder climate scenario and with high-performance buildings. The network models are used to improve management and operating strategies for existing networks. Together, both types of models help determine how effective deploying information and communication technology could be for smart heating networks.

The move towards smart heating networks

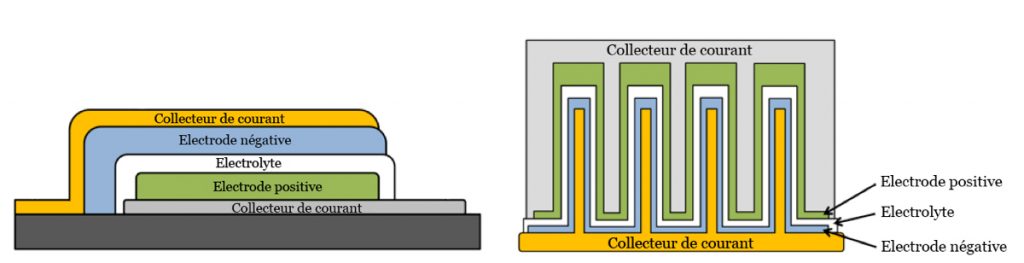

Heating networks are entering their fourth generation, which means they operate at lower temperatures. “We are therefore looking at the idea of networks with different temperature levels, and examining how this would affect overall operation,” the researcher adds.

In addition to modeling, information and communication technology can increase the networks’ efficiency, as it has for electricity networks (smart monitoring, smart control, etc.). “We are assessing this potential based on the technology’s capacity to better meet demand at the right cost,” Bruno Lacarrière explains.

Deploying this technology in substations, together with the information provided by simulation tools, goes hand in hand with the prospect of more decentralized production or storage units, consumers becoming consumer-producers [1], and even connections to networks for other forms of energy (e.g. electricity networks). All of this reinforces the concept of smart networks and the need for related research.

[1] Consumers intermittently become energy consumers and/or producers, owing to the deployment of decentralized production systems (e.g. solar panels).

This article is part of our dossier Digital technology and energy: inseparable transitions!
