Remote sensing explained: from agriculture to major disasters

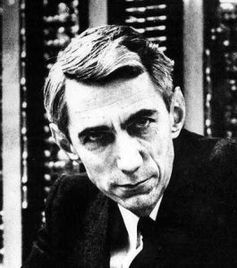

There are numerous applications for remote sensing, from precision agriculture to helping with the deployment of aid in major disasters. Grégoire Mercier, a researcher at IMT Atlantique, explains the key notions of this remote observation method using examples from his research.

Detecting invisible objects from space… this is now possible through remote sensing. This remote observation method is currently used to monitor air quality in urban areas, monitor ecosystems, detect illegal fishing and more. Its applications are as numerous as the territories that can be covered. Its aim: to spatialize information that would otherwise have to be gathered through measurements on the ground.

Over the past few years, Brittany has become a key player in certain remote sensing topics in France and Europe through GIS Bretel and the Vigisat observation platform. “We are going through an interesting period for remote sensing, because we are carrying out missions with an operational purpose”, explains Grégoire Mercier, a researcher at IMT Atlantique who specializes in remote sensing image processing. “We can respond to issues concerning civil society and not just show that we can observe clouds using images”, he adds. With the help of our expert, we will provide an overview of the key elements of remote sensing, from methods to applications.

Retracing the history of signals in remote sensing

Grégoire Mercier defines remote sensing as “any remote observation for which electromagnetic radiation is used to study the interaction between waves and matter. Depending on the result, we obtain an understanding of the object which has interacted with the wave”.

Photons are a key element in both spaceborne and airborne remote sensing. Thousands of them travel through space at the speed of light until they reach the Earth’s atmosphere. At this point, things become more complicated due to the atmosphere itself, clouds and aerosols. The atmosphere is full of obstacles which may prevent photons from reaching the Earth’s surface. For example, when a photon comes into contact with a particle or water droplet, it is partially reflected and/or absorbed and sends new waves out in random directions. If it successfully reaches the ground, what happens next depends on where it lands. Vegetation, oceans, lakes or buildings… the reflected radiation will differ according to the object struck.

Every element has its own spectral signature, which later enables it to be identified on the remote sensing images obtained using a sensor on board a satellite, aircraft or drone.

Spectral response and remote observations

Every object has a unique signature. “When we observe chlorophyll, lots of things appear in green, we see absorption of red and, a step further on, we observe a very specific response in the near-infrared region”, explains Grégoire Mercier. Observation of these spectral responses indicates that the remotely observed zone is covered with vegetation. However, these responses vary with the plant’s moisture content and with the presence of pathosystems (for example, a bacterial disease in the plant). Both modify the plant’s spectral “ID card”. This is how researchers detect water stress or infections before the effects become visible to the naked eye. The process is particularly useful in precision agriculture.
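The contrast between red absorption and near-infrared response described above is often summarized with a vegetation index. The sketch below uses the widely used normalized difference vegetation index (NDVI), which the article itself does not name; the function name and the reflectance values are illustrative assumptions, not measurements from Grégoire Mercier's work.

```python
def ndvi(red, nir):
    """Normalized difference vegetation index for one pixel.

    red, nir: reflectances in [0, 1] for the red and near-infrared bands
    (hypothetical inputs chosen for illustration).
    """
    total = nir + red
    if total == 0:
        return 0.0  # avoid dividing by zero on a fully dark pixel
    return (nir - red) / total


# Illustrative (red, near-infrared) reflectances, not real measurements.
pixels = {
    "healthy crop": (0.05, 0.50),   # strong red absorption, high near-infrared
    "stressed crop": (0.10, 0.30),  # weaker contrast between the two bands
    "bare soil": (0.25, 0.30),
    "water": (0.05, 0.02),
}

for name, (red, nir) in pixels.items():
    print(f"{name}: NDVI = {ndvi(red, nir):+.2f}")
```

With these made-up values the healthy crop scores highest and the stressed crop scores lower, which is the kind of shift in the spectral “ID card” that can flag a problem before it is visible to the naked eye.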

Airborne remote sensing provides information on practices and the evolution of landscapes. “At IMT Atlantique we worked in collaboration with the COSTEL laboratory on the characterization of wetland areas in agriculture. The aim was to create a tool for operational purposes. We were able to prove that the use of hedges helped prevent surface run-off and therefore the pollution of water courses.”

Active/passive remote sensing and wavelengths

There are two types of remote sensing depending on the type of sensor used. When we use the sun’s radiation for observation, we talk of passive remote sensing. In these cases, the sensors used are referred to as “optical”. The wavelengths in question (typically between 400 and 2,500 nanometers) allow lenses to be used. “The electromagnetic wave interacts with the molecular energy level on a nanometric scale, which enables us to observe the constituents directly,” explains Grégoire Mercier. This is how the composition of the Earth’s atmosphere can be observed, for example.

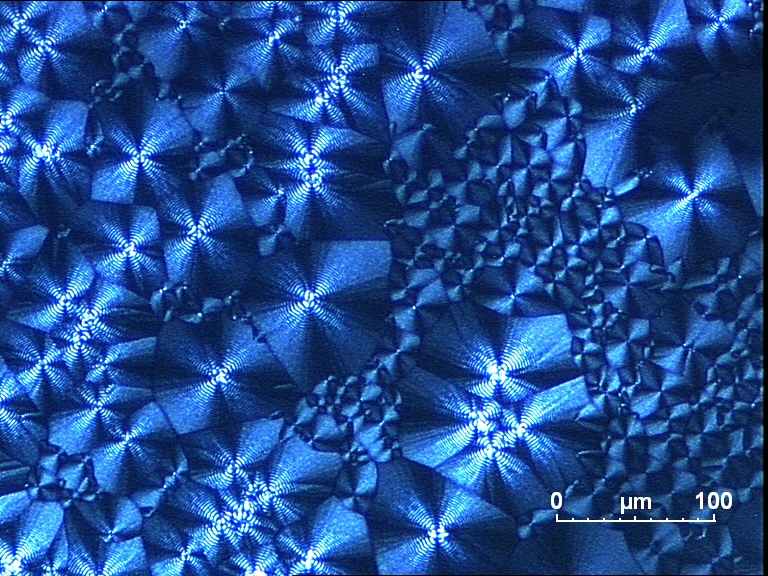

But observations are not limited to the visible part of the electromagnetic spectrum. The aim is to go beyond the human visual system with observations in the thermal infrared range (up to 5 µm in wavelength) and in the microwave range (centimetric or decimetric wavelengths). “When we use wavelengths that are no longer nanometric, but centimetric, the wave/matter interaction with these electromagnetic waves is completely different”, explains Grégoire Mercier.

These interactions are characteristic of radar observations. This time, it is a question of active remote sensing because a wave is emitted toward the surface by the sensor before it receives the response. “For these wavelengths (from 1 centimeter to 1 meter), everything happens as though we were blind and touching the surface with a hand the size of the wavelength. If the surface is flat, we won’t see anything because we won’t feel anything. The texture of an element provides information.” For example, radar observation of the sea’s surface reveals ripples corresponding to capillary waves. If we look at a calm lake, on the other hand, nothing can be seen. This helps scientists identify what they are observing.
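The “hand the size of the wavelength” image can be made concrete with a standard rule of thumb from radar remote sensing, the Rayleigh roughness criterion: a surface looks smooth to the radar when the standard deviation of its height is below the wavelength divided by eight times the cosine of the incidence angle. The article does not cite this criterion; the sketch below is an assumption-laden illustration, and the function name, the 5.6 cm wavelength, the 35° incidence angle and the surface heights are all hypothetical.

```python
import math


def looks_smooth(height_std_m, wavelength_m, incidence_deg):
    """Rayleigh criterion: smooth if height std < wavelength / (8 * cos(theta)).

    height_std_m: standard deviation of surface height, in meters
    wavelength_m: radar wavelength, in meters
    incidence_deg: incidence angle measured from the vertical, in degrees
    """
    limit = wavelength_m / (8.0 * math.cos(math.radians(incidence_deg)))
    return height_std_m < limit


# Hypothetical values: a 5.6 cm radar wavelength at 35 degrees of incidence.
wavelength = 0.056
incidence = 35.0

surfaces = {
    "calm lake (about 1 mm of relief)": 0.001,
    "wind-rippled sea (about 2 cm of relief)": 0.02,
}

for name, height_std in surfaces.items():
    verdict = "smooth, little echo returns" if looks_smooth(
        height_std, wavelength, incidence) else "rough, visible texture"
    print(f"{name}: {verdict}")
```

A smooth surface reflects the emitted wave away from the sensor like a mirror, which is why the calm lake appears dark while the rippled sea shows texture.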

Image processing and applications for large-scale disasters

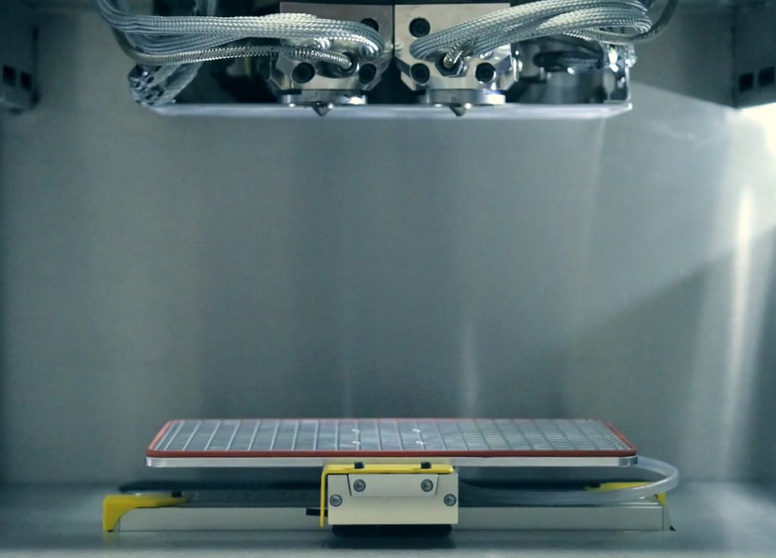

Grégoire Mercier has made improving sensing methods part of his daily work. “My research is based on operational methods that aim to detect changes with a high level of reliability”, he explains. More particularly, the researcher addresses image analysis in time-related applications. He has collaborated with the CNES on the creation of tools used during major disasters.

Initiated in 2000 by the CNES and ESA, the international charter on “Space and natural disasters” currently unites 16 space agencies from all over the world. The charter can be activated following a major natural or industrial disaster depending on the level of severity. “There is always one space agency on watch. When the charter is activated, it must do everything possible to update the map of the affected area”, explains Grégoire Mercier. To obtain this post-disaster map, the space agency requisitions any available satellite, which it uses to map the zone before civil protection teams are deployed. The objective must generally be achieved in three hours.

“Rapid mapping does not allow you to choose the most suitable sensor or the best perspective. The observation then has to be compared to the one corresponding to the previous situation, which can be found in databases. The problem is that the images will probably not have been produced by the same sensor and will not have the same spatial resolution, so the idea is to implement tools that will facilitate comparison of the two images and the management of heterogeneous data. That’s where we come in,” Grégoire Mercier continues.
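To give a feel for the resolution mismatch mentioned above, the sketch below brings a fine post-disaster image down to the grid of a coarser archive image by block averaging, then flags cells whose value changed by more than a threshold. This is a deliberately naive illustration and not the method developed by Grégoire Mercier: it assumes the two images are already aligned, come from comparable sensors and differ only in resolution, which is exactly what rapid mapping cannot guarantee. All names, pixel values and the threshold are hypothetical.

```python
def block_mean(image, factor):
    """Downsample a 2D list by averaging non-overlapping factor x factor blocks."""
    rows = len(image) // factor
    cols = len(image[0]) // factor
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            block = [
                image[r * factor + i][c * factor + j]
                for i in range(factor)
                for j in range(factor)
            ]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def change_mask(before_coarse, after_fine, factor, threshold):
    """Flag coarse cells whose mean value changed by more than threshold."""
    after_coarse = block_mean(after_fine, factor)
    return [
        [abs(a - b) > threshold for a, b in zip(row_after, row_before)]
        for row_after, row_before in zip(after_coarse, before_coarse)
    ]


# Hypothetical archive image (2 x 2 cells) and newer image (4 x 4 pixels).
before = [
    [0.2, 0.2],
    [0.2, 0.2],
]
after = [
    [0.2, 0.2, 0.2, 0.2],
    [0.2, 0.2, 0.2, 0.2],
    [0.2, 0.2, 0.8, 0.8],
    [0.2, 0.2, 0.8, 0.8],
]

for row in change_mask(before, after, factor=2, threshold=0.3):
    print(row)
```

Only the bottom-right cell is flagged here. With images from different sensors, raw pixel values are not directly comparable, which is why dedicated tools for heterogeneous data are needed.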

Having exceeded the initial aims of the SPOT satellite (Satellite for Observation of the Earth), remote sensing has sufficiently proven its worth for it to become a vital tool for the observation of territories. The task is now to establish operational image processing methodologies, as proposed by the ANR PHOENIX project.

Remote sensing for monitoring the natural evolution of a landscape: the ANR PHOENIX project

Grégoire Mercier is involved in the ANR PHOENIX project. Launched in 2015, the project notably aims to establish reliable remote sensing methodologies which will be used in characterizing the natural evolution of landscapes. In this way, it will be possible to analyze large-scale structures such as alpine glaciers and the Amazonian rainforest at different periods to determine the impact of various types of changes on their evolution. The use of satellite data for monitoring the environment will allow analysis of its current state and forecasting of its future state.
