Rethinking ethics in social networks research

Antonio A. Casilli, Télécom ParisTech – Institut Mines-Télécom, University of Paris-Saclay and Paola Tubaro, Centre national de la recherche scientifique (CNRS)

Research into social media is booming, fueled by increasingly powerful computational and visualization tools. However, it also raises ethical and deontological issues that tend to escape the existing regulatory framework. The economic implications of large-scale data platforms, the active participation of network members, the specter of mass surveillance, the effects on health, the role of artificial intelligence: a wealth of questions, all needing answers. A workshop held on December 5-6, 2017 at Paris-Saclay, organized in collaboration with three international research groups, hopes to make progress in this area.

Social networks: what are we talking about?

The expression “social network” has become commonplace, but those who use it to refer to social media such as Facebook or Instagram are often unaware of its origin and true meaning. Studies of social networks began long before the dawn of the digital age. Since the 1930s, sociologists have been conducting studies that attempt to explain the structure of the relationships that connect individuals and groups: their “networks”. These could be, for example, advice relationships between employees of a business, or friendships between pupils in a school. Such networks can be represented as points (the pupils) connected by lines (the relationships).

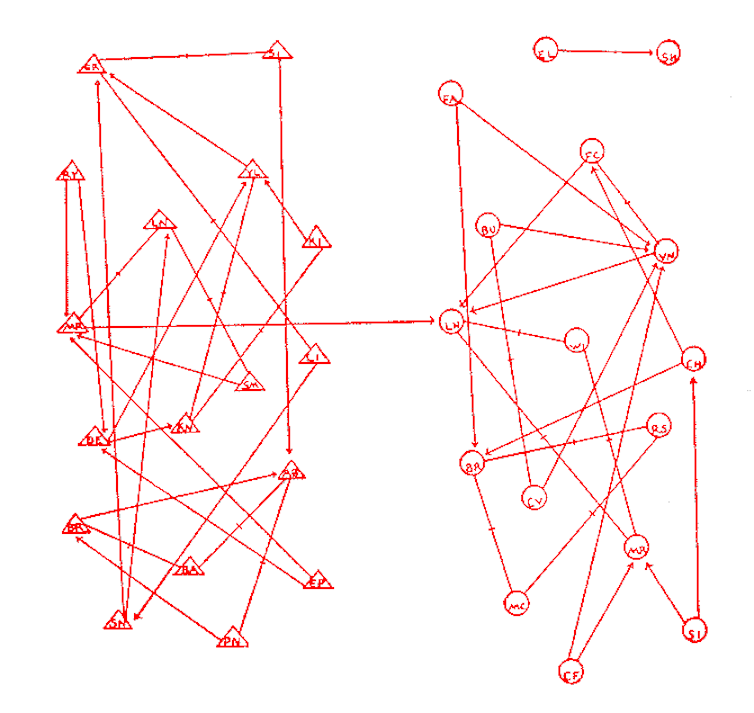

A graphic representation of a social network (friendships between pupils at a school), created by J.L. Moreno in 1934. Circles = girls, triangles = boys, arrows = friendships. J.L. Moreno, 1934, CC BY
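The points-and-lines representation described above can be sketched in a few lines of Python. This is only an illustration: the pupils' names and the friendship nominations are invented (they are not Moreno's data), and the function names are hypothetical. Each arrow goes from the pupil who names a friend to the pupil who is named.

```python
from collections import defaultdict

# Hypothetical friendship nominations: (chooser, chosen).
# Names are invented for illustration; they are not Moreno's data.
nominations = [
    ("Ana", "Ben"),
    ("Ben", "Ana"),
    ("Cleo", "Ana"),
    ("Dan", "Cleo"),
    ("Cleo", "Dan"),
]

def build_network(pairs):
    """Return {pupil: set of pupils they name as friends}."""
    network = defaultdict(set)
    for chooser, chosen in pairs:
        network[chooser].add(chosen)
        network[chosen]  # pupils who are only chosen still appear as points
    return dict(network)

def nominations_received(network):
    """Count the incoming arrows for each pupil."""
    counts = {pupil: 0 for pupil in network}
    for friends in network.values():
        for friend in friends:
            counts[friend] += 1
    return counts

def mutual_friendships(network):
    """Return the pairs of pupils whose arrows go both ways."""
    return {
        tuple(sorted((a, b)))
        for a, friends in network.items()
        for b in friends
        if a in network.get(b, set())
    }

network = build_network(nominations)
received = nominations_received(network)
mutual = mutual_friendships(network)
```

Even in this toy case, the structure carries information that no single answer contains: who is chosen most often, and which friendships are reciprocated.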

Well before any studies into the social aspects of Facebook and Twitter, this research shed significant light on a range of topics: the role of spouses in a marriage; the importance of “weak ties” in job hunting; the “informal” organization of a business; the diffusion of innovation; the education of political and social elites; and mutual assistance and social support when faced with ageing or illness. The designers of digital platforms such as Facebook now adopt some of the analytical principles that this research was based on, founded on mathematical graph theory (although they often pay less attention to the associated social issues).

Researchers in this field understood very quickly that the classic principles of research ethics (especially the informed consent of participants in a study and the anonymization of any data relating to them) were not easy to guarantee. In social network research, the focus is never on a single individual, but rather on the links between the participant and other people. If those other people are not involved in the study, it is hard to see how their consent can be obtained. The results may also be hard to anonymize, as visuals can often be revealing, even when no personal identifiers are attached.
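Both difficulties can be made concrete with a small, deliberately simplified Python sketch. Everything in it is hypothetical: the participant labels, the reported ties and the function names are invented for illustration, and counting ties is only one of many structural features that could make a person recognizable.

```python
from collections import Counter

# Hypothetical study: only these people agreed to take part.
participants = {"P1", "P2", "P3"}

# Each participant reports their contacts; some contacts are outsiders.
reported_ties = [
    ("P1", "P2"),
    ("P1", "X1"),
    ("P2", "X1"),
    ("P3", "X1"),
    ("P3", "X2"),
]

def people_without_consent(ties, consenting):
    """People who appear in the data but never agreed to be studied."""
    everyone = {person for tie in ties for person in tie}
    return everyone - consenting

def pseudonymize(ties):
    """Replace names with arbitrary numbers, keeping the structure intact."""
    labels = {}

    def label(name):
        return labels.setdefault(name, len(labels))

    return [(label(a), label(b)) for a, b in ties], labels

def uniquely_connected(ties):
    """Nodes whose number of ties is shared by no other node."""
    degree = Counter(person for tie in ties for person in tie)
    frequency = Counter(degree.values())
    return {node for node, d in degree.items() if frequency[d] == 1}

outsiders = people_without_consent(reported_ties, participants)
anonymous_ties, _ = pseudonymize(reported_ties)
standouts = uniquely_connected(anonymous_ties)
```

In this toy data set, two people who never consented end up in the network, and both still stand out after their names are replaced by numbers, simply because nobody else has the same number of ties.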

Digital ethics: a minefield

Academics have been pondering these ethical problems for quite some time: in 2005, the journal Social Networks dedicated an issue to these questions. The dilemmas faced by researchers are exacerbated today by the increased availability of relational data collected and used by digital giants such as Facebook and Google. New problems arise as soon as the lines between “public” and “private” spheres become blurred. To what extent do we need consent to access the messages that a person sends to their contacts, their “retweets” or their “likes” on friends’ walls?

Information sources are often the property of commercial companies, and the algorithms these companies use tend to offer a biased perspective on the observations. For example, can a contact made by a user through their own initiative be interpreted in the same way as a contact made on the advice of an automated recommendation system? In short, data doesn’t speak for itself, and we must question the conditions of its use and the ways it is created before thinking about processing it. These dimensions are heavily influenced by economic and technical choices as well as by the software architecture imposed by platforms.

But is negotiation between researchers (especially in the public sector) and platforms (which sometimes stem from major multinational companies) really possible? Does access to proprietary data risk being restricted or unequally distributed, potentially to the disadvantage of public research, especially when it doesn’t correspond to the objectives and priorities of investors?

Other problems emerge when we consider that researchers may even resort to paid crowdsourcing for data production, using platforms such as Amazon Mechanical Turk to ask large numbers of people to respond to a questionnaire, or even to upload their online contact lists. However, these services call into question old assumptions about working conditions and the ownership of what is produced. The ensuing uncertainty hinders research that could have positive impacts on knowledge and on society in general.

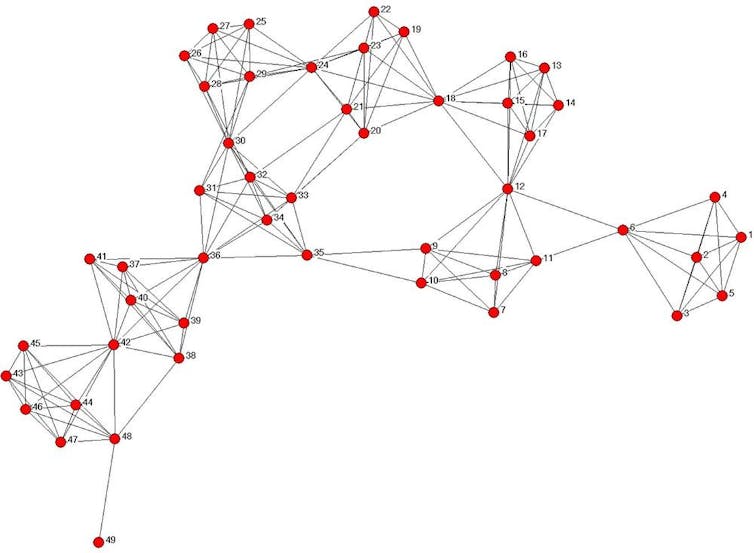

The potential for misappropriation of research results for political or economic ends is multiplied by the availability of online communication and publication tools, which are now used by many researchers. Although the interest among the military and police in social network analysis is already well known (Osama Bin Laden was located and neutralized following the application of social network analysis principles), these appropriations are becoming even more common today, and are less easy for researchers to control. There is an undeniable risk that lies in the use of these principles to restrict civil and democratic movements.

A simulation of the structure of an Al-Qaeda network, “Social Network Analysis for Startups” (fig. 1.7), 2011. Reproduced here with permission from the authors. Kouznetsov A., Tsvetovat M., CC BY

Celebrating researchers

To break this stalemate, the solution is not to add more restrictions, which would only aggravate the constraints that are already inhibiting research. On the contrary, we must create an environment of trust, so that researchers can explore the scope and importance of social networks online and offline, as they are essential in making the most of prominent economic and social phenomena, whilst still respecting people’s rights.

The active role of researchers must be highlighted. Rather than remaining subject to predefined rules, they need to participate in the co-creation of an adequate ethical and deontological framework, drawing on their experience and reflections. This bottom-up approach integrates the contributions of not just academics but also the public, civil society associations and representatives from public and private research bodies. These ideas and reflections could then be brought forward to those responsible for establishing regulations (such as ethics committees).

An international workshop in Paris

Poster for the RECSNA17 Conference

Such is the focus of the workshop “Recent ethical challenges in social-network analysis”. The event was organized in collaboration with international teams (the Social Network Analysis Group of the British Sociological Association, BSA-SNAG; Réseau thématique n. 26 “Social Networks” of the French Sociological Association; and the European Network for Digital Labor Studies, ENDLS), with support from Maison des Sciences de l’Homme de Paris-Saclay and Institut d’études avancées de Paris. The conference will be held on December 5-6. For more information and to sign up, please consult the event website.

Antonio A. Casilli is Associate Professor at Télécom ParisTech (Institut Mines-Télécom, University of Paris-Saclay) and research fellow at Centre Edgar Morin (EHESS). Paola Tubaro is Head of Research at LRI, a computing research laboratory at the Centre national de la recherche scientifique (CNRS), and teaches at ENS.

The original version of this article was published on The Conversation France.

The TSN Carnot institute, a guarantee of excellence in partnership-based research since 2006
