Matthieu Lauras

IMT Mines Albi | #SupplyChain #IndustrialEngineering

[toggle title=”Find all his articles on I’MTech” state=”open”]

[/toggle]


Last name, first name, e-mail address, social media, photographs: your smartphone is a complete summary of your personal information. In the near future, this extremely personal device could even take on the role of digital passport. A number of biometric systems are being explored in order to secure access to these devices. Facial, fingerprint, and iris recognition have the advantage of being recognized by the authorities, making them more popular options, even for research. Jean-Luc Dugelay is a researcher specialized in image processing at Eurecom. He is working with Chiara Galdi to develop an algorithm designed especially for iris recognition on smartphones. The initial results of the study were published in May 2017 in Pattern Recognition Letters. Their objective? Develop an instant, easy-to-use system for mobile devices.

Biometric iris recognition generally uses infrared light, which allows for greater visibility of the characteristics which differentiate one eye from another. “To create a system for the general public, we have to consider the type of technology people have. We have therefore adopted a technique using visible light so as to ensure compatibility with mobile phones,” explains Jean-Luc Dugelay.

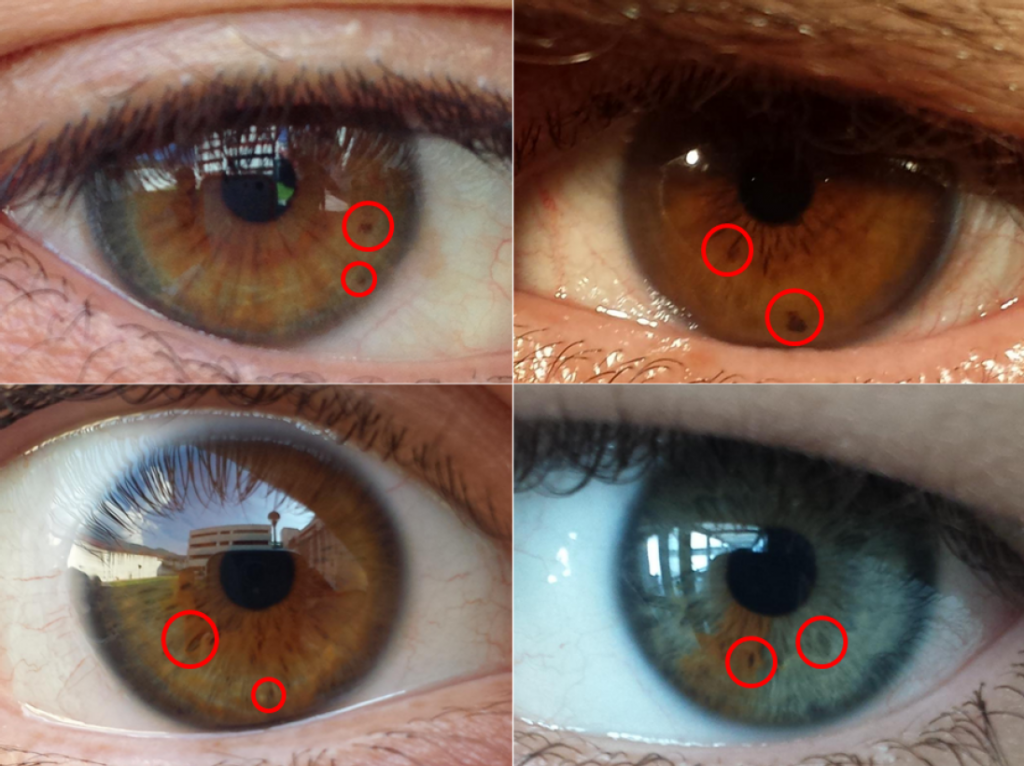

Examples of color spots

The result is the FIRE (Fast Iris REcognition) algorithm, which is based on an evaluation of three parameters of the eye: color, texture, and spots. In everyday life, eye color is approximated by generic shades like blue, green or brown. In FIRE, it is defined by a colorimetric composition diagram. Eye texture corresponds to the ridges and ligaments that form the patterns of the iris. Finally, spots are the small dots of color within the iris. Together, these three parameters are what make the eyes of one individual unique from all others.

When images of irises from databases were used to test the FIRE algorithm, variations in lighting conditions between photographs created difficulties. To remove variations in brightness, the researchers applied a technique to standardize the colors. “The best-known color space is red-green-blue (RGB) but other systems exist, such as LAB. This is a space in which color is expressed according to the lightness ‘L,’ and A and B, which are chromatic components. We are focusing on these last two aspects rather than the overall definition of color, which allows us to exclude lighting conditions,” explains Jean-Luc Dugelay.
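To make the idea concrete, here is a minimal sketch of a standard sRGB to CIE L\*a\*b\* conversion in plain Python, keeping only the two chromatic components. It is not the FIRE implementation: the D65 white point, the function names and the sample pixel values are assumptions chosen for illustration.

```python
def srgb_to_lab(r, g, b):
    """Convert an 8-bit sRGB pixel to CIE L*a*b* (D65 white point assumed)."""
    def to_linear(c):
        # Undo the sRGB transfer curve
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = to_linear(r), to_linear(g), to_linear(b)

    # Linear RGB to CIE XYZ
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    def f(t):
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    xn, yn, zn = 0.95047, 1.0, 1.08883  # D65 reference white
    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)
    lightness = 116 * fy - 16
    a = 500 * (fx - fy)
    b_chroma = 200 * (fy - fz)
    return lightness, a, b_chroma


def chroma_only(rgb):
    """Drop L and keep (a, b), the part of the colour used for comparison here."""
    _, a, b_chroma = srgb_to_lab(*rgb)
    return a, b_chroma


# The same neutral surface under dim and bright light (hypothetical pixels):
dark = srgb_to_lab(64, 64, 64)
light = srgb_to_lab(192, 192, 192)
```

For these two neutral pixels, the lightness values differ while the a and b components both stay close to zero. For real, coloured pixels the separation is only approximate, so this should be read as an illustration of the principle rather than a guarantee of lighting invariance.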

An algorithm then carries out an in-depth analysis of each of the three parameters of the eye. In order to compare two irises, each parameter is studied twice: once on the eye being tested, and once on the reference eye. Distance calculations are then established to represent the degree of similarity between the two irises. These three calculations result in scores which are then merged together by a single algorithm. However, the three parameters do not have the same degree of reliability in terms of distinguishing between two irises. Texture is a defining element, while color is a less discriminating characteristic. This is why, in merging the scores to produce the final calculation, each parameter is weighted according to how effective it is in comparison to the others.
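The weighted merging of the three scores can be sketched as follows. The weights and distance values are hypothetical: the article only states that texture is more discriminating than color, and does not give the actual weights or the distance measures used by FIRE.

```python
def fuse_scores(distances, weights):
    """Weighted average of per-parameter distances (lower means more similar)."""
    total_weight = sum(weights.values())
    return sum(weights[name] * distances[name] for name in weights) / total_weight


# Hypothetical weights: texture counts most, color least.
WEIGHTS = {"texture": 0.5, "spots": 0.3, "color": 0.2}

# Hypothetical distances between the tested iris and the reference iris.
probe_vs_reference = {"color": 0.30, "texture": 0.10, "spots": 0.20}

score = fuse_scores(probe_vs_reference, WEIGHTS)
```

Here a relatively poor color match (0.30) has less influence on the final score than it would in a plain average, because the better texture match carries more weight.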

This algorithm can be used according to two possible configurations which determine its execution time. In the case of authentication, it is used to compare the dimensions of the iris with those of the person who owns the phone. This procedure could be used to unlock a smartphone or confirm bank transactions. The algorithm gives a result in one second. However, when used for identification purposes, the issue is not knowing if the iris is your own, but rather to whom it corresponds. The algorithm could therefore be used for identity verification purposes. This is the basis for the H2020 PROTECT project in which Eurecom is taking part. Individuals would no longer be required to get out of their vehicles when crossing a border, for example, since they could identify themselves at the checkpoint from their mobile phones.
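The difference between the two configurations can be illustrated with a short sketch: authentication is a single comparison against one stored reference, while identification searches a whole gallery of references, which is why its execution time grows with the number of enrolled people. The distance function, the threshold and the feature vectors below are placeholders, not those of FIRE.

```python
def toy_distance(a, b):
    """Placeholder distance: mean absolute difference between feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def authenticate(probe, reference, threshold=0.25, distance=toy_distance):
    """1:1 comparison: is this the owner's iris?"""
    return distance(probe, reference) <= threshold


def identify(probe, gallery, threshold=0.25, distance=toy_distance):
    """1:N search: whose iris is this? Returns an identity or None."""
    best_id, best_distance = None, float("inf")
    for person_id, reference in gallery.items():
        d = distance(probe, reference)
        if d < best_distance:
            best_id, best_distance = person_id, d
    return best_id if best_distance <= threshold else None


# Hypothetical enrolled references and a new capture.
gallery = {"alice": [0.10, 0.20, 0.30], "bob": [0.80, 0.70, 0.90]}
probe = [0.12, 0.22, 0.28]
```

With this data, `authenticate(probe, gallery["alice"])` accepts the capture, `authenticate(probe, gallery["bob"])` rejects it, and `identify(probe, gallery)` returns `"alice"` after comparing against every entry.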

Although FIRE has successfully demonstrated that the iris recognition technique can be adapted for visible light and mobile devices, protocol issues must still be studied before making this system available to the general public. “Even if the algorithm never made mistakes in visible light, it would also have to be proven to be reliable in terms of performance and strong enough to withstand attacks. This use of biometrics also raises questions about privacy: what information is transmitted, to whom, who could store it etc.,” adds Jean-Luc Dugelay.

Several prospects are possible for the future. First of all, the security of the system could be increased. “Each sensor associated with a camera has a specific noise which distinguishes it from all other sensors. It’s like digital ballistics. The system could then verify that it is indeed your iris and in addition, verify that it is your mobile phone based on the noise in the image. This would make the protocol more difficult to hack,” explains Jean-Luc Dugelay. Other possible solutions may emerge in the future, but in the meantime, the algorithmic portion developed by the Eurecom team is well on its way to becoming operational.

The building sector accounts for 40% of European energy consumption. As a result, renovating this energy-intensive sector is an important measure in the law on the energy transition for green growth. This law aims to improve energy efficiency and reduce greenhouse gas emissions. In this context, heating networks currently account for approximately 10% of the heat distributed in Europe. These systems deliver heating and domestic hot water from all energy sources, although today the majority are fossil-fueled. “The heating networks use old technology that, for the most part, is not managed in an optimal manner. Just like smart grids for electrical networks, they must benefit from new technology to ensure better environmental and energy management,” explains Bruno Lacarrière, a researcher with IMT Atlantique.

A heating network is made up of a set of pipes that run through the city, connecting energy sources to buildings. Its purpose is to transport heat over long distances while limiting loss. In a given network, there may be several sources (or production units). The sources may come from a waste incineration plant, a gas or biomass heating plant, an industrial residual surplus, or a geothermal power plant. These units are connected by pipelines carrying heat in the form of liquid water (or occasionally vapor) to substations. These substations then redistribute the heat to the different buildings.

These seemingly simple networks are in fact becoming increasingly complex. As cities are transforming, new energy sources and new consumers are being added. “We now have configurations that are more or less centralized, which are at times intermittent. What is the best way to manage the overall system? The complexity of these networks is similar to the configuration of electrical networks, on a smaller scale. This is why we are looking at whether a ‘smart’ approach could be used for heating networks,” explains Bruno Lacarrière.

To deal with this issue, researchers are working on a modeling approach for the heating networks and the demand. In order to develop the most reliable model, a minimum amount of information is required. For demand, the consumption data history for the buildings can be used to anticipate future needs. However, this data is not always available. The researchers can also develop physical models based on a minimal knowledge of the buildings’ characteristics. Yet some information remains unavailable. “We do not have access to all the buildings’ technical information. We also lack information on the inhabitants’ occupancy of the buildings,” Bruno Lacarrière points out. “We rely on simplified approaches, based on hypotheses or external references.”

For the heating networks, researchers assess the best use of the heat sources (fossil, renewable, intermittent, storage, excess heat…). This is carried out based on the a priori knowledge of the production units they are connected to. The entire network must provide the heat distribution energy service. And this must be done in a cost-effective and environmentally-friendly manner. “Our models allow us to simulate the entire system, taking into account the constraints and characteristics of the sources and the distribution. The demand then becomes a constraint.”
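As a rough illustration of treating demand as a constraint, the sketch below fills a given heat demand from the cheapest available sources first (a simple merit-order rule). This is a deliberately simplified stand-in, not the researchers' model: it ignores network losses, temperatures, storage and intermittency, and the source names, capacities and costs are invented for the example.

```python
def dispatch(sources, demand_mw):
    """Cover demand_mw using the cheapest sources first.

    sources: list of (name, capacity_mw, cost_per_mwh) tuples.
    Returns a dict mapping each source name to the power it delivers.
    """
    plan = {}
    remaining = demand_mw
    for name, capacity_mw, _cost in sorted(sources, key=lambda s: s[2]):
        used = min(capacity_mw, remaining)
        plan[name] = used
        remaining -= used
    if remaining > 1e-9:
        raise ValueError("demand exceeds the total capacity of the sources")
    return plan


# Hypothetical production units: (name, capacity in MW, cost in euros per MWh).
SOURCES = [
    ("gas_boiler", 500, 60),
    ("waste_incineration", 300, 10),
    ("biomass", 200, 35),
]

plan = dispatch(SOURCES, demand_mw=450)
hourly_cost = sum(plan[name] * cost for name, _cap, cost in SOURCES)
```

In this example the waste incineration plant runs at full capacity, biomass covers the remaining 150 MW and the gas boiler stays off. An environmental criterion could be handled the same way by sorting on emissions instead of cost.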

The models that are developed are then used in various applications. The demand simulation is used to measure the direct and indirect impacts climate change will have on a neighborhood. It makes it possible to assess the heat networks in a mild climate scenario and with high performance buildings. The heat network models are used to improve the management and operation strategies for the existing networks. Together, both types of models help determine the potential effectiveness of deploying information and communication technology for smart heating networks.

Heating networks are entering their fourth generation. This means that they are operating at lower temperatures. “We are therefore looking at the idea of networks with different temperature levels, while examining how this would affect the overall operation,” the researcher adds.

In addition to the modelling approach, the use of information and communication technology allows for an increase in the networks’ efficiency, as was the case for electricity (smart monitoring, smart control…). “We are assessing this potential based on the technology’s capacity to better meet demand at the right cost,” Bruno Lacarrière explains.

Deploying this technology in the substations, and the information provided by the simulation tools, go hand in hand with the prospect of deploying more decentralized production or storage units, turning consumers into consumer-producers [1], and even connecting to networks of other energy forms (e.g. electricity networks), thus reinforcing the concept of smart networks and the need for related research.

[1] The consumers become energy consumers and/or producers in an intermittent manner. This is due to the deployment of decentralized production systems (e.g. solar panels).

This article is part of our dossier Digital technology and energy: inseparable transitions!


Jay Humphrey: A general definition of biomechanics is the development, extension, and application of mechanics to study living things and the materials or structures with which they interact. For the general public, however, it is also good to point out that biomechanics is important from the level of the whole body to organs, tissues, cells, and even proteins! Mechanics helps us to understand how proteins fold or how they interact as well as how cells and tissues respond to applied loads. There is even a new area of application we call mechanochemistry, where scientists study how molecular mechanics influences the rate of reactions in the body. Biomechanics is thus a very broad field.

JH: In a way, biomechanics dates back to antiquity. When humans first picked up a stick and used it to straighten up, it was biomechanics. But the field as we know it today emerged in the mid-1960s, with early studies on cells; red blood cells were the first to be studied. So, we have been interested in detailed tissue and cell mechanics for only about 50 years. Protein mechanics is even newer.

JH: There are probably five reasons why biomechanics emerged in the mid-1960s. First, the post-World War II era included a renaissance in mechanics; scientists gained a more complete understanding of the nonlinear field theories. At the same time, computers emerged, which were needed to solve mathematically complex problems in biology and mechanics; this is a second reason. Third, numerical methods, in particular finite element methods, appeared and helped in understanding system dynamics. Another important reason was the space race and the question, ‘How will people respond in outer space, in a zero-gravity environment?’, which is fundamentally a biomechanical question. And finally, this was also the period in which key molecular structures were discovered, like that of DNA (double helix) or collagen (triple helix), the most abundant protein in our bodies. This raised questions about their biomechanical properties.

JH: Today, technological advances give us the possibility to perform high-resolution measurements and imaging. At the same time, there have been great advances in understanding the genome, and how mechanics influences gene expression. I have been a strong advocate of relating biomechanics, which relies on theoretical principles and concepts of mechanics, to what we call today mechanobiology: how cells respond to mechanical stimuli. There has been interest in this relationship since the mid-1970s, but only since the 1990s have we better understood how a cell responds to its mechanical environment by changing the genes it expresses.

JH: Yes, biomechanics benefited tremendously from interdisciplinarity. Many fields and professions must work together: engineers, mathematicians, biochemists, clinicians, and material scientists to name a few. And again, this has been improved through technology: the internet allowed better international collaborations, including web-based exchanges of data and computer programs, both of which increase knowledge.

JH: Yes, and it is interesting how it came about. I have a colleague in Italy, Katia Genovese, who is an expert in optical engineering. She developed an experimental device to improve imaging capabilities during mechanical testing of arteries. We worked with her to increase its applicability to vascular mechanics studies, but we also needed someone with expertise in numerical methods to analyse and interpret the data. Hence, we partnered with Stéphane Avril who had these skills. The three of us could then describe vascular mechanics in a way that was not possible before. Not one of us could have done it alone; we needed to collaborate. Working together, we developed a new methodology and now we use it to better understand artery dissections and aneurysms. For me, the Honoris causa title I have been awarded recognizes the importance of this international collaboration in some way, and I am very pleased about that.

JH: I am proud of a new theory that we proposed, called a ‘constrained mixture theory’ for soft tissue growth and remodelling. It allows one not only to describe a cell or tissue at its current time and place, but also how it can evolve, how it will change when subjected to mechanical loads. The word ‘mixture’ is important, for tissues consist of multiple constituents mixed together: for example, smooth muscle cells, collagen fibers, and elastic fibers in arteries. This is what we call a mixture. It is through interactions among these constituents, as well as through individual properties of each, that the tissue function is achieved. Based on a precise description of these properties, we can describe for instance how an artery will be impacted by a disease, and how it will be altered due to changes in blood circulation. I think this type of predictive capability will help us design better medical devices and therapeutic interventions.

JH: ‘Game changer’ is a strong word, but our research advances definitely have some clear clinical applications. The method we developed to predict where and when a blood clot will form in an aneurysm has the potential to help us better understand and predict patient outcomes. The constrained mixture theory could also have real application in the emerging area of tissue engineering. For example, we are working with Dr. Chris Breuer, a paediatric expert at Nationwide Children’s Hospital in Columbus, Ohio, on the use of our theory to design polymeric scaffolds for replacing blood vessels in children with congenital defects. The idea is that the synthetic component will slowly degrade and be replaced by the body’s own cells and tissues. Clinical trials are in progress in the US, and we are very excited about this project.

JH: About seven years ago, my work was still fundamental science. I then decided to move to Yale University to interact more closely with medical colleagues. So my interest in clinical application is recent. But since we are talking about how biomechanics could be a game changer, I can give you two major breakthroughs made by some colleagues at Stanford University that show how medicine is impacted. Dr. Alison Marsden uses a computational biomechanical model to improve surgical planning, including that for the same tissue engineered artery that we are working on. And Dr. Charles Taylor has started a new company called HeartFlow that he hopes will allow computational biomechanics to replace a very invasive diagnostic approach with a non-invasive approach. There is great promise in this idea and ones like it.

JH: I plan to focus on three main areas for the future. First is designing better vascular grafts using computational methods. I also hope to increase our understanding of aneurysms, from a biomechanical and a genetic basis. And third is understanding the role of blood clots in thrombosis. These are my goals for the years to come.

[dropcap]W[/dropcap]hat if one transition was inextricably linked with another? Faced with environmental challenges, population growth and the emergence of new uses, a transformation is underway in the energy sector. Renewable resources are playing a larger role in the energy mix, advances in housing have helped reduce heat loss and modes of transportation are changing their models to limit the use of fossil fuels. But even beyond these major metamorphoses, the energy transition in progress is intertwined with that of digital technology. Electrical grids, like heat networks, are becoming “smart.” Modeling is now seen as indispensable from the earliest stages of the design or renovation of buildings.

The line dividing these two transitions is indeed so fine that it is becoming difficult to determine to which category the changes taking place in the world of connected devices and telecommunications belong. For mobile phone operators, power supply management for mobile networks is a crucial issue. The proportion of renewable energy must be increased, but this leads to a lower quality of service. How can the right balance be determined? And telephones themselves pose challenges for improving energy autonomy, in terms of both hardware and software.

This interconnection illustrates the complexity of the changes taking shape in contemporary societies. In this report we seek to present issues situated at the interface between energy and digital technology. Through research carried out in the laboratories of IMT graduate schools, we outline some of the major challenges currently facing civil society, economic stakeholders and public policymakers.

For consumers, the forces at play in the energy sector may appear complex. Often reduced to a sort of technological optimism without taking account of scientific reality, they are influenced by significant political and economic issues. The first part of this report helps reframe the debate while providing an overview of the energy transition through an interview with Bernard Bourges, a researcher who specializes in this subject. The European SEAS project is then presented as a concrete example of the transformations underway, offering a look at the reality behind the promises of smart grids.


The second part of the report focuses on heat networks, which, like electric networks, can also be improved with the help of algorithms. Heat networks represent 9% of the heat distributed in Europe and can therefore represent a catalyst for reducing energy costs in buildings. Bruno Lacarrière’s research illustrates the importance of digital modeling in the optimization of these networks (article to come). And because reducing heat loss is also important at the level of individual buildings, we take a closer look at Sanda Lefteriu’s research on how to improve energy performance for homes.


The report concludes with a third section dedicated to the telecommunications sector. An overview of Loutfi Nuaymi’s work highlights the role played by operators in optimizing the energy efficiency of their networks and demonstrates how important algorithms are becoming for them. We also examine how electric consumption can be regulated by reducing the demand for calculations in our mobile phones, with a look at research by Maurice Gagnaire. Finally, since connected devices require ever-more powerful batteries, the last article explores a new generation of lithium batteries, and the high hopes for the technologies being developed in Thierry Djenizian’s laboratory.


[divider style=”normal” top=”20″ bottom=”20″]

To further explore this topic:

To learn more about how the digital and energy transitions are intertwined, we suggest these related articles from the I’MTech archives:

5G will also consume less energy

In Nantes the smart city becomes a reality with mySMARTLife

Data centers: taking up the energy challenge

The bitcoin and blockchain: energy hogs

[divider style=”normal” top=”20″ bottom=”20″]

Good things come to those who wait. Seven years after the Grenelle 2 law, a decree published on May 10 requires buildings (see insert) used for the private and public service sector to improve their energy performance. The text sets a requirement to reduce consumption by 25% by 2020 and by 40% by 2030.[1] To do so, reliable, easy-to-use models must be established in order to predict the energy behavior of buildings in near-real-time. This is the goal of research being conducted by Balsam Ajib, a PhD student supervised by Sanda Lefteriu and Stéphane Lecoeuche of IMT Lille-Douai as well as by Antoine Caucheteux of Cerema.

State-of-the-art experimental approaches for evaluating the energy performance of buildings use models with what are referred to as “linear” structures. This means that input variables for the model (weather, radiation, heating power etc.) are only linked to the output of this same model (temperature of a room etc.) through a linear equation. However, a number of phenomena which occur within a room, and therefore within a system, can temporarily disrupt its thermal equilibrium. For example, a large number of individuals inside a building will lead to a rise in temperature. The same is true when the sun shines on a building when its shutters are open.

Based on this observation, researchers propose using what is called a “commutation” model, which takes account of discrete events occurring at a given moment which influence the continuous behavior of the system being studied (change in temperature). “For a building, events like opening/closing windows or doors are commutations (0 or 1) which disrupt the dynamics of the system. But we can separate these actions from linear behavior in order to identify their impacts more clearly,” explains the researcher. To do so, she has developed several models, each of which corresponds to a situation. “We estimate each configuration: for example, a situation in which the door and windows are closed and heat is set at 20°C corresponds to one model. If we change the temperature to 22°C, we identify another and so on,” adds Sanda Lefteriu.
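The principle of such a model can be sketched with one linear sub-model per configuration and a discrete signal that selects which one is active at each time step. Everything below is hypothetical: the first-order structure, the coefficients and the input values are chosen for illustration and are not taken from the researchers' work.

```python
# One linear sub-model per discrete mode (all coefficients are hypothetical):
#   T[k+1] = a * T[k] + b * T_outdoor[k] + c * heating_power[k]
MODES = {
    0: {"a": 0.90, "b": 0.05, "c": 0.002},  # window closed
    1: {"a": 0.70, "b": 0.28, "c": 0.002},  # window open: stronger coupling to outdoors
}


def simulate(t_initial, outdoor_temps, heating_powers, window_open):
    """Simulate room temperature; window_open[k] (0 or 1) picks the active model."""
    temps = [t_initial]
    for t_out, power, mode in zip(outdoor_temps, heating_powers, window_open):
        m = MODES[mode]
        temps.append(m["a"] * temps[-1] + m["b"] * t_out + m["c"] * power)
    return temps


# Same weather (5 °C) and heating (1000 W), with the window closed or open.
closed = simulate(20.0, [5.0] * 3, [1000.0] * 3, [0, 0, 0])
opened = simulate(20.0, [5.0] * 3, [1000.0] * 3, [1, 1, 1])
```

Within each mode the behavior is linear, but the overall response is not: with identical weather and heating, the simulated room stays near 20 °C when the window is closed and cools quickly when it is open.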

To create these scenarios, researchers use real data collected inside buildings following measurement programs. Sensors were placed on the campus of IMT Lille-Douai and in passive houses which are part of the INCAS platform in Chambéry. These uninhabited residences offer a completely controlled site for experimenting since all the parameters related to the building (structure, materials) are known. These rare infrastructures make it possible to set up physical models, meaning models built according to the specific characteristics of the infrastructures being studied. “This information is rarely available so that’s why we are now working on mathematical modeling which is easier to implement,” explains Sanda Lefteriu.

“We’re only at the feasibility phase but these models could be used to estimate heating power and therefore energy performance of buildings in real time,” adds the researcher. Applications will be put in place in social housing as part of the ShINE European project in which IMT Lille-Douai is taking part. The goal of this project is to reduce carbon emissions from housing.

These tools will be used for existing buildings. Once the models are operational, control algorithms installed on robots will be placed in the infrastructures. Finally, another series of tools will be used to link physical conditions with observations in order to focus new research. “We still have to identify which physical parameters change when we observe a new dynamic,” says Sanda Lefteriu. These models remain to be built, just like the buildings which they will directly serve.

[1] Buildings currently represent 40-45% of energy spending in France across all sectors. Find out more about key energy figures in France.

This article is part of our dossier Digital technology and energy: inseparable transitions!

[box type=”shadow” align=”” class=”” width=””]

The energy performance of a building includes its energy consumption and its impact in terms of greenhouse gas emissions. Consideration is given to the hot water supply system, heating, lighting and ventilation. Other building characteristics to be assessed include insulation, location and orientation. An energy performance certificate is a standardized way to measure how much energy is actually consumed or estimated to be consumed according to standard use of the infrastructure. [/box]

Thierry Djenizian: First of all, it’s a matter of miniaturization. Since the 1970s, Moore’s law, which predicts an increase in the performance of microelectronic devices with their miniaturization, has been upheld. But in the meantime, the energy aspect has not really kept up. We are now facing a problem: we can manufacture very sophisticated sub-micrometric components, but the energy sources we have to power them are not integrated in the circuits because they take up too much space. We are therefore trying to design micro-batteries which can be integrated within the circuits like other technological building blocks. They are highly anticipated for the development of connected devices, including a large number of wearable applications (smart textiles for example), medical devices, etc.

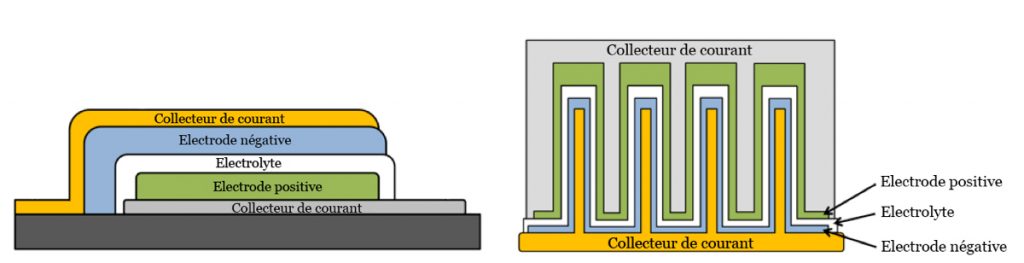

TD: A battery is composed of three elements: two electrodes and an electrolyte separating them. In the case of micro-batteries, it is essentially the contact surface between the electrodes and the electrolyte that determines storage performances: the greater the surface, the better the performance. But in decreasing the size of batteries, and therefore, the electrodes and the electrolyte, there comes a point when the contact surface is too small and battery performance is decreased.

TD: One solution is to transition from 2D geometry in which the two electrodes are thin layers separated by a third thin electrolyte layer, to a 3D structure. By using an architecture consisting of columns or tubes which are smaller than a micrometer, covered by the three components of the battery, we can significantly increase contact surfaces (see illustration below). We are currently able to produce this type of structure on the micrometric scale and we are working on reaching the nanometric scale by using titanium nanotubes.

On the left, a battery with a 2D structure. On the right, a battery with a 3D structure: the contact surface between the electrodes and the electrolyte has been significantly increased.
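A back-of-the-envelope calculation shows why a 3D architecture increases the contact surface. The sketch below assumes an idealized geometry that is not described in the interview: a flat layer covered by a square array of identical cylindrical pillars, each adding its side wall to the surface. The dimensions are hypothetical.

```python
import math


def area_gain(radius_um, height_um, pitch_um):
    """Ratio of 3D contact surface to flat footprint for a square pillar array.

    Each pillar adds its lateral surface (2 * pi * r * h); its top simply
    replaces the patch of flat surface it stands on. One pillar occupies a
    pitch x pitch cell, so the gain is 1 + 2*pi*r*h / pitch**2.
    """
    if pitch_um < 2 * radius_um:
        raise ValueError("pillars would overlap: pitch must be at least 2 * radius")
    return 1.0 + 2 * math.pi * radius_um * height_um / pitch_um ** 2


# Hypothetical sub-micrometer pillars: 50 nm radius, 1 µm tall, 200 nm apart.
gain = area_gain(radius_um=0.05, height_um=1.0, pitch_um=0.2)
```

With these assumed dimensions the contact surface is close to nine times that of a flat layer with the same footprint, and the gain grows as the pillars get taller or more densely packed.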

TD: Let’s take a look at the example of a low battery. One of the electrodes is composed of lithium, nickel, manganese and oxygen. When you charge this battery by plugging it in, for example, the additional electrons set off an electrochemical reaction which frees the lithium from this electrode in the form of ions. The lithium ions migrate through the electrolyte and insert themselves into the nanotubes which make up the other electrode. When all the nanotube sites which can hold lithium have been filled, the battery is charged. During the discharging phase, a spontaneous electrochemical reaction is produced, freeing the lithium ions from the nanotubes toward the nickel-manganese-oxygen electrode thereby generating the desired current.

TD: When a battery is working, great structural modifications take place; the materials swell and shrink in size due to the reversible insertion of lithium ions. And I’m not talking about small variations in size: the size of an electrode can become eight times larger in the case of 2D batteries which use silicon! Nanotubes provide a way to reduce this phenomenon and therefore help prolong the lifetime of these batteries. In addition, we are also carrying out research on electrolytes based on self-repairing polymers. One of the consequences of this swelling is that the contact interfaces between the electrodes and the electrolyte are altered. With an electrolyte that repairs itself, the damage will be limited.

TD: One of the key issues for microelectronic components is flexibility. Batteries are no exception to this rule, and we would like to make them stretchable in order to meet certain requirements. However, the new lithium batteries we are discussing here are not yet stretchable: they fracture when subjected to mechanical stress. We are working on making the structure stretchable by modifying the geometry of the electrodes. The idea is to have a spring-like behavior: coupled with a self-repairing electrolyte, after deformation, batteries return to their initial position without suffering irreversible damage. We have a patent pending for this type of innovation. This could represent a real solution for making autonomous electronic circuits both flexible and stretchable, in order to satisfy a number of applications, such as smart electronic textiles.

This article is part of our dossier Digital technology and energy: inseparable transitions!

“Woe is me! My battery is dead!” So goes the thinking of dismayed users everywhere when they see the battery icon on their mobile phones turn red. Admittedly, smartphone battery life is a rather sensitive subject. Rare are those who use their smartphones for the normal range of purposes — as a telephone, for web browsing, social media, streaming videos, etc. — whose batteries last longer than 24 hours. Extending battery life is a real challenge, especially in light of the emergence of 5G, which will open the door for new energy-intensive uses such as ultra HD streaming or virtual reality, not to mention the use of devices as data aggregators for the Internet of Things (IoT). The Next Generation Mobile Networks Alliance (NGMN) has issued a recommendation to extend mobile phone battery life to three days between charges.

There are two major approaches possible in order to achieve this objective: develop a new generation of batteries, or find a way for smartphones to consume less battery power. In the laboratories of Télécom ParisTech, Maurice Gagnaire, a researcher in the field of cloud computing and energy-efficient data networks, is exploring the second option. “Mobile devices consume a great amount of energy,” he says. “In addition to having to carry out all the computations for the applications being used, they are also constantly working on connectivity in the background, in order to determine which base station to connect to and the optimal speed for communication.” The solution being explored by Maurice Gagnaire and his team is based on reducing smartphones’ energy consumption for computations related to applications. The scientists started out by establishing a hierarchy of applications according to their energy demands as well as to their requirements in terms of response time. A tool used to convert an audio sequence into a written text, for example, does not present the same constraints as a virus detection tool or an online game.

Once they had carried out this first step, the researchers were ready to tackle the real issue — saving energy. To do so, they developed a mobile cloud computing solution in which the most frequently-used and energy-consuming software tools are supposed to be available in nearby servers, called cloudlets. Then, when a telephone has to carry out a computation for one of its applications, it offloads it to the cloudlet in order to conserve battery power. Two major tests determine whether to offload the computation. The first one is based on an energy assessment: how much battery life will be gained? This depends on the effective capacity of the radio interface at a particular location and time. The second test involves quality expectations for user experience: will use of the application be significantly impacted or not?
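The two tests can be pictured with a minimal sketch. It is not the researchers' implementation: the `Task` fields, the constant radio power, and the rule that offloading costs the phone only the energy spent transmitting are all simplifying assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Task:
    # All fields are hypothetical quantities chosen for this illustration.
    local_energy_j: float   # energy the phone would spend computing locally (joules)
    upload_bits: float      # data that must be sent to the cloudlet (bits)
    remote_time_s: float    # computation time on the cloudlet (seconds)
    max_response_s: float   # longest response time the user will tolerate (seconds)


def should_offload(task: Task, link_bps: float, radio_power_w: float) -> bool:
    """Offload only if it saves energy AND keeps the response time acceptable."""
    # Test 1: energy. The cost of offloading is assumed to be the radio
    # transmission alone, which depends on the current link capacity.
    transfer_time_s = task.upload_bits / link_bps
    offload_energy_j = radio_power_w * transfer_time_s
    saves_energy = offload_energy_j < task.local_energy_j

    # Test 2: user experience. Transfer plus remote computation must fit
    # within the tolerated response time.
    meets_expectations = transfer_time_s + task.remote_time_s <= task.max_response_s

    return saves_energy and meets_expectations


speech_to_text = Task(local_energy_j=5.0, upload_bits=8e6,
                      remote_time_s=0.2, max_response_s=2.0)
print(should_offload(speech_to_text, link_bps=20e6, radio_power_w=1.0))
```

With the same task, a slow link fails the energy test, while a moderately fast one can pass the energy test and still fail the response-time test, which is why both checks are needed.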

Together, these two tests form the basis for the MAO (Mobile Applications Offloading) algorithm developed by Télécom ParisTech. The difficulty in its development arose from its interdependence with such diverse aspects as hardware architecture of mobile phone circuitry, protocols used for radio interface, and factoring in user mobility. In the end, “the principle is similar to what you find in airports where you connect to a local server located at the Wi-Fi spot,” explains Maurice Gagnaire. But in the case of energy savings, the service is intended to be “universal” and not linked to a precise geographic area as is the case for airports. In addition to offering browsing or tourism services, cloudlets would host a duplicate of the most widely-used applications. When a telephone has low battery power, or when it is responding to great user demand for several applications, the MAO algorithm makes it possible to autonomously offload computations from the mobile device to cloudlets.

Through a collaboration with researchers from Arizona State University in Tempe (USA), the theoretical scenarios studied in Paris were implemented in real-life situations. The preliminary results show that for the most demanding applications, such as voice recognition, a 90% reduction in energy consumption can be obtained when the cloudlet is located at the base of the antenna in the center of the cell. The experiments underscored the great impact of the self-learning function of the database linked to the voice recognition tool on the performance of the MAO algorithm.

Extending the use of the MAO algorithm to a broad range of applications could expand the scale of the solution. In the future, Maurice Gagnaire plans to explore the externalization of certain tasks carried out by graphics processors (or GPUs) in charge of managing smartphones’ high-definition touchscreens. Mobile game developers should be particularly interested in this approach.

More generally, Maurice Gagnaire’s team now hopes to collaborate with a network operator or equipment manufacturer. A partnership would provide an opportunity to test the MAO algorithm and cloudlets on a real use case and therefore determine the large-scale benefits for users. It would also offer operators new perspectives for next-generation base stations, which will have to be created to accompany the development of 5G planned for 2020.


20,000: the average number of relay antennae owned by a mobile operator in France. Also called “base stations,” they represent 70% of the energy bill for telecommunications operators. Since each station transmits with a power of approximately 1 kW, reducing their demand for electricity is a crucial issue for operators in order to improve the energy efficiency of their networks. To achieve this objective, the sector is currently focusing more on technological advances in hardware than on the software component. Thanks to the latest advances, a recent base station consumes significantly less energy for data throughput that is nearly a hundred times higher. But new algorithms that promise energy savings are being developed, including some which simply involve… switching off base stations at certain scheduled times!

This solution may seem radical since switching off a base station in a cellular network means potentially preventing users within a cell from accessing the service. Loutfi Nuaymi, a researcher in telecommunications at IMT Atlantique, is studying this topic, in collaboration with Orange. He explains that, “base stations would only be switched off during low-load times, and in urban areas where there is greater overlap between cells.” In large cities, switching off a base station from 3 to 5am would have almost no consequence, since users are likely to be located in areas covered by at least one other base station, if not more.

Here, the role of algorithms is twofold. First of all, they would manage the switching off of antennas when user demand is lowest (at night) while maintaining sufficient network coverage. Secondly, they would gradually switch the base stations back on when users reconnect (in the morning) and up to peak hours during which all cells must be activated. This technique could prove to be particularly effective in saving energy since base stations currently remain switched on at all times, even during off-peak hours.
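The switch-off step can be illustrated with a simple greedy sketch. It is only one possible approach under strong assumptions, not the algorithm studied at IMT Atlantique: the coverage map, the load figures, and the threshold are hypothetical inputs, and "sufficient coverage" is reduced to every zone being served by at least one active station.

```python
def plan_switch_off(coverage, load, threshold):
    """Greedily pick low-load stations to put on standby.

    coverage:  dict mapping station name -> set of zones it covers
    load:      dict mapping station name -> current load (0.0 to 1.0)
    threshold: only stations with a load below this value are candidates
    """
    all_zones = set().union(*coverage.values())
    active = set(coverage)
    switched_off = []

    # Consider the least-loaded stations first.
    candidates = sorted((s for s in coverage if load[s] < threshold),
                        key=lambda s: load[s])
    for station in candidates:
        remaining = active - {station}
        still_covered = set().union(*(coverage[s] for s in remaining))
        # Put the station on standby only if no zone loses coverage.
        if still_covered == all_zones:
            active = remaining
            switched_off.append(station)
    return switched_off


coverage = {"A": {1, 2}, "B": {2, 3}, "C": {1, 3}, "D": {4}}
night_load = {"A": 0.05, "B": 0.02, "C": 0.50, "D": 0.01}
print(plan_switch_off(coverage, night_load, threshold=0.1))
```

In this toy network, station D is nearly idle but is the only one covering zone 4, so it stays on, which mirrors the point that standby is only safe where cells overlap.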

Loutfi Nuaymi points out that, “the use of such algorithms for putting base stations on standby mode is taking time to reach operators.” Their reluctance is understandable, since interruptions in service are by their very definition the greatest fear of telecom companies. Today, certain operators could put one base station out of ten on standby mode in dense urban areas in the middle of the night. But the IMT Atlantique researcher is confident in the quality of his work and asserts that it is possible “to go even further, while still ensuring high quality service.”

Gradually switching base stations on or off in the morning and at night according to user demand is an effective energy-saving solution for operators.

While energy management algorithms already allow for significant energy savings in 4G networks, their contributions will be even greater over the next five years, with 5G technology leading to the creation of even more cells to manage. The new generation will be based on a large number of femtocells covering areas as small as ten meters — in addition to traditional macrocells with a one-kilometer area of coverage.

Femtocells consume significantly less energy, but given the large number of these cells, it may be advantageous to switch them off when not in use, especially since they are not used as the primary source of data transmission, but rather to support macrocells. Switching them off would not in any way prevent users from accessing the service. Loutfi Nuaymi describes one way this could work. “It could be based on a system in which a user’s device will be detected by the operator when it enters a femtocell. The operator’s energy management algorithm could then calculate whether it is advantageous to switch on the femtocell, by factoring in, for example, the cost of start-up or the availability of the macrocell. If it is not overloaded, there is no reason to switch on the femtocell.”
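The decision described in this quote can be written as a small rule. This is a hedged sketch only: the parameter names are invented for illustration, and comparing a single "expected benefit" figure against the start-up cost is an assumption about how the trade-off might be expressed.

```python
def should_switch_on_femtocell(macro_load, macro_capacity,
                               startup_cost, expected_benefit):
    """Decide whether to wake a femtocell when a device enters its area.

    macro_load / macro_capacity: current and maximum load of the macrocell
    startup_cost / expected_benefit: hypothetical figures in the same unit
    """
    # If the macrocell is not overloaded, it can serve the user on its own.
    if macro_load < macro_capacity:
        return False
    # Otherwise, switch on only if the benefit outweighs the start-up cost.
    return expected_benefit > startup_cost


print(should_switch_on_femtocell(macro_load=0.6, macro_capacity=1.0,
                                 startup_cost=10.0, expected_benefit=50.0))
```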

The value of these algorithms lies in their capacity to calculate cost/benefit ratios according to a model which takes account of a maximum number of parameters. They can therefore provide autonomy, flexibility, and quick performance in base station management. Researchers at IMT Atlantique are building on this decision-making support principle and going a step further than simply determining if base stations should be switched on or put on standby mode. In addition to limiting energy consumption, they are developing other algorithms for optimizing the energy mix used to power the network.

They begin with the observation that renewable sources of energy are less expensive, and that if operators equip themselves with solar panels or wind turbines, they must also store the energy produced to make up for periodic variations in sunshine and the sporadic nature of wind. So, how can an operator decide between using stored energy, energy supplied by its own solar or wind facilities, or energy from the traditional grid, which may rely on a varying degree of smart technology? Loutfi Nuaymi and his team are also working on use cases related to this question and have joined forces with industrial partners to test and develop algorithms which could provide some answers.

“One of the very concrete questions operators ask is what size battery is best to use for storage,” says the researcher. “Huge batteries cost as much as what operators save by replacing fossil fuels with renewable energy sources. But if the batteries are too small, they will have storage problems. We’re developing algorithmic tools to help operators make these choices, and determine the right size according to their needs, type of battery used, and production capacity.”
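The sizing trade-off can be sketched with a toy simulation. It is an illustration under stated assumptions rather than the team's tool: the hourly production and demand profiles, the per-kWh prices, the lossless battery, and the list of candidate sizes are all hypothetical.

```python
def grid_energy_needed(production, demand, capacity):
    """Energy bought from the grid (kWh) for a given battery capacity (kWh)."""
    stored = 0.0
    grid = 0.0
    for produced, consumed in zip(production, demand):
        net = produced - consumed
        if net >= 0:
            # Store the surplus, up to the battery capacity.
            stored = min(capacity, stored + net)
        else:
            # Cover the deficit from the battery first, then from the grid.
            draw = min(stored, -net)
            stored -= draw
            grid += -net - draw
    return grid


def best_battery_size(production, demand, candidates,
                      battery_cost_per_kwh, grid_cost_per_kwh):
    """Pick the candidate capacity with the lowest battery-plus-grid cost."""
    def total_cost(capacity):
        return (capacity * battery_cost_per_kwh
                + grid_energy_needed(production, demand, capacity) * grid_cost_per_kwh)
    return min(candidates, key=total_cost)


production = [3, 3, 0, 0]   # kWh per period, e.g. sunny then dark
demand = [1, 1, 1, 1]       # kWh per period for one base station
print(best_battery_size(production, demand, [0, 1, 2, 4],
                        battery_cost_per_kwh=0.1, grid_cost_per_kwh=1.0))
```

When storage is cheap relative to grid energy, the sketch settles on the smallest battery that covers the dark periods; when storage is expensive, it can pick no battery at all, which is the tension the researcher describes.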

Another question: is it more profitable to equip each base station with its own solar panel or wind turbine, or rather to create an energy farm that supplies power to several antennas? The question is still being explored, but preliminary findings suggest that neither arrangement is clearly preferable when it comes to solar panels. Wind turbines, however, are imposing objects which are sometimes refused by neighbors, making it preferable to group them together.

Once this type of constraint has been ruled out, operators must calculate the maximum proportion of renewable energies to include in the energy mix with the least possible consequences on quality of mobile service. Sunshine and wind speed are sporadic by nature. For an operator, a sudden drop in production at a wind or solar power farm could have direct consequences on network availability — no energy means no working base stations.

Loutfi Nuaymi admits that these limitations reveal the complexity of developing algorithms: “We cannot simply consider the cost of the operators’ energy bills. We must also take account of the minimum proportion of renewable energies they are willing to use so that their practices correspond to consumer expectations, the average speed of distribution to satisfy users, etc.”

Results from research in this field show that in most cases, the proportion of renewable energies used in the energy mix can be raised to 40%, with only an 8% drop in quality of service as a result. In off-peak hours, this represents only a slight deterioration and does not have a significant effect on network users’ ability to access the service.

And even if a drastic reduction in quality of service should occur, Loutfi Nuaymi has some solutions. “We have worked on a model for a mobile subscription that delays calls if the network is not available. The idea is based on the principle of overbooking planes. Voluntary subscribers, who of course do not have to choose this subscription, accept the risk of the network being temporarily unavailable and, in return, receive financial compensation if it affects their use.”

Although this new subscription format is only one possible solution for operators, and is still a long way from becoming reality, it shows how the field of telecommunications may be transformed in response to energy issues. Questions have arisen at times about the outlook for mobile operators. Given the energy consumed by their facilities and the rise of smart grids which make it possible to manage both self-production and the resale of electricity, these digital players could, over time, come to play a significant role in the energy sector.

“It is an open-ended question and there is a great deal of debate on the topic,” says Loutfi Nuaymi. “For some, energy is an entirely different line of business, while others see no reason why they should not sell the energy collected.” The controversy could be settled by new scientific studies in which the researcher is participating. “We are already performing technical-economic calculations in order to study operators’ prospects.” The highly-anticipated results could significantly transform the energy and telecommunications markets.
