OISPG: Promoting open innovation in Europe

On January 1st, 2017, Pierre Simay was appointed as the new OISPG Rapporteur. This group of experts from the European Commission supports and promotes open innovation practices, particularly in the context of the Horizon 2020 program.

“Today’s companies can no longer innovate alone. They exist in innovation ecosystems in which the collaborative model is prioritized,” explains Pierre Simay, Coordinator for International Relations at IMT. Open innovation is a way of viewing research and innovation strategy as being open to external contributions through collaboration with third parties.

The Horizon 2020 framework program pools all the European Union funding for research and innovation. The program has a budget of nearly €80 billion for a 7-year period (2014-2020). Each year, calls for proposals are published to finance research and innovation projects (individual and collaborative projects). The European Commission services in charge of Horizon 2020 have established external advisory groups to advise them in the preparation of these calls. Since 2010, IMT has been actively involved in the expert group on open innovation, OISPG (Open Innovation Strategy and Policy Group). Pierre Simay, the recently appointed OISPG Rapporteur, presents this group and the role played by IMT within it.

What is the OISPG?

Pierre Simay: OISPG is an expert group attached to DG CONNECT, the European Commission’s Directorate General for Information and Communication Technology. Open innovation has gained ground over the past few years, with the appearance of more collaborative and open models. These models are based, for example, on user participation in research projects and the development of living labs in Europe (ENoLL network). I should also mention the new research and innovation ecosystems that have emerged around platforms and infrastructures. This is the case for the European “Fiware” initiative which, by making platforms of copyright-free software building blocks available to developers and SMEs, seeks to facilitate the creation and roll-out of the internet applications of the future in what are referred to as the vertical markets (healthcare, energy, transportation, etc.).

Open innovation covers several concepts and practices (joint laboratories, collaborative projects, crowdsourcing, user innovation, entrepreneurship, hackathons, technological innovation platforms, and Fablabs) which are still relatively new and require increasingly cross-sectoral collaborative efforts. Take farms of the future, for example: precision agriculture requires cooperation between farms and companies in the ICT sector (robotics, drones, satellite imagery, sensors, big data…) for the deployment and integration of agronomic information systems. OISPG was created in response to these kinds of challenges.

Our mission focuses on two main areas. The first is to advise the major decision-makers of the European Commission on open innovation matters. The second is to encourage major private and public European stakeholders to adopt open innovation, particularly through the broad dissemination of the practical examples and best practices featured in the OISPG reports and publications. To accomplish its mission, OISPG is organized around a panel of 20 European experts from industry (Intel, Atos Origin, CGI, Nokia, Mastercard…), the academic world (Amsterdam University of Applied Sciences, ESADE, IMT…), and the institutional sector (DG CONNECT, the European Committee of the Regions, ENoLL, ELIG…).

What does your role within this group involve?

PS: My role is to promote the group’s work and maintain links with the European Commission experts who question us about current issues related to the Horizon 2020 program and who seek an external perspective on open innovation and its practices. Examples include the policy being established for digital innovation hubs, and reflections on blockchain technology and the collaboration issues it raises. OISPG must also propose initiatives to improve the definition of collaborative approaches and the assessment criteria used by the Commission in financing Horizon 2020 projects. In Europe, we still suffer from cumbersome and rigid administrative procedures, which are not always compatible with the nature of innovation and its current demands: speed and flexibility.

My role also includes supporting DG CONNECT in organizing its annual conference on open innovation (OI 2.0). This year, it will be held on June 13 and 14 in Cluj-Napoca, Romania. During the conference, political decision-makers, professionals, theorists and practitioners will be able to exchange ideas and work together on the role and impacts of open innovation.

What issues/opportunities exist for IMT as a member of this group?

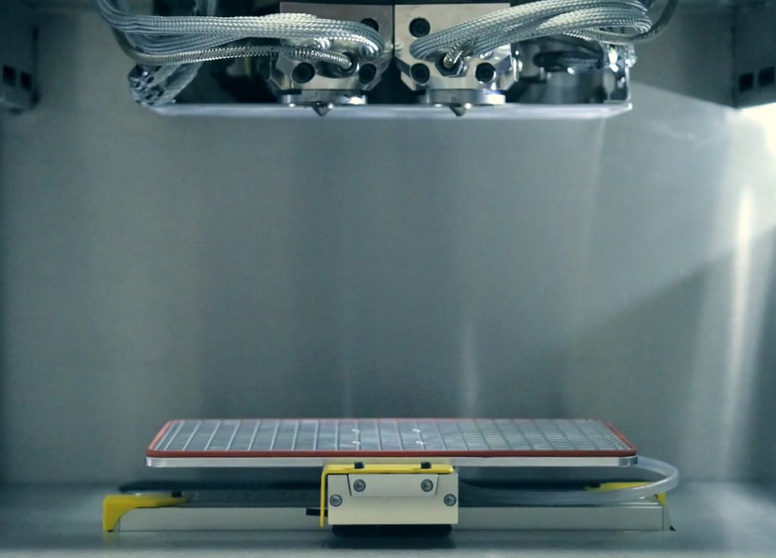

PS: IMT is actively involved in open innovation, with major programs such as those of the Fondation Télécom (FIRST program), our Carnot institutes and our experimentation platforms (for example, the TeraLab for Big Data). Our participation in OISPG positions us at the heart of European collaborative innovation issues and enables us to meet with political decision-makers and numerous European research and innovation stakeholders in order to create partnerships and projects. It also allows us to promote our expertise internationally.