How the SEAS project is redefining the energy market

The current energy transition has brought with it new modes of energy production and consumption. Coordinated by Engie, the European SEAS project aims to foster these changes to create a more responsible energy market. SEAS is seeking to invent the future of energy usage by making it easier for new economic stakeholders to enter the market and redistribute energy, and by increasing the energy management options available to individuals. These ideas have been made possible through contributions from researchers at several IMT graduate schools (IMT Atlantique, Mines Saint-Étienne, Télécom ParisTech and Télécom SudParis). Among these contributions are two innovations led by IMT Atlantique and Mines Saint-Étienne.

“An increasing number of people are installing their own energy production tools, such as solar panels. This breaks with the traditional energy model of producer-distributor-consumer.” Redefining the stakeholders in the energy chain, as noted by Guillaume Habault, a computer science researcher at IMT Atlantique, is at the heart of the issue addressed by the Smart Energy Aware Systems (SEAS) project. The project was completed in December, after three years of research as part of the European ITEA program. It brought together 34 partners from 7 countries, including IMT in France. On 11 May, SEAS won the ITEA Award of Excellence in recognition of the high quality of its results.

The project is especially promising because it is not limited to individuals who want to produce their own energy with solar panels. New installations such as wind turbines provide new sources of energy on a local scale. However, they complicate matters for stakeholders in the chain such as network operators: the energy these installations produce is intermittent, since it depends on the seasons and the weather. Yet it is important to be able to forecast energy production in the very short term to ensure that every consumer is supplied. Overestimating the production of a wind farm or of a neighborhood equipped with solar panels means risking not having enough energy to cover the shortfall, and ultimately causing power cuts for residents. “Conversely, underestimating production means having to store the surplus energy or dispatch it elsewhere. Poor planning can create problems in the network, and even reduce the lifespan of some equipment,” the researcher warns.

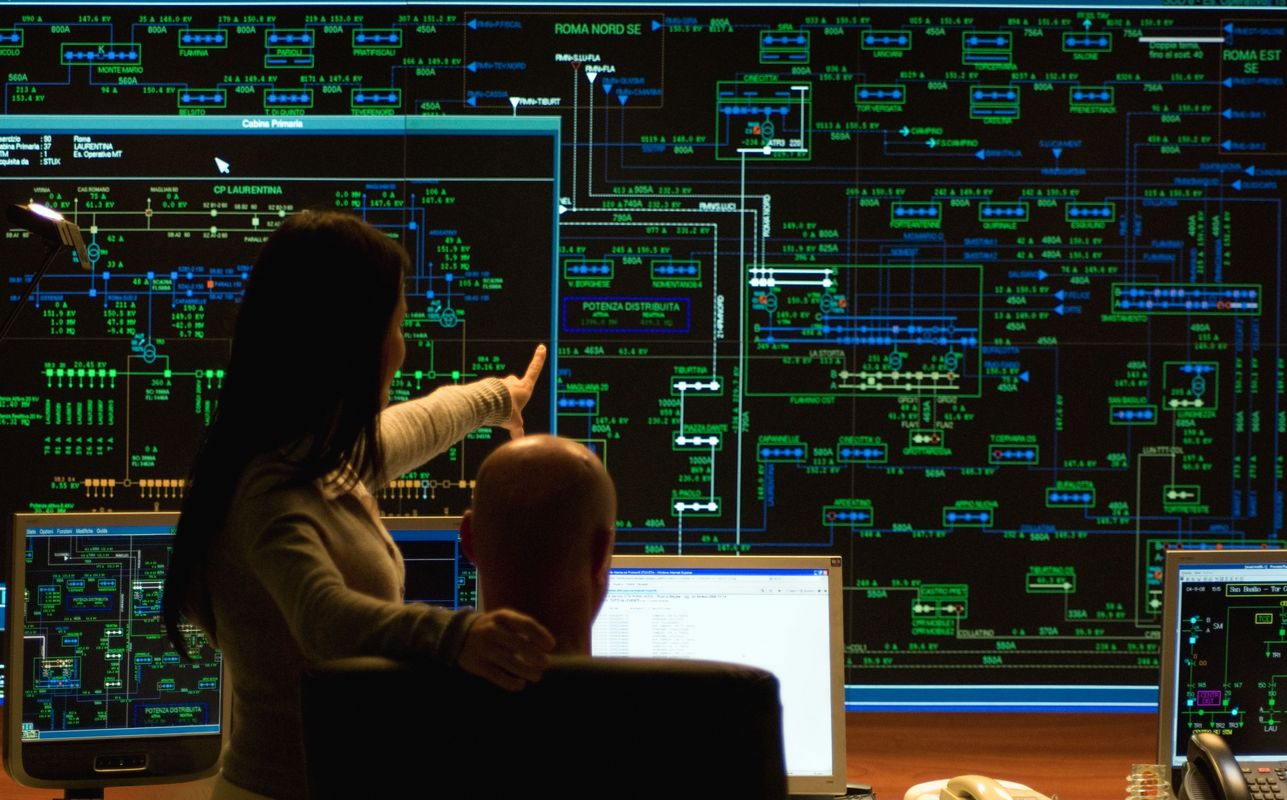

An architecture for smart energy grid management

Among the outcomes of the SEAS project is a communication architecture capable of gathering, locally and almost in real time, all the information from the different modes of production and consumption. “The ideal goal is to be able to inform the network in 1-hour segments: with this length of time, we avoid collecting information about user consumption that is excessively precise, while still anticipating cases of over- or under-consumption,” explains Guillaume Habault, the creator of the architecture.
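The article does not describe how the architecture actually builds these segments. As a minimal sketch, assuming a home reports hypothetical `(timestamp, produced_kwh, consumed_kwh)` readings, summing them into one-hour buckets shows how hourly granularity hides appliance-level detail while still revealing whether the home is in surplus or deficit. The data format and the function name `hourly_net_energy` are illustrative assumptions, not part of SEAS.

```python
from collections import defaultdict
from datetime import datetime


def hourly_net_energy(readings):
    """Aggregate fine-grained readings into 1-hour net energy segments.

    readings: iterable of (timestamp, produced_kwh, consumed_kwh) tuples.
    This format is a hypothetical assumption; the article does not specify
    the messages exchanged in the SEAS architecture.
    Returns {start_of_hour: net_kwh}, sorted by hour, where a positive value
    means a surplus and a negative value means a deficit.
    """
    buckets = defaultdict(float)
    for ts, produced, consumed in readings:
        # Truncate the timestamp to the start of its hour.
        hour = ts.replace(minute=0, second=0, microsecond=0)
        buckets[hour] += produced - consumed
    return dict(sorted(buckets.items()))


if __name__ == "__main__":
    # Hypothetical readings for a single home.
    sample = [
        (datetime(2024, 1, 15, 12, 5), 0.5, 0.25),
        (datetime(2024, 1, 15, 12, 40), 0.75, 0.25),
        (datetime(2024, 1, 15, 18, 15), 0.0, 1.5),
    ]
    for hour, net in hourly_net_energy(sample).items():
        print(hour.isoformat(), f"{net:+.2f} kWh")
```

Only the hourly totals leave the function, so a network operator receiving them could see a midday surplus and an evening deficit without learning when each individual appliance was used.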

For individuals, SEAS may take the form of a device that transmits information about their consumption and production to their electricity provider. “This type of data will allow people to optimize their power bills,” the researcher explains. “With perfect knowledge of local energy production and demand at a given moment, residents will be able to tell whether they should store the energy they produce or redistribute it on the network. With information from the network, they could also choose the most economical time to recharge their electric car, based on electricity prices, for instance.”
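Choosing the most economical time to recharge a car can be sketched as a search for the cheapest block of consecutive hours in a price forecast. This is a minimal illustration, not a SEAS algorithm. It assumes a hypothetical hourly price list in euro cents per kWh (integers avoid floating-point drift), a constant charging power, and a hypothetical function name, `cheapest_charging_window`.

```python
def cheapest_charging_window(prices, hours_needed):
    """Find the cheapest run of consecutive hours to charge in.

    prices: hypothetical hourly prices (e.g. euro cents per kWh); index = hour.
    hours_needed: number of consecutive hours the car must charge.
    Assumes constant charging power, so the cost is proportional to the sum
    of the prices in the window. Returns (start_hour_index, summed_price).
    """
    if not 0 < hours_needed <= len(prices):
        raise ValueError("hours_needed must be between 1 and len(prices)")
    window = sum(prices[:hours_needed])
    best_start, best_cost = 0, window
    for start in range(1, len(prices) - hours_needed + 1):
        # Slide the window by one hour: add the new hour, drop the oldest one.
        window += prices[start + hours_needed - 1] - prices[start - 1]
        if window < best_cost:
            best_start, best_cost = start, window
    return best_start, best_cost


if __name__ == "__main__":
    forecast = [30, 28, 25, 20, 18, 22, 35, 40]  # hypothetical prices
    start, cost = cheapest_charging_window(forecast, 3)
    print(f"Start charging at hour {start} (summed price {cost})")
```

A sliding window keeps the search linear in the number of hours. A real system would also have to account for the car's departure time and for prices that change after the forecast is made.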

These data on the current state of a sub-network point to the emergence of new stakeholders known as “flexibility operators.” One reason is that optimizing your consumption by adapting the way you use each appliance in the house requires specific equipment and takes time. While it is easy to predict that energy will be more expensive at times of peak demand, such as in the evenings, it is harder to anticipate the price of electricity based on how hard the wind is blowing at a wind farm several dozen kilometers away. It is likely that, given suitable equipment, some individuals will choose to delegate the optimization of their energy consumption to third-party companies.

The prospects for intelligent energy management offered by SEAS go beyond the individual. If the inhabitants of a house are away on holiday, couldn’t the energy produced by their solar panels be used to supply the neighborhood, taking pressure off a power plant located a hundred kilometers away? Another example: refrigerators operate periodically. They do not cool constantly, but at intervals. In a neighborhood or a city, it would therefore be possible to intelligently shift the start-up times of a group of these appliances outside peak hours, so that an already heavily loaded network can concentrate on supplying the heaters people switch on when they return home from work.
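The benefit of staggering refrigerator cycles can be illustrated with a deliberately simplified model. The article does not say how SEAS schedules appliances. This sketch assumes that each refrigerator runs its compressor for a fixed `on_min` minutes in every `period_min`-minute cycle, whereas real cycles vary with temperature and door openings. The helper names are hypothetical. The code compares the peak number of compressors running at once when every appliance starts together with the peak when start times are spread evenly across the cycle.

```python
def staggered_offsets(n_appliances, period_min):
    """Spread start offsets (in minutes) evenly over one cooling cycle."""
    return [i * period_min // n_appliances for i in range(n_appliances)]


def peak_simultaneous(offsets, period_min, on_min):
    """Return the largest number of appliances running during the same minute.

    Hypothetical duty-cycle model: each appliance runs for on_min minutes
    starting at its offset, then repeats every period_min minutes.
    """
    if not 0 < on_min <= period_min:
        raise ValueError("on_min must be between 1 and period_min")
    load = [0] * period_min  # appliances running at each minute of the cycle
    for offset in offsets:
        for t in range(on_min):
            load[(offset + t) % period_min] += 1
    return max(load)


if __name__ == "__main__":
    n, period, on = 6, 60, 20  # hypothetical: 6 fridges, 20 min on per hour
    print("All start together:", peak_simultaneous([0] * n, period, on))
    print("Staggered starts:  ", peak_simultaneous(staggered_offsets(n, period), period, on))
```

Under these assumptions, coordination lowers the peak from six compressors running at once to two, while each refrigerator still cools for the same total time.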

Companies are particularly keen on these types of services. Load management allows them to temporarily switch off machines that are not essential to their service in exchange for a payment to those who are in charge of this load management. The SEAS architecture incorporates communication security to ensure trust between stakeholders. In particular, personal data are decentralized: each party owns its own data and can decide not only whether to give a flexibility operator access to them, but also their granularity and level of use. “An individual will have no trouble accepting that their refrigerator cools at different times from usual, but not that their television gets cut off while they are watching it,” says Guillaume Habault. “And companies will want even more control over whether machines are switched off or on.”

Objects that speak the same language

To achieve such efficient management of electricity grids, the SEAS project turned to the semantic web expertise of Mines Saint-Étienne. “The semantic web is a set of principles and formalisms intended to allow machines to exchange knowledge on the web,” explains Maxime Lefrançois, lead researcher for the development of the knowledge model in the SEAS project. This knowledge model is the pivot language that makes objects interoperable in the context of energy network management.

“Up to now, each manufacturer had its own way of describing the world, and the machines made by each company evolved in their own worlds. With SEAS, we used the principles and formalisms of the semantic web to provide machines with a vocabulary allowing them to ‘talk energy,’ to use open data available elsewhere on the web, or to use innovative optimization algorithms on the web,” says the researcher. In other words, SEAS proposes a common language that enables every entity to interpret a given message in the same way. Concretely, this involves giving each object a URL that can be consulted to obtain information about it, in particular what it can do and how to communicate with it. Maxime Lefrançois adds, “We also contributed to the principles and formalisms of the semantic web with a series of projects aimed at making it more accessible to companies and machine designers, so that they could adapt their existing machines and web services to the SEAS model at a lower cost.”

Returning to a previous example, using this extension of the web makes it possible to adapt two refrigerators of different brands so that they can communicate, agree on the way they operate, and avoid creating a consumption peak by starting up at the same time. In terms of services, this will allow flexibility operators to create solutions without being limited by the languages specific to each brand. As for manufacturers, it is an opportunity for them to offer household energy management solutions that go beyond simple appliances.

Thanks to the semantic web, communication between machines can be automated more easily, improving the energy management service offered to the customer. “All these projects point to a large-scale deployment,” says Maxime Lefrançois. Management can therefore be envisioned at several levels: first in households, to coordinate appliances; next in neighborhoods, to redistribute the energy produced by each resident according to their neighbors’ needs; and finally on a regional or even national scale, to coordinate load management across overall consumption, relieving networks during extremely cold weather, for example. The SEAS project could therefore change things on many levels, offering new and more responsible modes of energy consumption.

This article is part of our dossier Digital technology and energy: inseparable transitions!

SEAS wins an “ITEA Award of Excellence for Innovation and Business impact”

Coordinated by Engie, with IMT as one of its main academic partners, SEAS won an award of excellence on 11 May at the Digital Innovation Forum 2017 in Amsterdam. The award recognizes the relevance of the innovation in terms of its impact on industry.
