From the vestiges of the past to the narrative: reclaiming time to remember together

The 21st century is marked by a profound change in our relationship with time, which is now perceived solely in terms of acceleration, speed, change and urgency. At Mines Nantes, the sociologist Sophie Bretesché has positioned herself at the interfaces between the past and the future, where memory and oblivion color our view of the present. In settings undergoing change, such as regions and organizations, she examines vestiges: remnants of the past that offer a different perspective on the dialectic of oblivion and memory. She analyzes the essential role of collective memory and shared narrative in preserving identity during organizational change and technological transformation.

A modern society marked by fleeting time

Procrastination Day, Slowness Day, getting reacquainted with boredom… many attempts have been made to slow time down and stop it slipping through our fingers. They reflect a relationship with time that has been upended: once governed by the simple rhythms of nature, then by the clock of the industrial era, it is now a combination of acceleration, motion and real time. This transformation is indicative of how modern society functions, where “that which is moving has replaced past experience, the flexible is replacing the established, the transgressive is ousting the transmitted”, observes Sophie Bretesché.

The sociologist starts from a simple observation: the loss of time. What dynamics lie behind this phenomenon, in which work, family and leisure time, the temporal objects that reflect our social practices, are accelerated and compressed?

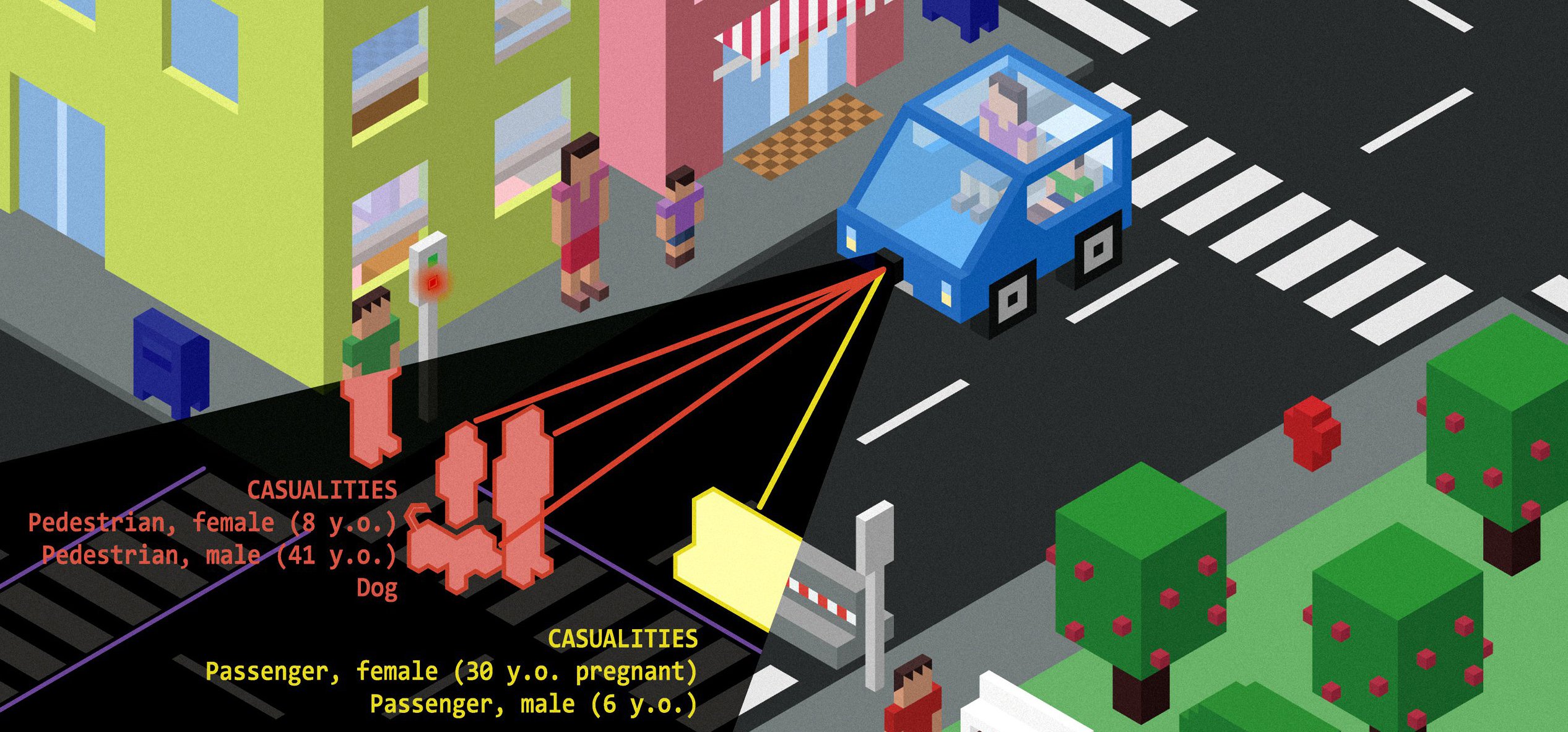

One reason frequently cited for this racing of time is the pervasive presence of new technologies, all arriving in our lives at once, together with the frenetic demand for ever-higher productivity. However, this explanation falls short, because it confuses correlation with causation. The reality is one of constant, cumulative technological and managerial change, which “prevents consolidation of knowledge and experience-building as a sum of knowledge”, explains the researcher, who continues: “Surrounded, in the same unit of time, by components with distinct temporal rhythms and frameworks, the subject is both cut off from his/her past and dispossessed of any ability to conceive the future.”

To understand what is implicitly emerging in reality, namely an unprecedented relationship with historical time and with our memory, the sociologist has adopted the hypothesis that the problem lies not so much in acceleration as in a society’s ability to remember and to forget. “Placing the focus on memory and oblivion”, accepting that “the present remains inhabited by vestiges of our past”, and grasping that “the processes of change produce material vestiges of the past which challenge the constants of the past” are thus all part of a process of regaining control of time.

Study in search of vestiges of the past

“This fleeting time is observed clearly in organizations and regions undergoing changes”, notes Sophie Bretesché, who took three such fields of study as the starting point for her search for evidence. Starting from “that which grates, resists, arouses controversy, fields in which nobody can forget, or remember together”, she searches for vestiges: material, tangible signs of an intersection between the past and the future. Her first two studies bring her into contact with executives faced with information flows that are impossible to regulate, and with an organization whose management structure changed three times in ten years. The sociologist interviews the protagonists and sheds light on the executives’ activities, showing that their professions have nonetheless continued to exist by following alternative paths. A third study, conducted among residents living near a former uranium mine, leads her to witnesses of a bygone era. Waste that local residents once used is now condemned for its inherent risks. These vestiges of the past also belong to a modern era in which risk is the subject of harsh criticism.

In all three cases, the sociological study, conducted over long periods, becomes part of the stories of those involved. While a sociologist usually presents results as a cohesive narrative built on theories and interpretations, a specialist in social change does not reconstruct a story after the fact. Instead, he or she analyzes movement and the ways people in society construct their temporalities, so that the sociological narrative becomes a structure for temporal mediation.

These different field studies demonstrate that it is necessary to “regain time for the sake of time”. This is a social issue: the aim is “to gain meaning, reclaim knowledge and give it meaning based on past experience.” Another finding emerges: behind the outwardly visible movement and repeated change lie constants that tend to be forgotten, such as forms of organization. Moreover, resistance to change, now stigmatized, may after all have virtues, since it expresses a deeply rooted culture based on a collective identity that it would be a shame to deny ourselves.

A narrative built upon a rightful collective memory

This research led Sophie Bretesché to take the helm of the “Emerging risks and technologies: from technological management to social regulation” Chair at Mines Nantes, set up in early 2016. Drawing on ten years of research between the social science and management department and the physics and chemistry laboratories at Mines Nantes, the chair focuses on forms of risk regulation in the energy, environmental and digital sectors. The approach is original in that these questions are no longer considered from a purely scientific perspective, since these problems also affect society.

The social acceptability of nuclear power in different regions shows, for example, that cultural relationships with risk cannot be universally standardized. In Western France, former uranium mines have been rehabilitated for lower-intensity industrial or agricultural use, whereas in the Limousin region they have been placed under moratoriums and their sites are now closed off. These lessons on the relationship with risk are drawn over the long term. In this instance, the original land-ownership structures offer explanations, bringing to light different stories that need to be pieced together into narratives.

Taken in isolation, however, the vestiges of the past recorded during the studies do not yet form shared memories. They remain individual perceptions, fragile because they have not been passed on to the collective. “We remember because those around us help”, the researcher reminds us, continuing: “the narrative is heralded as the search for the rightful memory”. Facing an uncertain future, in “a liquid society diluted in permanent motion”, the construction of collective narratives, as opposed to storytelling, is what allows us to look ahead.

The researcher, who enjoys being at the interfaces of different worlds, takes delight in the moment when the vestiges of the past gradually give way to the narrative and the threads of sometimes discordant stories begin to take on meaning. The resulting embodied narrative is a counterpoint composed from the melodies gathered in the material vestiges of the past: “Accord is finally reached on a shared story”, which in a way becomes a new common good.

With a longstanding interest in the central narratives of the past, Sophie Bretesché has one wish: to convey and share these multiple experiences, times and tools for understanding, these histories of ongoing change, in a variety of forms such as the web documentary or the novel.

Sophie Bretesché is a Research Professor of Sociology at Mines Nantes. Head of the regional chair in “Risks, emerging technologies and regulation”, she is co-director of the NEEDS (Nuclear, Energy, Environment, Waste, Society) program and coordinates the social science section of the CNRS “Uranium Mining Regions” Workshop. Her research encompasses time and technologies, memory and change, professional identities and career pathways. Author of 50 contributions in her field, co-editor of two books, “Fragiles compétences” and “Le nucléaire au prisme du temps”, and author of “Le changement au défi de la mémoire”, published by Presses des Mines, she is also involved in two Executive Master’s programs at the Paris Institute of Political Studies (Leading change and Leadership pathways).

Guillaume Desbrosse, mediating between science and the public