Removing pollutants from our homes

Indoor air is polluted with several volatile organic compounds, some of which are carcinogenic. Frédéric Thévenet, a researcher at Mines Douai, develops solutions for trapping and eliminating these pollutants, and works to improve the tests applied to air-purifying devices.

We spend nearly 90% of our time indoors: at home, at the office, at school, or in our car. Yet the air there is not as clean as we think. It contains a category of substances called volatile organic compounds (VOCs), some of which are harmful. Fighting these VOCs is Frédéric Thévenet’s mission. He is a researcher with the Department of Atmospheric Sciences and Environmental Engineering (SAGE) at Mines Douai, a lab specializing in analytical chemistry and capable of analyzing trace molecules.

Proven carcinogens

VOCs are gaseous organic molecules emitted in indoor environments by construction materials, paint and glue on furniture, cleaning and hygiene products, and even cooking. One molecule is a particular cause for concern: formaldehyde, which is both a proven carcinogen and the VOC found at the highest concentrations. Guideline values (concentration levels that must not be exceeded) exist for formaldehyde, but they are not yet mandatory.

The first way to reduce VOCs is through commonsense measures: limit sources by choosing materials and furniture with low emissions, choose cleaning products carefully and, above all, ventilate frequently with outdoor air. But sometimes this is not enough. This is where Frédéric Thévenet comes into play: he develops solutions for eliminating these VOCs.

Trap and destroy

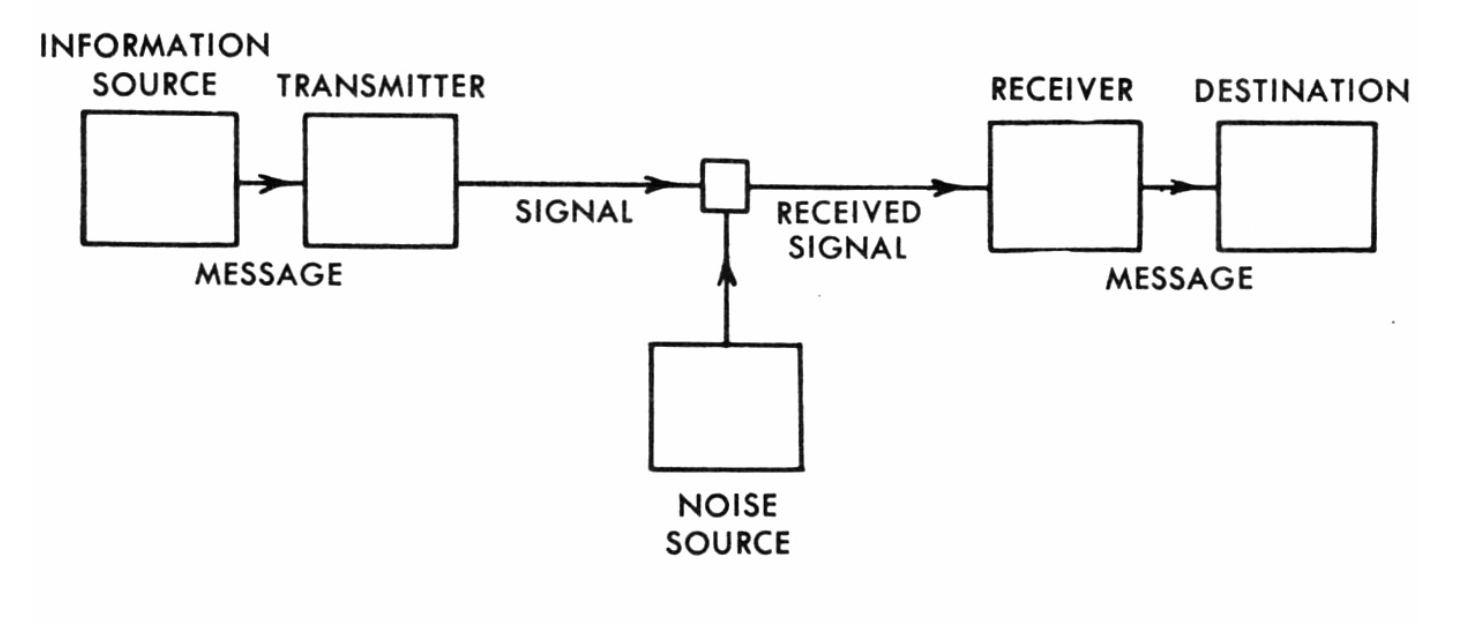

There are two methods for reducing VOCs in the air. They can be trapped on a surface through adsorption (the molecules bind irreversibly to the surface), and the traps are then replenished. Alternatively, the compounds can be trapped and destroyed immediately, generally through oxidation driven by light (photocatalysis). “But in this case, you must make sure the VOCs have been completely destroyed; they decompose into water and CO₂, which are harmless,” the researcher explains. “Sometimes the VOCs are only partially destroyed, thus generating by-products that are also dangerous.”

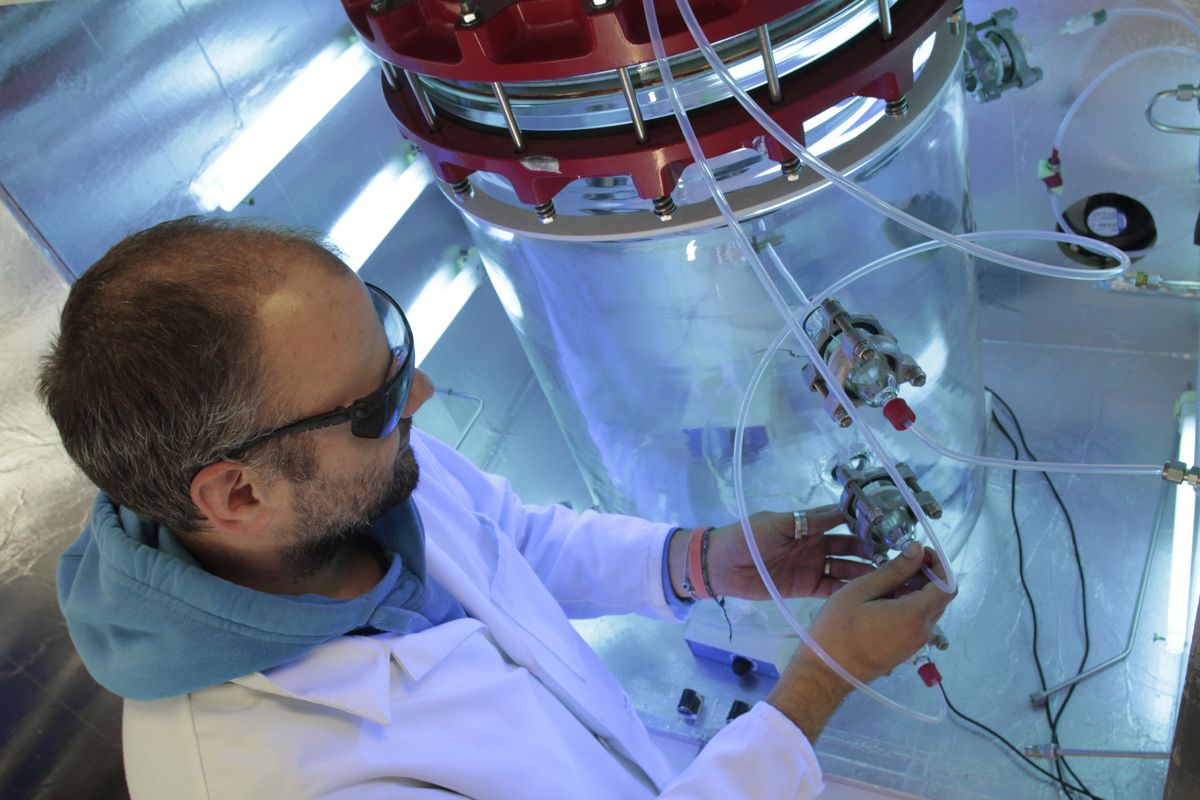

At the SAGE Department, Frédéric’s work complements that of his colleagues on the VOC metrology team. While they take their measurement devices into the field, he prefers to reproduce field conditions in the laboratory: he created a 40-cubic-meter experimental room called IRINA (Innovative Room for INdoor Air studies), where he recreates different types of atmospheres and tests procedures for capturing and destroying VOCs. These procedures are at varying stages of development: Frédéric tests technology already on the market that ADEME (the French Environment and Energy Management Agency) wants to evaluate, as well as adsorbent materials developed by manufacturers who are looking to improve their composition. He also works at even earlier stages, developing his own solutions in the laboratory. “For example, we test the regeneration of adsorbents using different techniques, particularly with plasma,” he explains.

[box type="shadow" align="" class="" width=""]

A long-overdue law

Only laws and standards will force manufacturers to develop effective solutions for eliminating volatile organic compounds. Yet current legislation is not up to par. Decree no. 2011-1727 of 2 December 2011 on guideline values for formaldehyde and benzene in indoor air provides that the concentration levels of these two VOCs must not exceed certain limits in establishments open to the public: 30 µg/m³ for formaldehyde and 5 µg/m³ for benzene, for long-term exposure. However, this law has not yet come into force, since the decrees implementing it have not yet been issued. The number of locations affected by this law makes it very difficult to implement. Its implementation has been postponed until 2018, and even this date remains uncertain.

Furthermore, the Decree of 19 April 2011 on labelling volatile pollutant emissions for construction products, wall cladding, floor coverings, and paint and varnishes aims to better inform consumers about VOC emissions from these products. They must carry a label indicating the emission levels for 11 substances on a four-category scale ranging from A+ to C, modeled on the energy label for household appliances.[/box]
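As a rough illustration of how the guideline values cited in Decree no. 2011-1727 could be applied to measurements, here is a minimal Python sketch. The names `GUIDELINE_UG_M3` and `exceeds_guideline`, as well as the sample readings, are assumptions made for illustration only; they do not come from the decree or from the SAGE team’s work.

```python
# Guideline values quoted above from Decree no. 2011-1727,
# in micrograms per cubic meter (µg/m³), for long-term exposure.
GUIDELINE_UG_M3 = {"formaldehyde": 30.0, "benzene": 5.0}


def exceeds_guideline(compound: str, concentration_ug_m3: float) -> bool:
    """Return True if the measured concentration is above the guideline value."""
    limit = GUIDELINE_UG_M3[compound]
    # "Must not exceed" means a reading equal to the limit is still acceptable.
    return concentration_ug_m3 > limit


# Hypothetical readings, invented purely for this example.
sample = {"formaldehyde": 42.0, "benzene": 2.1}
flags = {name: exceeds_guideline(name, value) for name, value in sample.items()}
print(flags)
```

A real assessment would also depend on how and for how long the air is sampled, which this sketch does not model.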

Improving the standards

What are the results? For now, the most interesting ones concern adsorbent construction materials, for example partitions designed to act as VOC traps. “They don’t consume energy, and show good results in terms of long-term trapping, despite variations due to seasonal conditions (temperature and humidity),” explains Frédéric. “When these materials are well designed, they do not release the emissions they trap.” All these materials are tested under realistic conditions, for example by verifying how the partitions perform once they are painted.

As well as testing the materials themselves, the research is aimed at improving the standards governing anti-VOC measures, so that they come as close as possible to real operating conditions. “We were able to create a list of precise recommendations for qualifying the treatments,” the researcher adds. The goal was to obtain standards that truly prove the devices’ effectiveness. Today, this is far from the case. An investigation published in the magazine Que Choisir in May 2013 showed that most of the air purifiers sold in stores were ineffective, or even degraded air quality by producing secondary pollutants. There was therefore an urgent need to establish a more scientific approach in this area.

A passion for research

For some, becoming a researcher is the fulfilment of a childhood dream. Others are led to the profession through chance and the people they happen to meet. Frédéric Thévenet did not initially see himself as a researcher. His traditional career path, with preparatory classes for an engineering school (Polytech’ Lyon), pointed towards a future in engineering. Yet a chance meeting caused him to change his mind. During his second year at Polytech’, he did an internship at a research lab under the supervision of Dominique Vouagner, a researcher who was passionate about her work at the Institut Lumière Matière (ILM), a joint research unit affiliated with Claude Bernard University Lyon 1 and the CNRS. “I thought it was wonderful, the drive to search, to question, the experimental aspect… It inspired me to earn my DEA (now a Master 2) and apply for a thesis grant.” He was awarded a grant from ADEME on the subject of air treatment… although his studies had focused on materials science. Still, it was a logical choice, since materials play a key role in capturing pollutants. Frédéric does not regret this choice: “Research is a very inspiring activity, involving certain constraints, but also much room for freedom and creativity.”