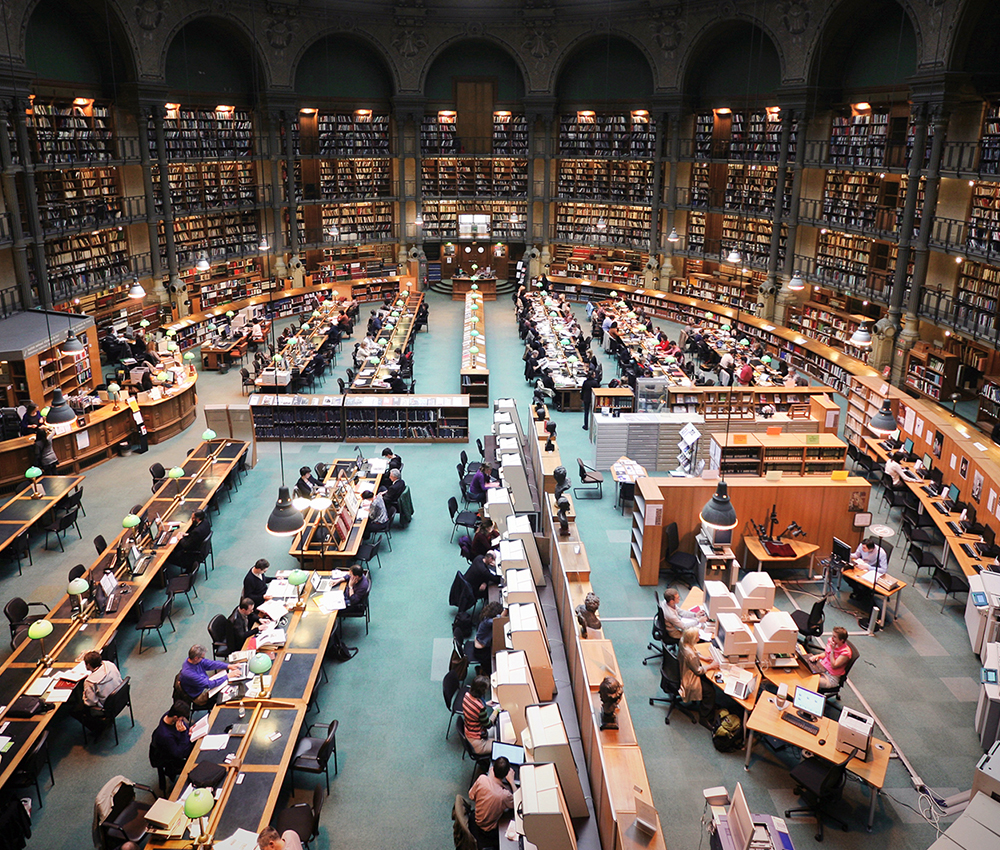

As a repository of French culture, the Bibliothèque Nationale de France (BnF, the French National Library) has always sought to know and understand its users. This is no easy task, especially when it comes to studying the individuals who use Gallica, its digital library. To learn more about them without limiting itself to interviewing samples of individuals, the BnF has joined forces with Télécom ParisTech, taking advantage of the school’s multidisciplinary expertise. To meet this challenge, the scientists are working with IMT’s TeraLab platform to collect and process big data.


Often seen as a driving force for technological innovation, could big data also represent an epistemological revolution? The use of big data in experimental sciences is nothing new; it has already proven its worth. But the humanities have not been left behind. In April 2016, the Bibliothèque Nationale de France (BnF) leveraged its longstanding partnership with Télécom ParisTech (see box below) to carry out research on the users of Gallica, its free online library of digital documents. The methodology used is based in part on the analysis of large quantities of data collected when users visit the website.

Every time a user visits the website, the BnF server records a log of all actions carried out by the individual on Gallica. This information includes pages opened on the website, time spent on the site, links clicked on the page, documents downloaded, etc. These logs, which are anonymized in compliance with the regulations established by the CNIL (French Data Protection Authority), therefore provide a complete map of the user’s journey, from arriving at Gallica to leaving the website.
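To make the idea of a "journey" concrete, here is a minimal sketch of how raw log events might be grouped into visits. The event fields, the sample data and the 30-minute inactivity threshold are all assumptions made for illustration; the article does not describe the actual format of the BnF logs or how sessions are delimited.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical, already-anonymized events: (visitor_id, timestamp, action, target).
EVENTS = [
    ("v1", "2016-04-01T10:00:00", "view", "/doc/a"),
    ("v1", "2016-04-01T10:05:00", "download", "/doc/a"),
    ("v1", "2016-04-01T15:00:00", "view", "/doc/b"),
    ("v2", "2016-04-01T11:00:00", "view", "/doc/c"),
]

SESSION_GAP = timedelta(minutes=30)  # assumed inactivity threshold, not from the article


def build_sessions(events, gap=SESSION_GAP):
    """Group events per visitor and start a new session after `gap` of inactivity."""
    by_visitor = defaultdict(list)
    for visitor, ts, action, target in events:
        by_visitor[visitor].append((datetime.fromisoformat(ts), action, target))

    sessions = []
    for visitor, rows in by_visitor.items():
        rows.sort(key=lambda r: r[0])  # chronological order within a visitor
        current = [rows[0]]
        for row in rows[1:]:
            if row[0] - current[-1][0] > gap:
                sessions.append((visitor, current))  # close the previous session
                current = [row]
            else:
                current.append(row)
        sessions.append((visitor, current))
    return sessions


sessions = build_sessions(EVENTS)
for visitor, rows in sessions:
    duration = rows[-1][0] - rows[0][0]
    print(visitor, len(rows), "events,", duration)
```

Each resulting session is an ordered list of actions, which is the unit the rest of the analysis would work on.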

With 14 million visits per year, these logs represent a large volume of data to process. They must also be correlated with the records of the 4 million documents available for consultation on the site. These records, which include the type of document, creation date, author, etc., provide further valuable information for understanding users and their interest in documents. Sociological fieldwork alone, based on interviewing samples of users of varying size, is not enough to capture the great diversity and complexity of today’s online user journeys.
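Correlating logs with document records amounts to a join on a document identifier. The sketch below shows the principle on a toy scale; the identifiers, field names and values are hypothetical, and the real processing on TeraLab would of course rely on tools suited to millions of rows.

```python
from collections import Counter

# Hypothetical catalogue records keyed by document identifier.
RECORDS = {
    "doc-1": {"type": "press", "year": 1914, "author": None},
    "doc-2": {"type": "monograph", "year": 1857, "author": "A. Author"},
}

# Hypothetical log entries: (session_id, document_id).
VIEWS = [("s1", "doc-1"), ("s1", "doc-2"), ("s2", "doc-1"), ("s3", "doc-9")]


def enrich(views, records):
    """Attach catalogue metadata to each view, keeping unmatched views visible."""
    enriched = []
    for session_id, doc_id in views:
        record = records.get(doc_id)  # None when the log points to an unknown record
        enriched.append({"session": session_id, "doc": doc_id, "record": record})
    return enriched


enriched = enrich(VIEWS, RECORDS)
views_per_type = Counter(e["record"]["type"] for e in enriched if e["record"])
unmatched = [e["doc"] for e in enriched if e["record"] is None]

print(views_per_type)
print("unmatched:", unmatched)
```

Keeping unmatched entries rather than silently dropping them is a deliberate choice here: gaps between logs and records are themselves useful to know about before drawing conclusions.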

Researchers at Télécom ParisTech therefore took a multidisciplinary approach. Sociologist Valérie Beaudouin teamed up with François Roueff to establish a dialogue between the sociological analysis of uses through field research, on one hand, and data mining and modeling on the other. “Adding this big data component allows us to use the information contained in the logs and records to determine the typical behavioral profiles and behavior of Gallica users,” explains Valérie Beaudouin. The data is collected and processed on IMT’s TeraLab platform. The platform provides researchers with a turnkey working environment that can be tailored to their needs and offers more advanced features than commercially available data processing tools.


What are the different profiles of Gallica users?

François Roueff and his team were tasked with using the information available to develop unsupervised learning algorithms in order to identify categories of behavior within the large volume of data. After six months of work, the first results appeared. The initial finding was that only 10 to 15% of browsing activity on Gallica involves consulting several digital documents. The remaining 85 to 90% consists of occasional visits to consult a specific document.
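The article does not say which unsupervised algorithms the team developed. As a stand-in, the sketch below uses plain k-means, one of the simplest clustering methods, to show how sessions can fall into categories without any labels being supplied. The two features (documents consulted, minutes on site), the sample values and the choice of two clusters are assumptions for illustration only.

```python
import math


def kmeans(points, centroids, iterations=20):
    """Plain k-means: assign each point to its nearest centroid, then recompute centroids."""
    centroids = [tuple(c) for c in centroids]
    labels = [0] * len(points)
    for _ in range(iterations):
        labels = [
            min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
            for p in points
        ]
        new_centroids = []
        for k in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:
                new_centroids.append(
                    tuple(sum(axis) / len(members) for axis in zip(*members))
                )
            else:
                new_centroids.append(centroids[k])  # keep the centroid of an empty cluster
        if new_centroids == centroids:  # assignments are stable, stop early
            break
        centroids = new_centroids
    return labels, centroids


# Hypothetical sessions: (documents consulted, minutes spent on the site).
SESSIONS = [(1, 2.0), (1, 1.0), (1, 3.0), (2, 4.0), (8, 40.0), (12, 55.0)]

# Deterministic starting centroids so the example is reproducible.
labels, centroids = kmeans(SESSIONS, centroids=[SESSIONS[0], SESSIONS[-1]])
print(labels)
print(centroids)
```

On this toy data the short, single-document visits and the long, multi-document sessions end up in separate groups. A real analysis would need to rescale the features and justify the number of clusters, which this sketch leaves aside.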

“We observed some very interesting things about the 10 to 15% of Gallica users involved,” says François Roueff. “If we analyze the Gallica sessions in terms of variety of types of documents (monographs, press, photographs etc.), eight out of ten categories only use a single type.” This reflects a tropism on the part of users toward a certain form of media. When it comes to consulting documents, in general there is little variation in the ways in which Gallica users obtain information. Some search for information about a given topic solely by consulting photographs, while others consult solely press articles.
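The variety of document types within a session is straightforward to measure once each consultation carries a type. The sketch below computes, for hypothetical data, the share of sessions that stick to a single type. Note that it works at the level of individual sessions, whereas the figure quoted above concerns categories of sessions; it illustrates the kind of measurement involved rather than reproducing the team's result.

```python
from collections import defaultdict

# Hypothetical (session_id, document_type) pairs, one per document consulted.
VIEWS = [
    ("s1", "press"), ("s1", "press"), ("s1", "press"),
    ("s2", "photograph"), ("s2", "photograph"),
    ("s3", "monograph"), ("s3", "press"),
]


def types_per_session(views):
    """Return the set of distinct document types consulted in each session."""
    types = defaultdict(set)
    for session_id, doc_type in views:
        types[session_id].add(doc_type)
    return dict(types)


def single_type_share(views):
    """Fraction of sessions in which every document consulted has the same type."""
    types = types_per_session(views)
    single = sum(1 for seen in types.values() if len(seen) == 1)
    return single / len(types)


share = single_type_share(VIEWS)
print(f"{share:.0%} of sessions use a single document type")
```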

According to Valérie Beaudouin, the central focus of this research lies in understanding such behavior. “Using these results, we develop hypotheses, which must then be confirmed by comparing them with other survey methodologies,” she explains. Data analysis is therefore supplemented by an online questionnaire to be filled out by Gallica users, field surveys among users, and even by equipping certain users with video cameras to monitor their activity in front of their screens.


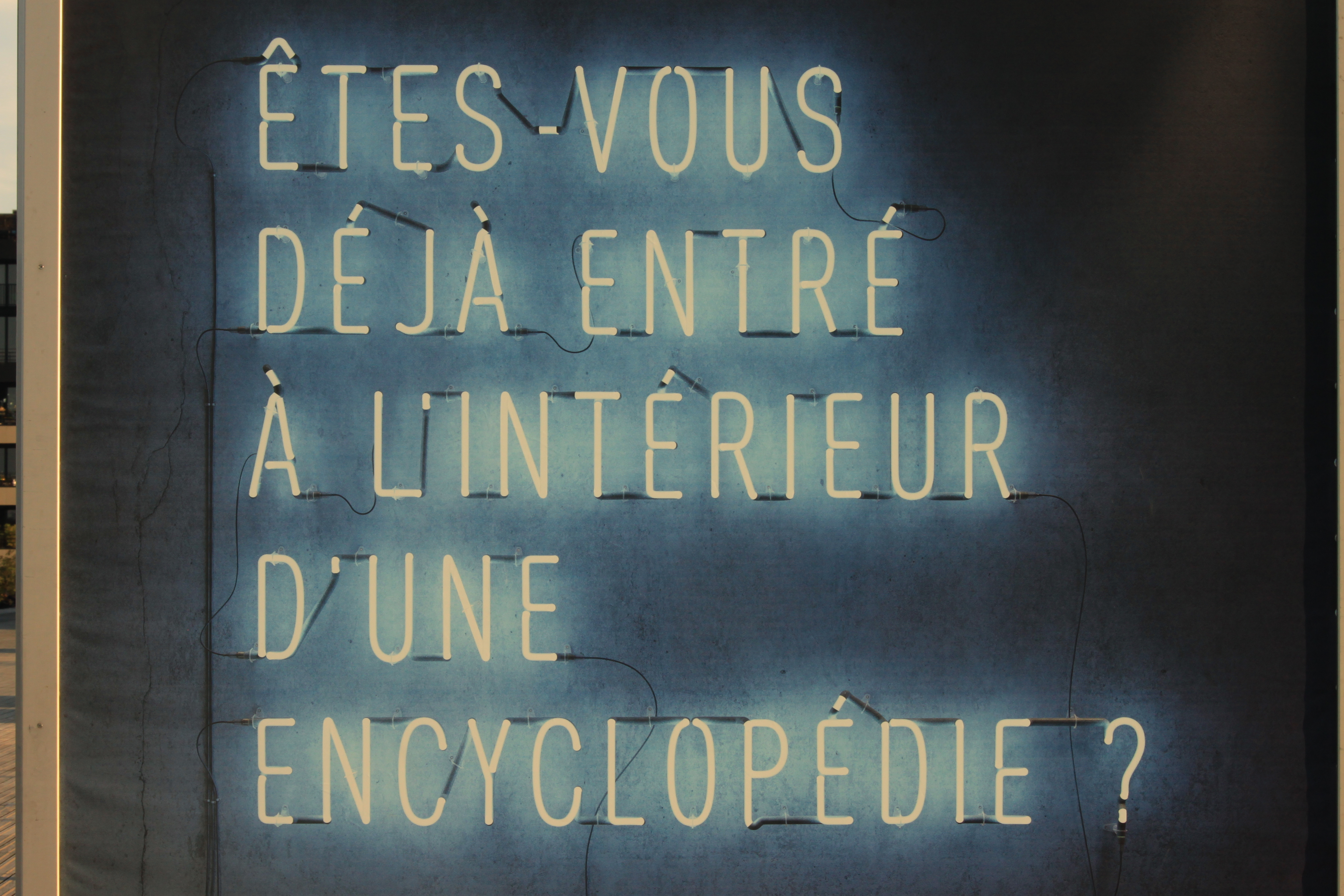

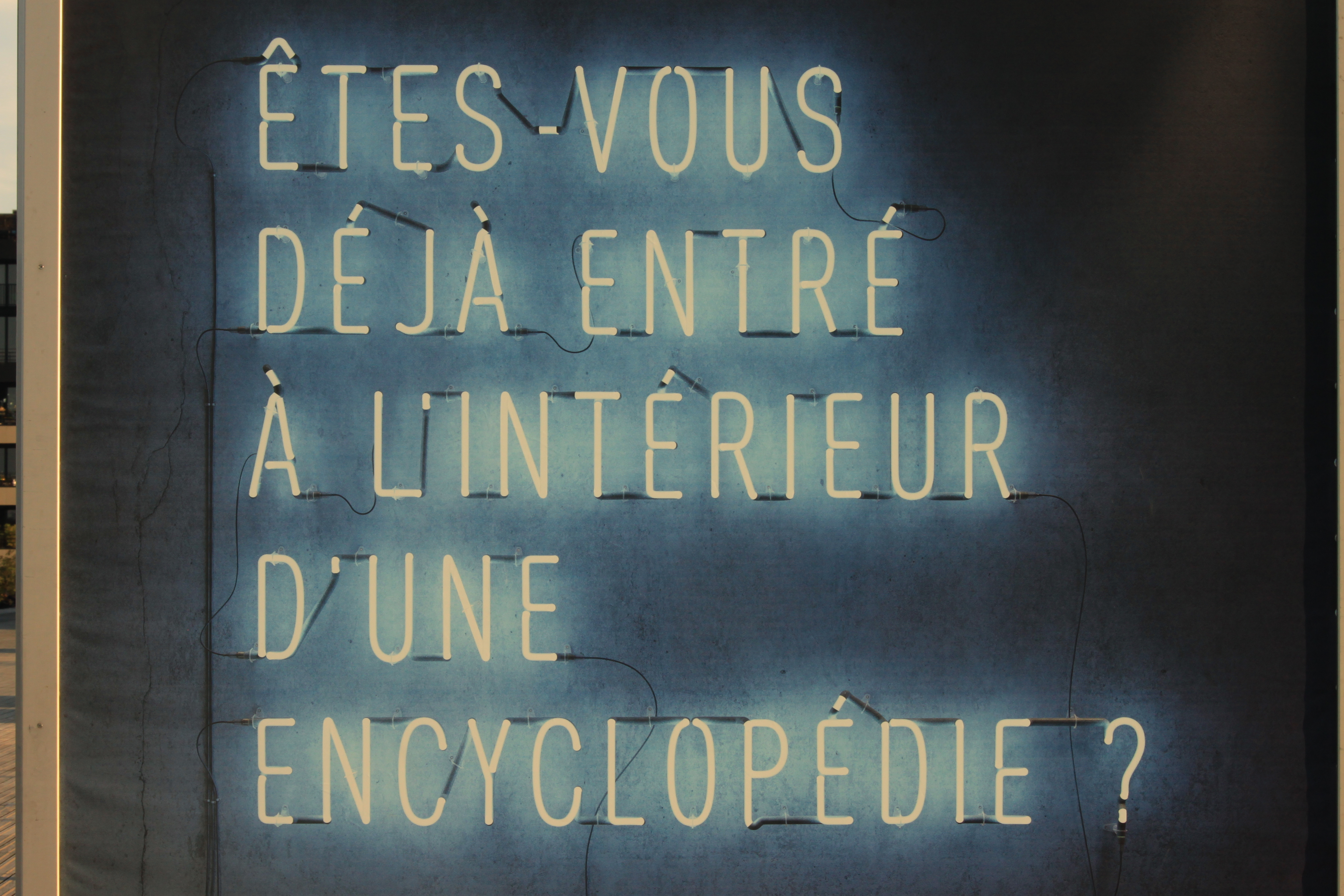

Photo from a poster for the Bibliothèque Nationale de France (BnF), October 2016. For the institution, making culture available to the public is a crucial mission, and that means digital resources must be made available in a way that reflects users’ needs.

“Field studies have allowed us to understand, for example, that certain groups of Gallica users prefer downloading documents so they can read them offline, while others would rather consult them online to benefit from the high-quality zoom feature,” says Valérie Beaudouin. The Télécom ParisTech team also noticed that in order to find a document on the digital library website, some users preferred to use Google and include the word “Gallica” in their search, instead of using the website’s internal engine.

Confirming the hypotheses also means working closely with teams at BnF, who provide knowledge about the institution and the technical tools available to users. Philippe Chevallier, project manager for the Strategy and Research delegation of the cultural institution, attests to the value of dialogue with the researchers: “Through our discussions with Valérie Beaudouin, we learned how to take advantage of the information collected by community managers about individuals who are active on social media, as well as user feedback received by email.”

Analyzing user communities: a crucial challenge for institutions

The project has provided BnF with insight into how existing resources can be used to analyze users. This is another source of satisfaction for Philippe Chevallier, who is committed to the success of the project. “This project is the proof that knowledge about user communities can be a research challenge,” he says with excitement. “It’s too important an issue for an institution like ours, so we need to dedicate time to studying it and leverage real scientific expertise,” he adds.

And when it comes to Gallica, the mission is even more crucial. It is impossible to see Gallica users, whereas the predominant profile of users of BnF’s physical locations can be observed. “A wide range of tools are now available for companies and institutions to easily collect information about online uses or opinions: e-reputation tools, web analytics tools etc. Some of these tools are useful, but they offer limited possibilities for controlling their methods and, consequently, their results. Our responsibility is to provide the library with meaningful, valuable information about its users and to do so, we need to collaborate with the research community,” says Philippe Chevallier.

The project will continue until 2017 so that the BnF can obtain the precise information it is seeking. The findings will offer insights into how the cultural institution can improve its services. “We have a public service mission to make knowledge available to as many people as possible,” says Philippe Chevallier. In light of observations by researchers, the key question that will arise is how to optimize Gallica. Who should take priority? The minority of users who spend the most time on the website, or the overwhelming majority of users who only use it sporadically? Users from the academic community (researchers, professors, students) or the “general public”?

The BnF will have to take a stance on these questions. In the meantime, the multidisciplinary team at Télécom ParisTech will continue its work to describe Gallica users. In particular, it will seek to fine-tune the categorization of sessions by enhancing them with a semantic analysis of the records of the 4 million digital documents. This will make it possible to determine, within the large volume of data collected, which topics the sessions are related to. The task poses modeling problems which require particular attention, since the content of the records is intrinsically inhomogeneous: it varies greatly depending on the type of document and digitization conditions.


Online users: a focus for the BnF for 15 years

The first study carried out by the BnF to describe its online user community dates back to 2002, five years after the launch of its digital library, in the form of a research project that already combined approaches (online questionnaires, log analysis etc.). In the years that followed, digital users became an increasingly important focus for the institution. In 2011, a survey of 3,800 Gallica users was carried out by a consulting firm. Realizing that studying users would require more in-depth research, the BnF turned to Télécom ParisTech in 2013 with the objective of assessing the different possible approaches for a sociological analysis of digital uses. At the same time, BnF launched its first big data research to measure Gallica’s position on the French internet for World War I research. In 2016, the sociology of online uses and big data experiment components were brought together, resulting in the project aiming to understand the uses and users of Gallica.
