The satellite measurements taken every day depend heavily on atmospheric conditions, which are the main cause of missing data. In a scientific publication, Ronan Fablet, a researcher at Télécom Bretagne, proposes a new method for reconstructing sea surface temperature in order to fill the gaps in incomplete observations. The reconstructed data provides fine-scale maps with a consistent level of detail, which is essential for understanding many different physical and biological phenomena.

What do a fish’s migration through the ocean, a cyclone, and the Gulf Stream have in common? They can all be studied using satellite observations. This is a subject close to Ronan Fablet’s interests. As a researcher at Télécom Bretagne, he is particularly interested in processing satellite data to characterize the dynamics of the ocean. This field of research covers several topics, including the reconstruction of incomplete observations. Missing data impairs satellite observations and limits how well the ocean, its activity and its interactions can be represented. Yet these representations are essential in many areas, from the study of marine biology to the ocean-atmosphere exchanges that directly influence the climate. In an article published in June 2016 in IEEE J-STARS[1], Ronan Fablet proposed a new statistical interpolation approach to compensate for the lack of observations. Let’s take a closer look at the data assimilation challenges in oceanography.

Temperature, salinity and more: the oceans’ critical parameters

In oceanography, the term geophysical field refers to one of the ocean’s fundamental parameters: sea surface temperature (SST), salinity (the quantity of salt dissolved in the water), water color, which provides information on primary production (chlorophyll concentration), and altimetry (ocean surface topography).

Ronan Fablet’s article focuses on the SST for several reasons. First of all, the SST is the most widely measured parameter in oceanography. It benefits from high-resolution measurements: only about one kilometer separates two observed points, unlike salinity measurements, which have a much coarser resolution (100 km between two measurement points). Surface temperature is also a common input parameter for the numerical models used to study ocean-atmosphere interactions, since many heat transfers take place between the two. One obvious example is cyclones, which are fed by heat pumped from the oceans’ warmer regions. Finally, temperature is essential in determining the major ocean structures: it allows surface currents to be mapped at small scales.

But how can a satellite measure the sea surface temperature? Like any material, the ocean responds differently depending on the wavelength at which it is observed. “To study the SST, we can, for example, use an infrared sensor that first measures the energy. A law can then be used to convert this into the temperature,” explains Ronan Fablet.
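The article does not name the law in question. As an illustration only, the sketch below assumes it is Planck's law for a blackbody, inverted to turn a measured infrared radiance into a "brightness temperature". The function names and the 11 µm wavelength are choices made for this example, and real SST retrievals also correct for the atmosphere and for the sea's emissivity, which this sketch ignores.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def planck_radiance(wavelength_m, temperature_k):
    """Spectral radiance of a blackbody, in W m^-2 sr^-1 m^-1."""
    a = 2.0 * H * C**2 / wavelength_m**5
    b = H * C / (wavelength_m * K_B * temperature_k)
    return a / math.expm1(b)


def brightness_temperature(wavelength_m, radiance):
    """Invert Planck's law: measured radiance -> temperature in kelvin.

    Assumption for this sketch: the sea behaves as a perfect blackbody
    and the atmosphere does not alter the signal.
    """
    a = 2.0 * H * C**2 / wavelength_m**5
    return H * C / (wavelength_m * K_B * math.log1p(a / radiance))


if __name__ == "__main__":
    wavelength = 11e-6                      # 11 micrometers, thermal infrared
    energy = planck_radiance(wavelength, 290.0)
    print(brightness_temperature(wavelength, energy))
```

The round trip (temperature to radiance and back) is only a consistency check; it says nothing about the accuracy of an operational retrieval.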

Overcoming the problem of missing data in remote sensing

Unlike geostationary satellites, which orbit at the same rate as the Earth rotates and therefore stay above the same point, non-geostationary satellites generally complete one orbit in a little over 1 hour and 30 minutes. This enables them to fly over many different points on Earth in one day. They therefore build images by accumulating data. Yet some points in the ocean cannot be seen. The main cause of missing data is the sensitivity of satellite sensors to atmospheric conditions. In the case of infrared measurements, clouds block the observations. “In a predefined area, it is sometimes necessary to accumulate two weeks’ worth of observations in order to benefit from enough information to begin reconstructing the given field,” explains Ronan Fablet. In addition, the heterogeneous nature of the cloud cover must be taken into account. “The rate of missing data in certain areas can be as high as 90%,” he explains.
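To get a feel for why accumulation over many days is needed, here is a back-of-the-envelope sketch. It assumes, purely for illustration, that each day's cloud mask is independent of the previous one, which real cloud cover is not, so the figures are optimistic. The function names are hypothetical.

```python
import math


def expected_coverage(missing_rate, n_days):
    """Expected fraction of pixels seen at least once in n_days.

    Assumption: every pixel is hidden with probability `missing_rate`
    each day, independently from one day to the next.
    """
    return 1.0 - missing_rate ** n_days


def days_needed(missing_rate, target_coverage):
    """Smallest number of days whose expected coverage reaches the target."""
    if not 0.0 < missing_rate < 1.0:
        raise ValueError("missing_rate must be strictly between 0 and 1")
    if not 0.0 < target_coverage < 1.0:
        raise ValueError("target_coverage must be strictly between 0 and 1")
    return math.ceil(math.log(1.0 - target_coverage) / math.log(missing_rate))


if __name__ == "__main__":
    print(expected_coverage(0.9, 14))   # coverage after two weeks at 90% missing
    print(days_needed(0.9, 0.75))       # days to see three quarters of the area
```

Under this simplified model, a 90% daily missing rate still leaves roughly a quarter of the area unobserved after two weeks, which is in line with the order of magnitude quoted above.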

The lack of data is a real challenge. Modelers must find a compromise between the generality of the interpolation model and the complexity of its calculations. The problem is that the equations describing the movement of fluids, such as water, are not easy to solve. This is why these models are often simplified.

A new interpolation approach

According to Ronan Fablet, the techniques that are being used do not take full advantage of the available information. The approach he proposes reaches beyond these limits: “we currently have access to 20 to 30 years of SST data. The idea is that among these samples we can find an implicit representation of the ocean variations that can identify an interpolation. Based on this knowledge, we should be able to reconstruct the incomplete observations that currently exist.”

The general idea of Ronan Fablet’s method is based on the principle of learning. If a situation observed today corresponds to a previous situation, the past observations can be used to reconstruct the current data. It is an approach based on analogy.
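The principle of analogy can be sketched in a few lines. The example below is not the method from the article: it is a deliberately simple nearest-neighbor version, in which the missing pixels of today's field are filled with the average of the most similar past fields. The function name, the array layout and the choice of a root-mean-square distance are all assumptions made for this illustration.

```python
import numpy as np


def analog_fill(current, catalog, k=3):
    """Fill NaN gaps in `current` using its k most similar past fields.

    current: 2-D array, NaN where the observation is missing.
    catalog: 3-D array (n_past, ny, nx) of complete past fields.
    Returns the filled field and the indices of the selected analogs.
    """
    observed = ~np.isnan(current)
    if not observed.any():
        raise ValueError("no observed pixels to compare against")

    # Compare today's field with each past field on observed pixels only.
    diff = catalog[:, observed] - current[observed]
    dist = np.sqrt(np.mean(diff ** 2, axis=1))

    # Keep the k closest past situations and average them.
    nearest = np.argsort(dist)[:k]
    analog_mean = catalog[nearest].mean(axis=0)

    # Observed pixels are left untouched; only the gaps are filled.
    filled = current.copy()
    filled[~observed] = analog_mean[~observed]
    return filled, nearest


if __name__ == "__main__":
    past = np.stack([np.full((4, 4), v) for v in (10.0, 20.0, 30.0)])
    today = np.full((4, 4), 20.1)
    today[1:3, 1:3] = np.nan            # simulated cloud
    filled, used = analog_fill(today, past, k=1)
    print(used, filled[1, 1])
```

With 20 to 30 years of archived fields, the catalog would be far larger and the comparison would typically be done on local patches rather than whole maps, but the logic of "find similar past situations, then borrow from them" stays the same.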

Implementing the model

In his article, Ronan Fablet therefore used an analogy-based model. He characterized the SST using the law that best represents its spatial variations and thus most closely reflects reality.

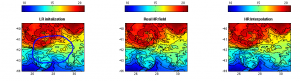

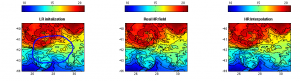

In his study, Ronan Fablet used low-resolution SST observations (100 km between two observations). With low-resolution data, optimal interpolation is usually favored. Its goal is to reduce reconstruction errors (the differences between the simulated field and the observed field) at the expense of small-scale details, so the resulting image has a smooth appearance. For his interpolation, however, the researcher chose to maintain a high level of detail. The only uncertainty that remains is where a given detail is located on the map. This is why he opted for a stochastic interpolation, a method that can simulate several examples, each placing the detail in a different location. Ultimately, this approach enabled him to create SST fields with the same level of detail throughout, with the trade-off that, locally, the reconstruction error is no better than that of the optimal method.
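The contrast between the two approaches can be illustrated on a one-dimensional toy problem. The sketch below is not the model from the article: it assumes a zero-mean Gaussian field with an exponential covariance (both hypothetical choices), computes the smooth optimal-interpolation estimate, then draws several stochastic realizations that keep the small-scale variability.

```python
import numpy as np


def exp_cov(xa, xb, variance=1.0, length=5.0):
    """Exponential covariance between two sets of 1-D positions."""
    return variance * np.exp(-np.abs(xa[:, None] - xb[None, :]) / length)


def interpolate(x_obs, y_obs, x_new, n_draws=0, seed=0):
    """Return the optimal-interpolation mean and n_draws stochastic fields.

    Assumption: y_obs are anomalies of a zero-mean Gaussian field.
    """
    k_oo = exp_cov(x_obs, x_obs) + 1e-8 * np.eye(len(x_obs))
    k_no = exp_cov(x_new, x_obs)
    k_nn = exp_cov(x_new, x_new)

    weights = np.linalg.solve(k_oo, k_no.T).T   # interpolation weights
    mean = weights @ y_obs                      # smooth, minimum-error estimate
    cov = k_nn - weights @ k_no.T               # uncertainty left in the gap
    cov = (cov + cov.T) / 2 + 1e-8 * np.eye(len(x_new))

    # Each draw is one plausible field that honors the observations.
    rng = np.random.default_rng(seed)
    draws = rng.multivariate_normal(mean, cov, size=n_draws)
    return mean, draws


if __name__ == "__main__":
    x_obs = np.array([0.0, 10.0, 40.0, 50.0])
    y_obs = np.array([1.0, -1.0, 0.5, -0.5])
    x_gap = np.linspace(15.0, 35.0, 21)         # unobserved stretch
    mean, draws = interpolate(x_obs, y_obs, x_gap, n_draws=5)
    print(np.var(np.diff(mean)), np.var(np.diff(draws, axis=1), axis=1))
```

In this Gaussian setting, the mean is the estimate with the smallest expected error but it smooths out fine structure, whereas each draw looks realistically detailed while, on average, sitting further from the truth at any given point.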

“The proportion of ocean energy within distances under 100 km is very significant in the overall balance. At these scales, a lot of interaction takes place between physics and biology. For example, schools of fish and plankton structures are formed under the 100 km scale. Maintaining a small-scale level of detail also serves to measure the impact of physics on ecological processes,” explains Ronan Fablet.

In the figures, the blue circle marks the area of missing data. The maps show the variations in SST at low resolution based on a model (left), at high resolution based on observations (center), and at high resolution based on the model in the article (right).

New methods ahead using deep learning

Another modeling method has recently begun to emerge using deep learning techniques. The model designed using this method learns from photographs of the ocean. According to Ronan Fablet, this method is significant: “it incorporates the idea of analogy, in other words, it uses past data to find situations that are similar to the current context. The advantage lies in the ability to create a model based on many parameters that are calibrated by large learning data sets. It would be particularly helpful in reconstructing the missing high-resolution data from geophysical fields observed using remote sensing.”

[1] IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, a peer-reviewed journal.
