Simplicity theory: teaching relevance to artificial intelligences

Simplicity theory is founded on humans’ sensitivity to variations in complexity: something that turns out to be unexpectedly simple suddenly becomes interesting. This concept, developed by Jean-Louis Dessalles of Télécom ParisTech, challenges Shannon’s probabilistic method for describing certain information. Using this new approach, he can account for phenomena that are otherwise hard to explain, such as creativity, decision-making, coincidence, or “if only I had…” thoughts. All of these concepts could someday be applied to artificial intelligence.

How can we pinpoint the factors that determine human interest? Jean-Louis Dessalles, a researcher at Télécom ParisTech, addresses this seemingly philosophical question from the perspective of information theory. To mark the centenary of the birth of Claude Shannon, the creator of information theory, his successors will present their recent research at the Institut Henri Poincaré from October 26 to 28. On this occasion, Jean-Louis Dessalles will present his premise that the solution to an apparently complex problem can be found in simplicity.

Founded on the work of Claude Shannon

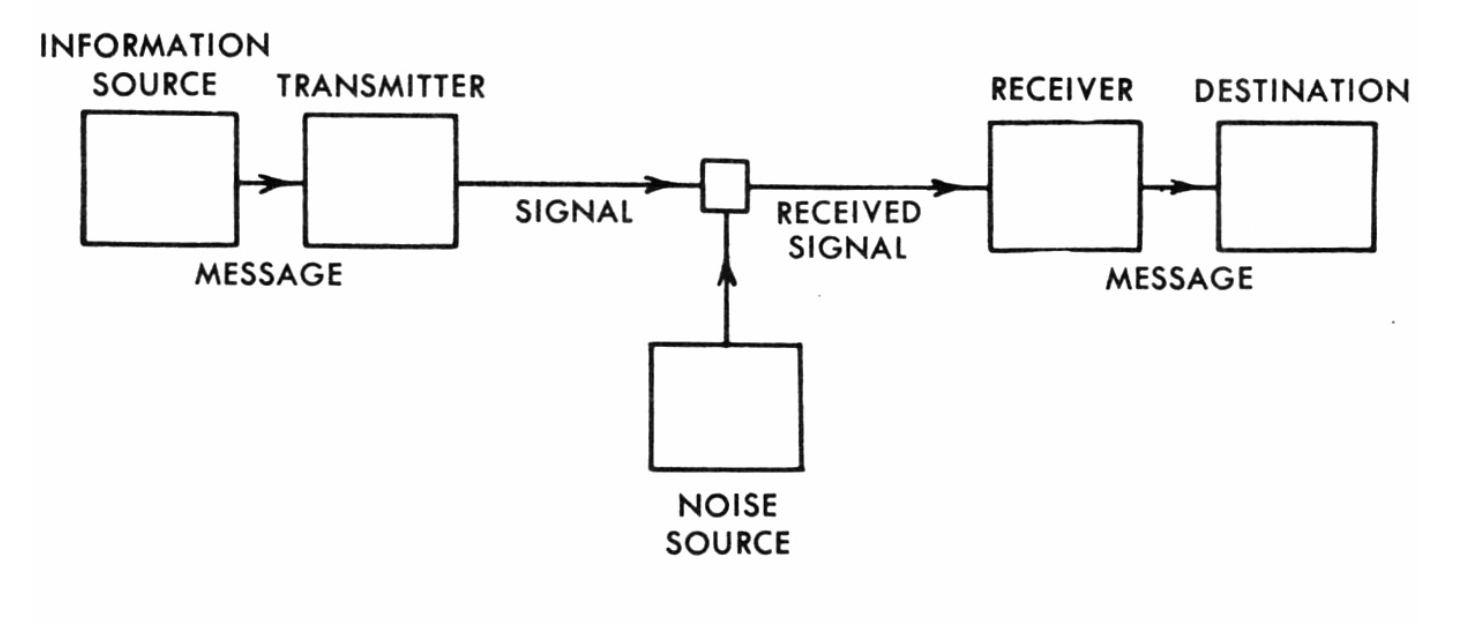

Claude Shannon defined information based on three principles: coding, surprise, and entropy. The last of these makes it possible to eliminate redundancy, an idea that is generalized by Kolmogorov complexity. This notion of complexity corresponds to the size of the shortest description of whatever interests the observer. For example, suppose a message is transmitted that is identical to one communicated previously. Its minimal description consists of stating that it is a copy of the previous message. The complexity therefore decreases, and the information is simplified.
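The repeated-message example can be illustrated with a short sketch. Kolmogorov complexity itself cannot be computed, so this sketch assumes that the size of a zlib-compressed message is an acceptable stand-in for the length of its shortest description. The helper names `compressed_size` and `conditional_size` are hypothetical and chosen only for this illustration.

```python
import random
import zlib


def compressed_size(data: bytes) -> int:
    """Size in bytes of the zlib-compressed data (a rough proxy for description length)."""
    return len(zlib.compress(data, 9))


def conditional_size(message: bytes, context: bytes) -> int:
    """Approximate extra bytes needed to describe `message` once `context` is already known."""
    return compressed_size(context + message) - compressed_size(context)


# A fixed seed keeps the illustration reproducible.
rng = random.Random(0)
previous = bytes(rng.randrange(256) for _ in range(1000))  # message already received
fresh = bytes(rng.randrange(256) for _ in range(1000))     # unrelated new message

cost_of_copy = conditional_size(previous, previous)  # the same message sent again
cost_of_new = conditional_size(fresh, previous)      # a genuinely new message

print("extra bytes for a copy:", cost_of_copy)
print("extra bytes for a new message:", cost_of_new)
```

Under this assumption, the copy costs only a small fraction of what the new message costs, because the compressor can simply point back to what it has already seen.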

In his research, Jean-Louis Dessalles reuses Shannon’s premises, but in his opinion the probabilistic approach is not enough. To illustrate, the researcher uses the example of the lottery. “Imagine the results of a lottery draw are ‘1, 2, 3, 4, 5, 6’. From a probability perspective, there is nothing astonishing about this combination, because its probability is the same as that of any other combination. However, humans find this sensational, and see it as more astonishing than a random series of numbers in no particular order.” Yet Shannon stated: “what is improbable is interesting”. For Jean-Louis Dessalles, this presents a problem: probabilities alone are unable to represent human interest and the type of information a human being will consider.

The simplicity theory

Jean-Louis Dessalles offers a new cognitive approach that he calls simplicity theory. This approach does not focus on the minimal description of information, but rather on discrepancies in information, in other words the difference between what the observer expects and what he or she observes. This is how he redefines Shannon’s concept of surprise. For a human observer, what is expected corresponds to a causal probability. In the lottery example, the observer expects to obtain a set of six numbers that are completely unrelated to each other. However, if the results are “1, 2, 3, 4, 5, 6”, the observer recognizes a logical sequence, one with a low Kolmogorov complexity. Between the expected combination and the observed combination, the description of the draw has therefore become simpler, and understandably so, since six random numbers have given way to an easily grasped sequence. The expected complexity of the six numbers of a lottery draw is much higher than the complexity of the draw that was actually obtained. An event is considered surprising when it is perceived as being simpler to describe than it is to produce.
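One way to read “simpler to describe than it is to produce” is as a difference between two quantities measured in bits: the cost of producing the event minus the cost of describing it. The sketch below applies that reading to the lottery example. It rests on several assumptions that are not in the article: a draw of 6 balls out of 49, a deliberately crude description scheme (a draw with a constant step is described by its first number and its step, any other draw has no shortcut), and the hypothetical function names used here.

```python
from math import comb, log2


def generation_bits(n_balls: int = 49, n_drawn: int = 6) -> float:
    """Bits needed to single out one draw when all combinations are equally likely."""
    return log2(comb(n_balls, n_drawn))


def description_bits(draw: list[int], n_balls: int = 49) -> float:
    """Toy description length, NOT true Kolmogorov complexity (which is uncomputable).

    A draw whose sorted numbers have a constant step is described by its first
    number and its step. Any other draw is assumed to have no shortcut, so
    describing it costs as much as producing it.
    """
    numbers = sorted(draw)
    steps = {b - a for a, b in zip(numbers, numbers[1:])}
    if len(steps) == 1:
        return 2 * log2(n_balls)  # first number + step
    return generation_bits(n_balls, len(numbers))


def unexpectedness(draw: list[int], n_balls: int = 49) -> float:
    """Cost of producing the draw minus cost of describing it, in bits."""
    return generation_bits(n_balls, len(draw)) - description_bits(draw, n_balls)


print(unexpectedness([1, 2, 3, 4, 5, 6]))        # regular sequence: clearly positive
print(unexpectedness([3, 17, 22, 31, 40, 48]))   # no pattern found: zero
```

Both draws are equally probable, so `generation_bits` is the same for each. Only the description cost differs, which is what separates the sensational draw from the ordinary one in this toy model.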

Simplicity theory was originally developed to account for what people see as interesting in language. The concept of relevance is particularly important here, because the word refers to all the elements of a piece of information that are worthy of interest, which is something humans can detect very easily. Human interest is made up of two different components: the unique aspect of a situation and the emotion linked to the information. When simplicity theory is applied, it can help to detect the relevance of news in the media, the subjectivity surrounding an event, or the associated emotional intensity. This emotional reaction depends on proximity in space and time. “An accident that occurs two blocks away from your house will have a greater impact than one that occurs four blocks away, or fifteen blocks, etc. The proximity of a place is characterized by a reduction in the number of bits in its description. The closer it is, the simpler it is,” the researcher explains. Jean-Louis Dessalles has plenty of examples to illustrate simplicity, especially since each scenario can be studied in retrospect to better identify what is or is not relevant. This is the very strength of the theory: it characterizes what moves away from the norm and is not usually studied.
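The link between proximity and the number of bits can be sketched as follows. This is only an illustration under an assumption not stated in the article: the city is modelled as a regular grid, and designating a place means picking it out among all the blocks that are at least as close to the observer. The function names are hypothetical.

```python
from math import log2


def locations_within(blocks: int) -> int:
    """Number of grid cells at Manhattan distance <= `blocks` from the observer."""
    return 2 * blocks * (blocks + 1) + 1


def location_bits(blocks: int) -> float:
    """Bits needed to designate one cell among all those at least as close."""
    return log2(locations_within(blocks))


# Distances taken from the quote: two, four, and fifteen blocks away.
for distance in (2, 4, 15):
    print(distance, "blocks:", round(location_bits(distance), 2), "bits")
```

In this model the description of a place grows with its distance, so a nearby accident is simpler to locate and, following the researcher’s reasoning, has a greater impact.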


Research for artificial intelligence

The ultimate goal of Jean-Louis Dessalles’ theory is to enable a machine to determine what is relevant without having it explained ahead of time. “AI systems currently fail in this area. Providing them with this ability would enable them to determine when they must compress the information,” Jean-Louis Dessalles explains. Today, these artificial intelligences rely on a statistical notion of relevance, which is often synonymous with importance but is far removed from relevance as perceived by humans. “AI systems based on statistical machine learning are unable to identify the distinctive aspect of an event that creates its interest, because the process eliminates all the aspects that are out of the ordinary,” the researcher explains. Simplicity theory, on the other hand, is able to characterize any event, to such an extent that the theory currently seems limitless. The researcher recommends that machines learn relevance the way children learn it naturally. Beyond the idea of interest, the theory also encompasses the computability of creativity, regrets, and the decision-making process. These are all concepts that will be of interest for future artificial intelligence programs.


Claude Shannon, code wizard

To celebrate the centenary of Claude Shannon’s birth, the Institut Henri Poincaré is organizing a conference dedicated to information theory, from October 26 to 28. The conference will explore the mathematician’s legacy in current research, as well as the areas now taking shape in the field he created. Institut Mines-Télécom, a partner of the event along with CNRS, UPMC and Labex Carmin, will participate through presentations from four of its researchers: Olivier Rioul, Vincent Gripon, Jean-Louis Dessalles and Gérard Battail.

To find out more about Shannon’s life and work, CNRS has created a website that recounts his journey.
