Digital transition: the music industry reviews its progress

The music industry – the sector hit hardest by digitization – now seems to have completed the transformation that was initiated by digital technology. With the rise of streaming music, there has been a shift in the balance of power. Producers now look for revenue from sources other than record sales, and algorithms constitute the differentiating factor in the wide range of offers available to consumers.

Spotify, Deezer, Tidal, Google Music and Apple Music… Streaming is now the norm in music consumption. Albums have never been so digitized, to the point that it raises the question: has the music industry reached the end of its digital transformation? After countless attempts in the 2000s to launch new economic models, such as the purchase of individual tracks through iTunes or voluntary contributions, as with the Radiohead album In Rainbows, streaming music directly online seems to have emerged as a lasting solution.

Marc Bourreau is an economist at Télécom ParisTech and runs the Innovation and Regulation Chair,[1] which is organizing a conference on September 28 on the topic “Music – the end of the digital revolution?” In his opinion, despite many artists’ complaints about the low royalties they receive from streaming plays, this is a durable model. Based on the principle of payment proportional to the number of plays (Spotify pays 70% of its revenue to rights holders), streaming is now widely accepted by producers and consumers.
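The principle of proportional payment can be illustrated with a short sketch. The article gives only the 70% figure and the idea of paying in proportion to plays; the function name, the sample revenue and the play counts below are hypothetical, and real royalty contracts are likely to include terms this simplification ignores.

```python
def pro_rata_payouts(revenue, plays_by_holder, payout_share=0.70):
    """Split a share of platform revenue among rights holders by play count.

    Assumption: a simple pro-rata rule in which each holder receives
    (their plays / total plays) of the payout pool. The 70% default comes
    from the article; everything else here is illustrative.
    """
    pool = revenue * payout_share
    total_plays = sum(plays_by_holder.values())
    if total_plays == 0:
        # Nothing was played, so nothing is distributed.
        return {holder: 0.0 for holder in plays_by_holder}
    return {
        holder: pool * plays / total_plays
        for holder, plays in plays_by_holder.items()
    }


if __name__ == "__main__":
    # Hypothetical figures: 1,000 euros of revenue and three rights holders.
    example = pro_rata_payouts(
        1000.0, {"label_a": 600, "label_b": 300, "label_c": 100}
    )
    for holder, amount in example.items():
        print(f"{holder}: {amount:.2f} EUR")
```

Under this rule, a rights holder’s income depends on its share of all plays on the platform rather than on what any individual subscriber pays.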

Nevertheless, the researcher sees areas of further development for the model. “The main problem is that the monthly subscriptions to these services represent an average annual investment that exceeds consumer spending before the streaming era,” explains Marc Bourreau. With initial subscriptions of around €10 per month, streaming platforms represent annual spending of €120 for subscribers – twice the average amount consumers used to spend annually on record purchases.

Sites like Deezer use a freemium model, in which those who do not subscribe can access the service in exchange for being exposed to ads. Given this gap in spending, the researcher concludes that the average music consumer will not subscribe to the premium offers proposed by these platforms: the investment is too high for them. To win over this target group, which constitutes a major economic opportunity, “one of the future challenges for streaming websites will be to offer lower rates,” Marc Bourreau explains.

Streaming platforms: where is the value?

However, rights holders may not agree with this strategy. “I think the platforms would like to lower their prices, but record companies also require a certain threshold, since they are dependent on the sales revenue generated by subscriptions,” explains Marc Bourreau. This price floor prevents any real price competition from taking place. The platforms must therefore find new differentiating features. But where?

In their catalogs, perhaps? The researcher disagrees: “The catalog proposed by streaming sites is almost identical, except for a few details. This is not really a differentiating feature.” What about sound quality, then? The analogy with streaming video, where Netflix charges a higher fee for higher image quality, makes this seem plausible. In reality, though, apart from a few purists, users pay little attention to sound quality. “In economics, we call this a revealed preference: we discover what people prefer by watching what they do,” explains Marc Bourreau.

In fact, the value lies in the algorithms. The primary differentiation is found in the services offered by the platforms: recommendations, user-friendly design, loading times… “To a large extent, the challenge is to help customers who are lost in the abundance of songs,” the economist explains. These algorithms help listeners find their way around the vast range of options.

Competition in this area is fierce, as shown by the acquisition of start-ups and the recruitment of talent in the field… Nothing is left to chance. Since the start of 2016, Spotify has already acquired Cord Project, which develops a messaging application for audio sharing, Soundwave, which creates social interactions based on musical discoveries, and Crowdalbum, which allows for the collection and sharing of photos taken during concerts.

Research in signal processing is also of great interest to streaming platforms, for analyzing audio files, understanding what makes a song unique, and finding songs with the same profile to recommend to users.
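As a rough illustration of “finding songs with the same profile”, the sketch below compares songs using cosine similarity between feature vectors. This is an assumption chosen for illustration: the article does not say which methods the platforms use, and the song names and the three features (for example tempo, acousticness and energy, scaled between 0 and 1) are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        # A zero vector has no direction, so treat it as dissimilar.
        return 0.0
    return dot / (norm_a * norm_b)


def most_similar(target, catalog, top_n=2):
    """Rank the other songs in the catalog by similarity to the target."""
    scored = [
        (title, cosine_similarity(catalog[target], features))
        for title, features in catalog.items()
        if title != target
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_n]


if __name__ == "__main__":
    # Hypothetical audio profiles: [tempo, acousticness, energy].
    catalog = {
        "song_a": [0.90, 0.10, 0.80],
        "song_b": [0.85, 0.15, 0.75],
        "song_c": [0.10, 0.90, 0.20],
    }
    for title, score in most_similar("song_a", catalog):
        print(f"{title}: {score:.3f}")
```

In practice, the difficult part is the step the article attributes to signal-processing research: extracting meaningful features from the audio files in the first place.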

New relationships between stakeholders in the music industry

One thing is clear – sales of physical albums cannot compete with the constantly expanding range of the digital offering. Album sales are in constant decline. Performers and producers have had to adapt and find new agreements. Although artists’ income has historically been based less on album sales and more on proceeds from concerts, this was not the case for labels, which have now come to rely on events, and even merchandising. “The change in consumption has led to the appearance of what are called ‘360° deals’, in which a record company collects all the revenue from its clients’ activities, and pays them a percentage,” explains Marc Bourreau.

Robbie Williams was one of the first music stars to sign a 360° deal in 2001, handing the revenue from his numerous business segments over to his record company. Credits: Matthias Muehlbradt.

Will these changes result in even less job security for artists? “Aside from superstars, you must realize that performers have a hard time making a living from their work,” the economist observes. He bases this view on a study carried out in 2014 with Adami,[2] which shows that with an equivalent level of qualification, an artist earns less than an executive counterpart — showing that music is not a particularly lucrative industry for performers.

Nonetheless, digital technology has not necessarily made things worse for artists. According to Marc Bourreau, “certain online tools now enable amateurs and professionals to produce their own music, by using digital studios, and using social networks to find mixers…” YouTube, Facebook and Twitter offer good opportunities for self-promotion as well. “Fan collectives that operate using social media groups also play a major role in music distribution, especially for lesser-known artists,” he adds.

In 2014, 55% of artists owned or used a home studio for self-production, up from only 45% in 2008. The industry’s digital transition has therefore changed not only how music is consumed, but also how it is produced. Although things seem to be stabilizing in this area as well, it is hard to say whether or not these major digital transformations in the industry are behind us. “It is always hard to predict the future in economics,” Marc Bourreau admits with a smile. “I could tell you something, and then realize ten years from now that I was completely wrong.” No problem, let’s meet again in ten years for a new review!

[1] The Innovation and Regulation Chair includes Télécom ParisTech, Polytechnique and Orange. Its work is focused on studying intangible services and on the dynamics of innovation in the area of information and communication sciences.

[2] Adami: A civil society for the administration of artists’ and performers’ rights. It collects and distributes fees relating to intellectual property rights for artistic works.


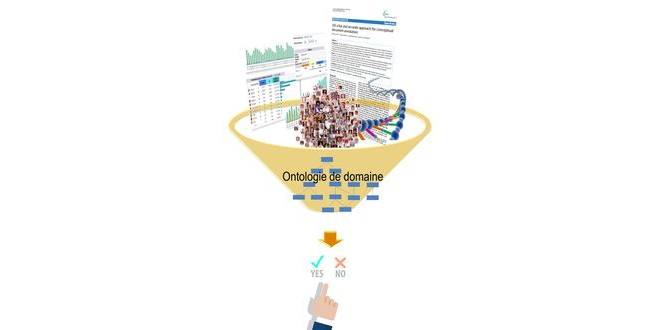

Crowdfunding – more than just a financial tool

A symbol of the new digital economy, crowdfunding enables artists to fund their musical projects with help from the public. Yet crowdfunding platforms also have another advantage: they allow for market research. Jordana Viotto da Cruz, a PhD student at Télécom ParisTech and Paris 13 University, under the joint supervision of Marc Bourreau and François Moreau, has observed in her ongoing thesis work that project sponsors use these tools to obtain information about potential album sales. Based on an econometric study, she showed that for projects that failed to meet their funding threshold, larger pledges had a positive effect on the likelihood of these albums being marketed on platforms such as Amazon in the following months.
