Automated learning from data

Big Data is not only a technological issue but also a societal one. Aware of the value of data, Institut Mines-Télécom has made it a major field of research, because new approaches are needed to store, share and make the best use of data. Machine Learning is one such approach, and Stéphan Clémençon, Professor at Télécom ParisTech, is one of the researchers working on it.

Several zettabytes of data are generated each year, a zettabyte being a thousand billion billion bytes. While this supply of data makes it possible to create new services, it also considerably changes our needs and requirements. Yesterday’s tools are outdated, and new ways of putting this abundance of data to good use must be found. This is the aim of Machine Learning, a discipline that combines mathematics and computer science to create algorithms for processing big data, with a large number of industrial applications.

Télécom ParisTech recruited Stéphan Clémençon, a mathematician specializing in modeling and statistics, to help develop Machine Learning. As he explains, “when you start to deal with very large quantities of data, you enter the realm of probability”. This field has long been neglected, especially by engineering schools, where mathematics was taught in a very deterministic way. Students are now becoming more and more interested in it, which is fortunate, for “questions of large scale raise difficult problems requiring lots of creativity!” New methods are required. The main difference between them and the old methods is that the latter were based on traditional statistics and relied on predetermined models of the data. Those older methods were developed principally in the 1930s, when the challenges were different, computing capacity was very limited and data was expensive to produce.

Finding the hidden meaning in big data

Nowadays there are sensors everywhere, and data is collected automatically, with no predefined use but in the belief that it contains valuable information. The aim is to examine the data closely and make the best possible use of it. The objective of Machine Learning is to design algorithms suited to big data. The idea of enabling machines to learn automatically arose for two reasons: the data is too large for every stage of its processing to be realistically carried out by an expert, and there was a desire to see innovative services emerge, along with learning free of a priori assumptions.

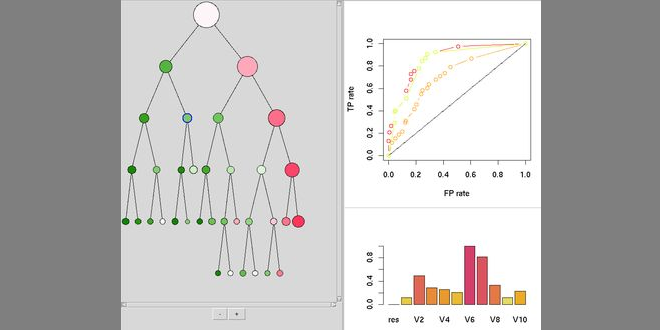

The question is: “how can a machine learn to decide by itself?” How can information be compressed, represented and predicted from data selected to serve as examples? This is the challenge of Machine Learning, which draws on probabilistic modeling based on optimization and on a theory of learning that provides guarantees on the soundness of the results. The major problem is designing algorithms that generalize well. Criteria that are too strict may lead to overfitting, that is, to models that fit the given examples perfectly but do not generalize to new data. Conversely, criteria that are not specific enough produce models with insufficient predictive capacity. In Machine Learning, the right level of complexity must of course be deduced automatically from the data.
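To make this trade-off concrete, here is a minimal sketch, not drawn from the work described in this article, that uses k-nearest-neighbour regression on synthetic one-dimensional data. The number of neighbours `k` acts as the complexity setting: with `k = 1` the model reproduces every training example exactly (overfitting), while a very large `k` averages almost everything and loses predictive capacity. The value of `k` is then chosen automatically from the data by hold-out validation, i.e. by comparing errors on examples not used for fitting. The noisy sine-wave data, the candidate values of `k` and the helper names (`knn_predict`, `mse`, `make_data`, `select_k`) are illustrative assumptions.

```python
import math
import random


def knn_predict(train, x, k):
    """Predict y at x as the mean target of the k training points closest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / k


def mse(train, data, k):
    """Mean squared error over `data` of the k-NN predictor built from `train`."""
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in data) / len(data)


def make_data(n, rng):
    """Hypothetical examples: y = sin(x) plus Gaussian noise with std 0.3."""
    points = []
    for _ in range(n):
        x = rng.uniform(0.0, 2 * math.pi)
        points.append((x, math.sin(x) + rng.gauss(0.0, 0.3)))
    return points


def select_k(train, valid, candidates):
    """Return the candidate k with the lowest error on the held-out set."""
    return min(candidates, key=lambda k: mse(train, valid, k))


if __name__ == "__main__":
    rng = random.Random(0)           # fixed seed for reproducibility
    train = make_data(200, rng)      # examples used to build the predictor
    valid = make_data(100, rng)      # held-out examples used only to choose k
    candidates = [1, 3, 5, 10, 20, 50, 100, 200]
    for k in candidates:
        print(f"k={k:3d}  train MSE={mse(train, train, k):.3f}  "
              f"validation MSE={mse(train, valid, k):.3f}")
    print("selected k:", select_k(train, valid, candidates))
```

Running the script prints, for each `k`, the error on the training examples and on the held-out examples. The training error is exactly zero at `k = 1`, yet that setting is not the one selected: the held-out error is typically lowest at an intermediate `k`, which is the "right amount of complexity" deduced from the data. In practice, cross-validation over several splits is a common refinement of this single hold-out split.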

A chair for Machine Learning

The chair in “Machine Learning for Big Data” was created at the end of 2013 and brings together fifteen professors, all from Télécom ParisTech. Its aims are to raise awareness of Machine Learning, to illustrate the ubiquity of math and to carry out a research program with four private partners, who are providing two million euros over five years as well as real, concrete challenges:

- Criteo, a world leader in targeted advertising, aims to show each internet user the link they are most likely to click on, based on their browsing history. How can the enormous space of the Web be explored?

- The Safran group manufactures 70% of the world’s civil and military aircraft engines. How can anomalies be detected in real time, and the replacement of a part recommended before a failure occurs?

- PSA Peugeot Citroën hopes to connect its data with usage. How can production costs be reduced and the commercial offering optimized, with models that meet market expectations?

- A French banking group is launching an all-digital bank. How can client accounts be monitored in real time? How can the bank be made simpler to use, and the right financial products offered?

Designing new services from data

“The multiple applications of Machine Learning are a driving force for research”, says Stéphan Clémençon, giving a number of examples showing the variety of fields in which big data is collected and used: “automated facial recognition in biometrics, risk management in finance, analysis of social networks in viral marketing, improving the relevance of results produced by search engines, security in intelligent buildings or in transport, surveillance of infrastructures and predictive maintenance through on-board systems, etc.”

In Machine Learning, the potential applications come first; the math then makes it possible to understand and clearly formulate them, which significantly improves the process. A “professional” knowledge of these applications is therefore necessary. It was with this in mind that the chair in Machine Learning for Big Data of Télécom ParisTech (see above) was created with Criteo, PSA Peugeot Citroën, the Safran group and a major French bank. The idea is for industry and academia to work together on the partners’ projects to produce effective results, giving some participants better knowledge of the state of the art and others a keener understanding of the challenges of real applications.

Big Data refers both to a specific infrastructure and to a set of unresolved problems. Stéphan Clémençon regrets that in France, “we have missed out on the equipment stage”, but fortunately, he adds, “we do have a large number of innovative SMEs headed by well-educated students, especially in applied mathematics.” Data engineering is multidisciplinary by definition, and the trump card of a school like Télécom ParisTech, which teaches across many fields, is its ability to offer specialized programs. This matters all the more since, as Stéphan Clémençon underlines, “Machine Learning relates to key business challenges and there are lots of potential applications in this field.”

Stimulating Machine Learning research and teaching

Stéphan Clémençon joined Télécom ParisTech in 2007 to develop teaching and research in Machine Learning, that is, automated learning from data. He is in charge of the STA (Statistics and Applications) group and heads the Advanced Master’s program titled “Management and Analysis of Big Data”. He also set up the “Data Scientist” Specialized Studies Certificate, a continuing-education program awarded by the school to engineers who want to strengthen their skills in Machine Learning techniques. Stéphan Clémençon also teaches at ENS Cachan and at the University of Paris Diderot, and is an Associate Professor at the Ecole des Ponts ParisTech and ENSAE ParisTech.
