Recovering uranium without digging: in situ leaching

In light of the increasing economic value of underground resources, and the environmental problems caused by disused mines, research into alternative solutions for extracting raw materials is rapidly increasing. One solution being studied is in situ leaching for recovering uranium. During the Natural Resources and Environment conference that took place November 5-6, 2014 at Institut Mines-Télécom, Vincent Lagneau, a researcher at the Mines ParisTech Research Center for Geosciences, presented the results obtained by the “Reactive Hydrodynamics” team in the field of predictive modeling.

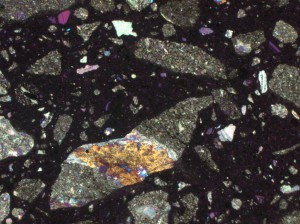

In situ leaching (ISL) is a process that dissolves easily soluble metals, such as copper and uranium, directly in the deposit. Using a series of injection and production wells, an acid solution called a lixiviant is injected into the subsoil, then pumped back out around ten meters away. “To carry out an in situ leaching operation, a porous, permeable and ideally confined environment is required,” explains Vincent Lagneau, a researcher at Mines ParisTech. “The lixiviant solution must be able to circulate while avoiding leaks, which represent both an investment loss and an environmental risk.” At the production wellhead, all that remains is to separate the target metals from the waste.

This alternative is well suited to the uranium deposits currently being developed: deep, extensive deposits, 10 to 20 kilometers long, with low-grade uranium, that cannot be exploited using open-pit or underground mines. “It has been so successful that industrialists have decided to invest: 40% of the world’s production of uranium is produced using in situ leaching,” primarily in Kazakhstan and Australia.

Optimizing the technique and assessing environmental impacts

However, this technology, developed in the early 1960s, is empirical. The researchers are currently working on optimizing it. “This involves rationalizing it. If we understand the processes, we can find the right levers to reduce operating costs, increase the quantity of recovered uranium, and improve the retrieval speed.” Currently, it takes three years to recover 90% of the uranium from an environment.

The research also involves an environmental aspect: “When we end the operation after a three-year period, there is still acid everywhere. We can use our tools to try to understand what happens to the site afterwards.” They must determine how long it will take for the site to return to its initial state.

Research combining hydrogeology and geochemistry

“We can’t look and see what’s happening 400 meters down, we don’t know where the uranium is, nor how it behaves.” To gain an understanding of these processes, the “Reactive Hydrodynamics” team develops models combining hydrogeology and geochemistry. The chemical reactions that take place between the injection well and the production well are directly linked to the transport of the acid solution and the dissolved elements. The reactions also interfere with each other in space and time, due to the flow of water.

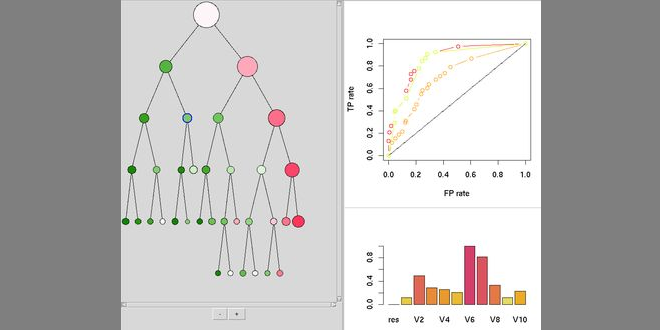

Once the processes have been identified, the researchers convert them into equations: the dissolution of uranium, the consumption of acid, the pressure differences between the wells, the water velocity in the environment… These equations are integrated into the algorithms developed by Vincent Lagneau and his team (HYTEC) and provide numerical results, such as the concentration of uranium and the quantity of acid consumed, which can be compared with the operator’s observations at the wellhead. If the model matches those observations, it can then be used to understand what happens in the injection well, or between the two wells, and to test other operating scenarios. Half of the researchers’ work involves developing models, and the other half involves applying them. “This balance is very important to us, because each part inspires progress in the other. By applying the models, we can identify the needs, improve our code, and therefore carry out better studies, or studies we could not carry out before.”
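To give a rough idea of what coupling water flow and chemistry can look like in practice, here is a minimal sketch in Python. It is not HYTEC and does not reproduce the team's equations: it is a toy one-dimensional column between an injection well and a production well, in which acid is carried by the water (a simple upwind transport step) and then reacts with the uranium in the rock (a simple kinetic dissolution step). The function name `simulate_isl` and every parameter value (rate constant, acid consumed per unit of uranium, number of cells) are hypothetical, dimensionless assumptions chosen only for illustration.

```python
def simulate_isl(n_cells=50, n_steps=2000, courant=0.5, k=0.05,
                 acid_in=1.0, mineral0=1.0, acid_per_u=2.0):
    """Toy 1D reactive transport between an injection and a production well.

    All quantities are dimensionless and illustrative (assumptions, not
    field data). Cell 0 is at the injection well, the last cell is at the
    production well.
    """
    acid = [0.0] * n_cells            # dissolved acid in each cell
    u_aq = [0.0] * n_cells            # dissolved uranium in each cell
    mineral = [mineral0] * n_cells    # solid uranium still in the rock
    total = mineral0 * n_cells        # uranium initially in place
    produced = 0.0                    # cumulative uranium pumped out
    recovery = []                     # recovered fraction after each step

    for _ in range(n_steps):
        # Uranium leaving the last cell toward the production well.
        produced += courant * u_aq[-1]

        # 1) Transport: explicit upwind advection (stable for courant <= 1).
        for conc, inlet in ((acid, acid_in), (u_aq, 0.0)):
            for i in range(n_cells - 1, 0, -1):
                conc[i] += courant * (conc[i - 1] - conc[i])
            conc[0] += courant * (inlet - conc[0])

        # 2) Chemistry: acid dissolves uranium, limited by the kinetic rate,
        #    the mineral left in the cell, and the acid available.
        for i in range(n_cells):
            dissolved = min(k * acid[i], mineral[i], acid[i] / acid_per_u)
            mineral[i] -= dissolved
            u_aq[i] += dissolved
            acid[i] -= acid_per_u * dissolved

        recovery.append(produced / total)

    return recovery, acid, u_aq, mineral


if __name__ == "__main__":
    recovery, acid, u_aq, mineral = simulate_isl()
    # First step at which 90% of the uranium has been recovered, if any.
    t90 = next((t for t, r in enumerate(recovery) if r >= 0.9), None)
    print("final recovered fraction:", round(recovery[-1], 3))
    print("step at which 90% is recovered:", t90)
```

The list `recovery` plays the role of the production curve that would be compared with wellhead observations, while `acid` and `mineral` show what remains in the ground at the end of the run. Changing `acid_in` or `k` is a crude stand-in for testing other operating scenarios.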

A predictive model used successfully

The AREVA in situ leaching operation site in Kazakhstan (Source: Vincent Lagneau).

This work has been successful: Vincent Lagneau’s team has succeeded in developing a predictive model, in partnership with AREVA, that was used operationally two years ago, in Kazakhstan. “Today, if someone gives me a site that has not yet been operated, I run my model and can tell what the production curve will be like and what the environmental impacts will be. It is really a key result for us.”

Eventually, the tool developed by Vincent Lagneau and his research team could be used to choose the best well locations and injection solution for optimizing the operation of a site, and to assess its impacts in advance. “We are now applying the model to prospective sites in Mongolia: we assess the fluid circulation in the environment up to the zones in which it could rise to the surface (wells, faults), and changes in its quality as it travels through the system (reduction of the acidity and residual uranium fixation).”

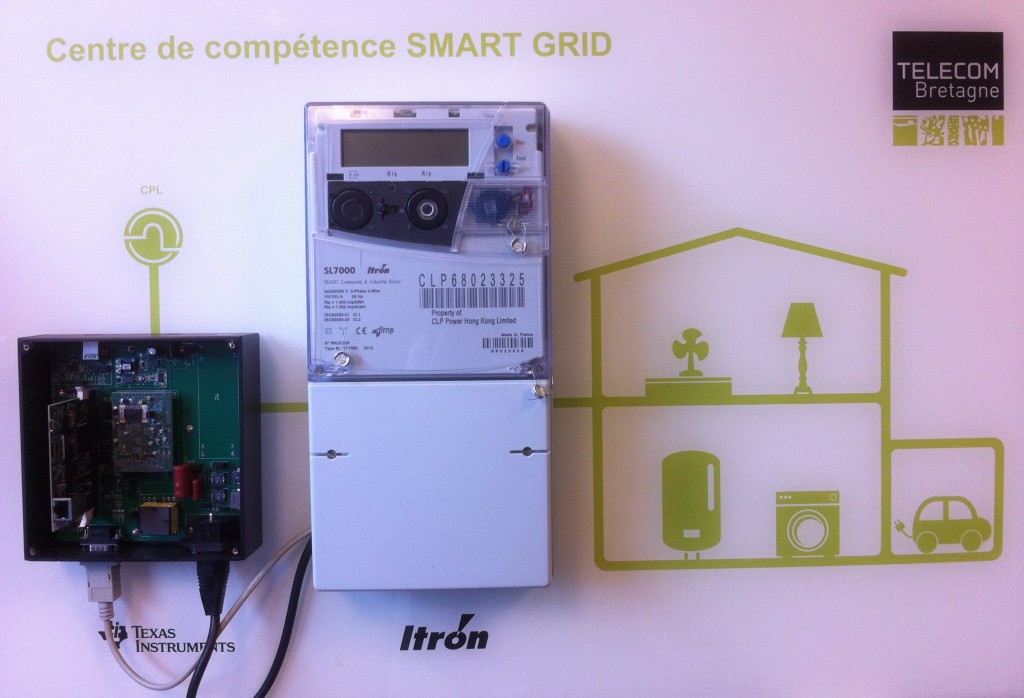
